Part 1: Setting Up ELK

Official site: https://www.elastic.co/cn/

Official definitive guide: https://www.elastic.co/guide/cn/elasticsearch/guide/current/index.html

Installation guide: https://www.elastic.co/guide/en/elasticsearch/reference/5.x/rpm.html

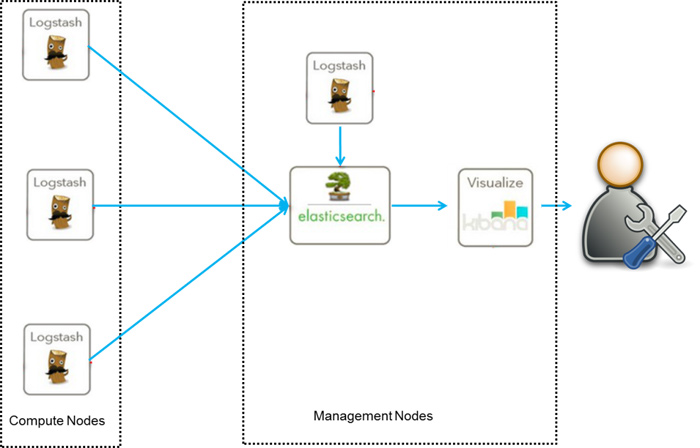

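ELK is short for Elasticsearch, Logstash, and Kibana. These three are the core components of the stack, but not the whole of it.

Elasticsearch is a real-time full-text search and analytics engine that collects, analyzes, and stores data. It is a scalable distributed system that exposes REST and Java APIs for efficient search, and it is built on top of the Apache Lucene search library.

Logstash is a tool for collecting, parsing, and filtering logs. It supports almost any type of log, including system logs, error logs, and custom application logs. It can receive logs from many sources, such as syslog, message queues (for example RabbitMQ), and JMX, and it can output data in many ways, including email, websockets, and Elasticsearch.

Kibana is a web-based graphical interface for searching, analyzing, and visualizing the log data stored in Elasticsearch indices. It retrieves data through Elasticsearch's REST interface, and it lets users both build custom dashboard views of their own data and query and filter that data in ad hoc ways.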

Environment

- 192.168.1.202: Elasticsearch, Logstash, Kibana, Nginx, Apache httpd, Redis
- 192.168.1.201: Logstash

Installation

Import the GPG key for the Elasticsearch yum repository (this must be done on every server):

# rpm --import https://artifacts.elastic.co/GPG-KEY-elasticsearch

Create the yum repository file:

# vim /etc/yum.repos.d/elasticsearch.repo

Add the following to elasticsearch.repo:

[elasticsearch-5.x]
name=Elasticsearch repository for 5.x packages
baseurl=https://artifacts.elastic.co/packages/5.x/yum
gpgcheck=1
gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch
enabled=1
autorefresh=1
type=rpm-md

Install Elasticsearch:

# yum install -y elasticsearch

Install Java (the Java version must be 1.8 or later):

wget http://download.oracle.com/otn-pub/java/jdk/8u131-b11/d54c1d3a095b4ff2b6607d096fa80163/jdk-8u131-linux-x64.rpm

rpm -ivh jdk-8u131-linux-x64.rpm

Verify the installation with java -version. The output should include:

Java(TM) SE Runtime Environment (build 1.8.0_131-b11)
Java HotSpot(TM) 64-Bit Server VM (build 25.131-b11, mixed mode)

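Create a directory for the Elasticsearch data and change its owner and group (/data/es-data is a custom location chosen for the data):

# mkdir -p /data/es-data

# chown -R elasticsearch:elasticsearch /data/es-data

Change the owner and group of the Elasticsearch log directory:

# chown -R elasticsearch:elasticsearch /var/log/elasticsearch/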

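Edit the Elasticsearch configuration file

# vim /etc/elasticsearch/elasticsearch.yml

Find cluster.name, uncomment it, and set the cluster name (demon in this setup).

Find node.name, uncomment it, and set the node name (elk-1 in this setup).

Set the data path to the directory created earlier, and set the log path:

path.data: /data/es-data
path.logs: /var/log/elasticsearch/

Lock the process memory so that it is not swapped out:

bootstrap.memory_lock: true

Add new parameters so that the head plugin can access Elasticsearch (on 5.x, add them by hand if they are not present):

http.cors.allow-origin: "*"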
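Taken together, the settings above could look like the following sketch. It writes an example file to a scratch directory for review instead of touching /etc/elasticsearch. The values for network.host, http.port, and http.cors.enabled are assumptions that do not come from the text above, and OUT_DIR is a hypothetical variable used only here; the cluster and node names are the ones that appear in the log output later in this article.

```shell
# Sketch: write an example elasticsearch.yml to a scratch directory.
# OUT_DIR is a hypothetical variable; nothing is written under /etc.
OUT_DIR="${OUT_DIR:-$(mktemp -d)}"
ES_YML="$OUT_DIR/elasticsearch.yml"

cat > "$ES_YML" <<'EOF'
cluster.name: demon
node.name: elk-1
path.data: /data/es-data
path.logs: /var/log/elasticsearch/
bootstrap.memory_lock: true
# Assumed values, not taken from the article:
network.host: 0.0.0.0
http.port: 9200
# CORS has to be enabled for allow-origin to have any effect
http.cors.enabled: true
http.cors.allow-origin: "*"
EOF

echo "wrote $ES_YML"
```

After reviewing the generated file, the relevant lines can be merged into /etc/elasticsearch/elasticsearch.yml by hand.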

Start the service

/etc/init.d/elasticsearch start

Starting elasticsearch: Java HotSpot(TM) 64-Bit Server VM warning: INFO: os::commit_memory(0x0000000085330000, 2060255232, 0) failed; error='Cannot allocate memory' (errno=12)
# There is insufficient memory for the Java Runtime Environment to continue.
# Native memory allocation (mmap) failed to map 2060255232 bytes for committing reserved memory.
# An error report file with more information is saved as:
# /tmp/hs_err_pid2616.log

This error occurs because the default heap size is 2 GB and the virtual machine does not have that much memory available. Lower the heap size (the -Xms and -Xmx settings) in jvm.options:

vim /etc/elasticsearch/jvm.options
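The heap change can also be scripted. The sketch below rewrites the -Xms and -Xmx lines of a jvm.options file with sed and demonstrates it on a scratch copy. The function name set_heap and the 512m value are assumptions chosen for illustration; pick a size that fits the machine.

```shell
# Sketch: set both heap options in a jvm.options file to the same size.
# Usage: set_heap FILE SIZE   (for example: set_heap /etc/elasticsearch/jvm.options 512m)
set_heap() {
    file="$1"
    size="$2"
    # Keep a backup as FILE.bak; "-i.bak" is accepted by both GNU and BSD sed.
    sed -i.bak \
        -e "s/^-Xms[0-9][0-9]*[kmgKMG]\$/-Xms${size}/" \
        -e "s/^-Xmx[0-9][0-9]*[kmgKMG]\$/-Xmx${size}/" \
        "$file"
}

# Demonstration on a scratch file, not on the real configuration.
demo="$(mktemp)"
printf '%s\n' '# heap settings' '-Xms2g' '-Xmx2g' '-XX:+UseConcMarkSweepGC' > "$demo"
set_heap "$demo" 512m
cat "$demo"
```

Only lines that consist of a heap option are rewritten, so comments and other JVM flags in the file are left alone.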

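Start the service again:

/etc/init.d/elasticsearch start

Check the service status. If there are errors, look at the log with less /var/log/elasticsearch/demon.log (the log file is named after the cluster).

Enable the service at boot:

# chkconfig elasticsearch on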

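Notes

Several system limits need to be raised, otherwise Elasticsearch may fail to start.

vim /etc/security/limits.conf

Append the following at the end of the file (elk is the user that starts Elasticsearch; * can be used instead to cover all users):

elk soft memlock unlimited
elk hard memlock unlimited

vim /etc/security/limits.d/90-nproc.conf

Change the 1024 in this file to 2048 (Elasticsearch requires at least 2048).

One more issue to watch for: the following log entries also cause startup to fail, and this problem took a long time to track down.

[2017-06-14T19:19:01,641][INFO ][o.e.b.BootstrapChecks ] [elk-1] bound or publishing to a non-loopback or non-link-local address, enforcing bootstrap checks
[2017-06-14T19:19:01,658][ERROR][o.e.b.Bootstrap ] [elk-1] node validation exception
[1] bootstrap checks failed
[1]: system call filters failed to install; check the logs and fix your configuration or disable system call filters at your own risk

Solution: add the following setting to the configuration file. It turns off the system call filters, which cannot be installed on older kernels without seccomp support (for example, the CentOS 6 kernel).

vim /etc/elasticsearch/elasticsearch.yml

bootstrap.system_call_filter: false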

Request port 9200 (from a browser or with curl) to confirm that Elasticsearch started successfully

The port should be in the listening state:

tcp 0 0 :::9200 :::* LISTEN 2934/java

# curl http://127.0.0.1:9200/

The response includes fields such as:

"cluster_name" : "demon",
"cluster_uuid" : "kM0GMFrsQ8K_cl5Fn7BF-g",
"build_hash" : "780f8c4",
"build_date" : "2017-04-28T17:43:27.229Z",
"build_snapshot" : false,
"lucene_version" : "6.5.0"
"tagline" : "You Know, for Search"

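How to interact with Elasticsearch

Client libraries are available for languages including JavaScript, .NET, PHP, Perl, and Python.

The REST API can also be called directly with curl:

# curl -i -XGET 'localhost:9200/_count?pretty'

The response headers include:

content-type: application/json; charset=UTF-8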

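Installing plugins

elasticsearch-head can be installed either from its Docker image or by downloading the project from GitHub. Choose one of the two methods below.

1. Use the prebuilt elasticsearch-head Docker image

# docker run -p 9100:9100 mobz/elasticsearch-head:5

Once the container has been downloaded and started, open http://localhost:9100/ in a browser.

2. Install elasticsearch-head with git

# git clone https://github.com/mobz/elasticsearch-head.git

Then, from the cloned directory, install the dependencies and start the server as described in the project README (npm install, followed by npm run start), and open http://localhost:9100/ in a browser.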

Using Logstash

Official installation guide: https://www.elastic.co/guide/en/logstash/current/installing-logstash.html

Import the GPG key and install Logstash:

# rpm --import https://artifacts.elastic.co/GPG-KEY-elasticsearch

# yum install -y logstash

Create a symlink so that the installation path does not have to be typed every time a command is run (Logstash is installed under /usr/share by default):

ln -s /usr/share/logstash/bin/logstash /bin/

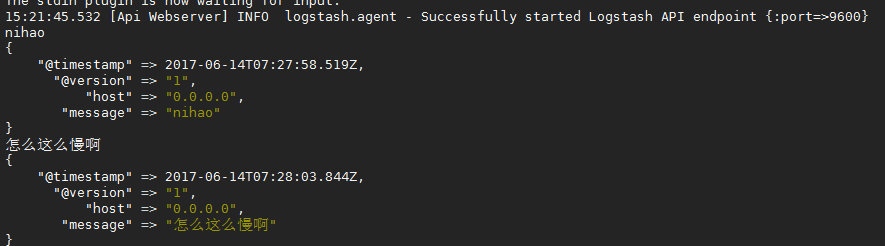

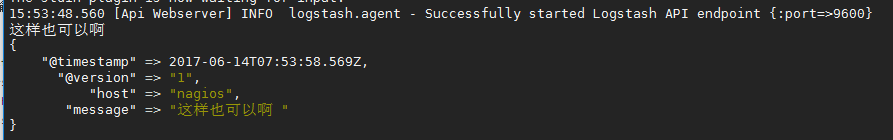

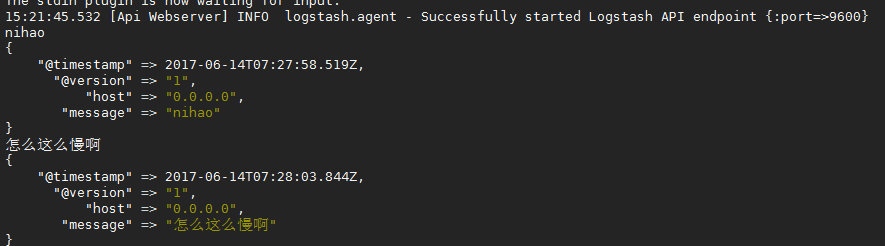

Run Logstash with a pipeline passed on the command line that reads from standard input and writes to standard output:

# logstash -e 'input { stdin { } } output { stdout {} }'

Use the rubydebug codec to print each event in a detailed, structured form:

# logstash -e 'input { stdin { } } output { stdout {codec => rubydebug} }'

To keep the standard output and also send the events to Elasticsearch:

# /usr/share/logstash/bin/logstash -e 'input { stdin { } } output { elasticsearch { hosts => ["192.168.1.202:9200"] } stdout { codec => rubydebug }}'

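Using a configuration file with Logstash

Official guide: https://www.elastic.co/guide/en/logstash/current/configuration.html

# vim /etc/logstash/conf.d/elk.conf

The file holds the same kind of pipeline as the -e commands, with one output to Elasticsearch and one to standard output:

input { stdin { } }
output {
  elasticsearch { hosts => ["192.168.1.202:9200"] }
  stdout { codec => rubydebug }
}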

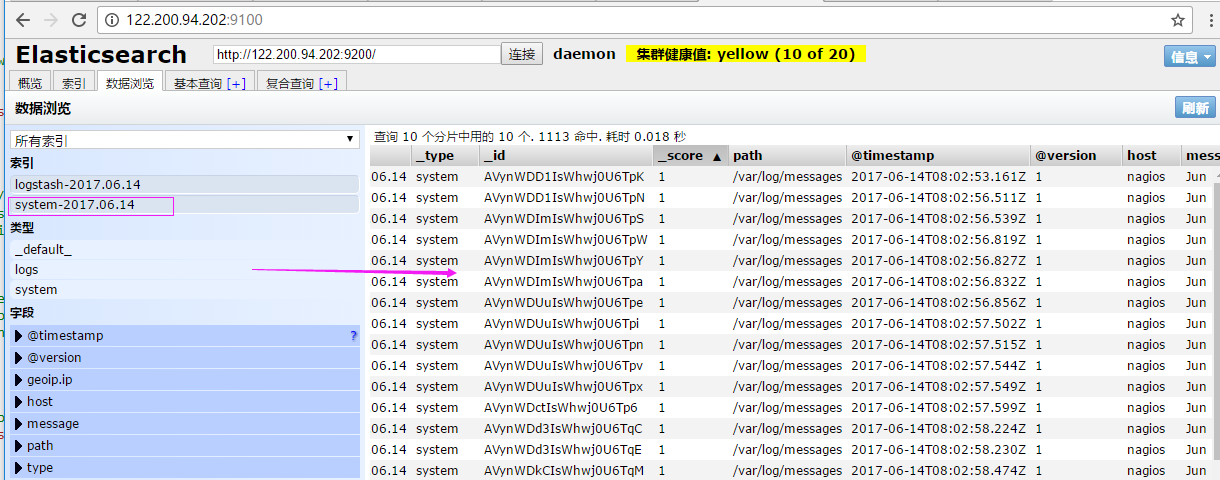

Logstash input types

Official guide: https://www.elastic.co/guide/en/logstash/current/input-plugins.html

The file input reads events from log files. Edit elk.conf:

# vim /etc/logstash/conf.d/elk.conf

File input settings:

path => "/var/log/messages"
start_position => "beginning"

Elasticsearch output settings:

hosts => ["192.168.1.202:9200"]
index => "system-%{+YYYY.MM.dd}"
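To show how these settings fit into a complete pipeline, here is a minimal sketch that writes a full configuration to a scratch directory. The type value is an assumed label, and CONF_DIR and ELK_CONF are hypothetical names used only in this example; the paths, host, and index name are the ones from the text.

```shell
# Sketch: a complete file-input pipeline, written to a scratch directory.
CONF_DIR="${CONF_DIR:-$(mktemp -d)}"
ELK_CONF="$CONF_DIR/elk.conf"

cat > "$ELK_CONF" <<'EOF'
input {
  file {
    path => "/var/log/messages"
    type => "system"               # assumed label, not from the article
    start_position => "beginning"  # read the file from its first line
  }
}
output {
  elasticsearch {
    hosts => ["192.168.1.202:9200"]
    index => "system-%{+YYYY.MM.dd}"  # one index per day
  }
}
EOF

echo "wrote $ELK_CONF"
```

Before copying a file like this into /etc/logstash/conf.d/, its syntax can be checked with logstash -f "$ELK_CONF" --config.test_and_exit.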

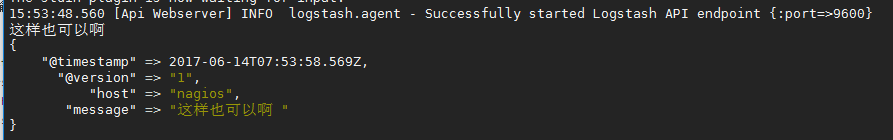

Run Logstash with the elk.conf configuration file to collect and process the log:

# logstash -f /etc/logstash/conf.d/elk.conf

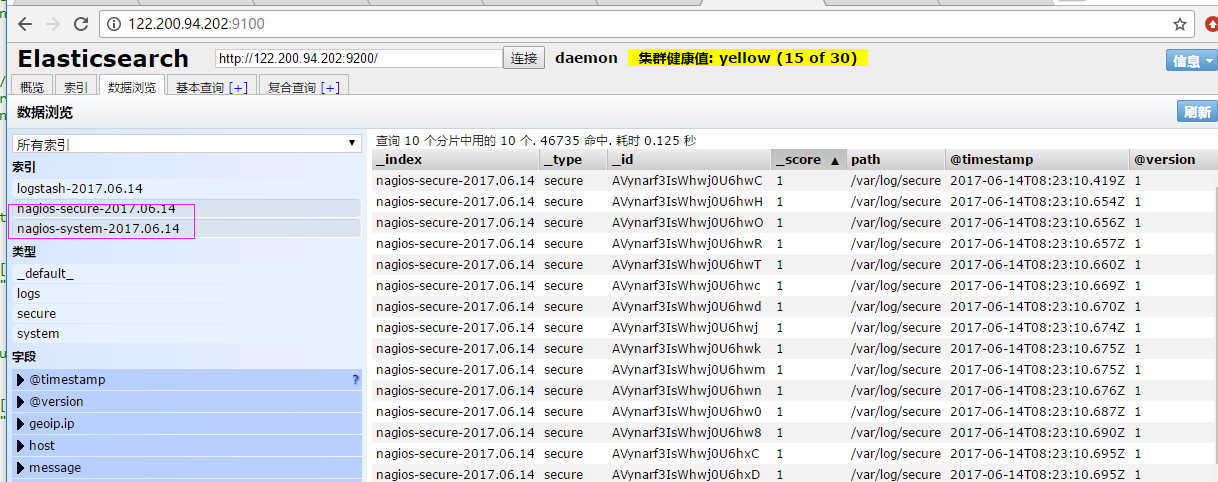

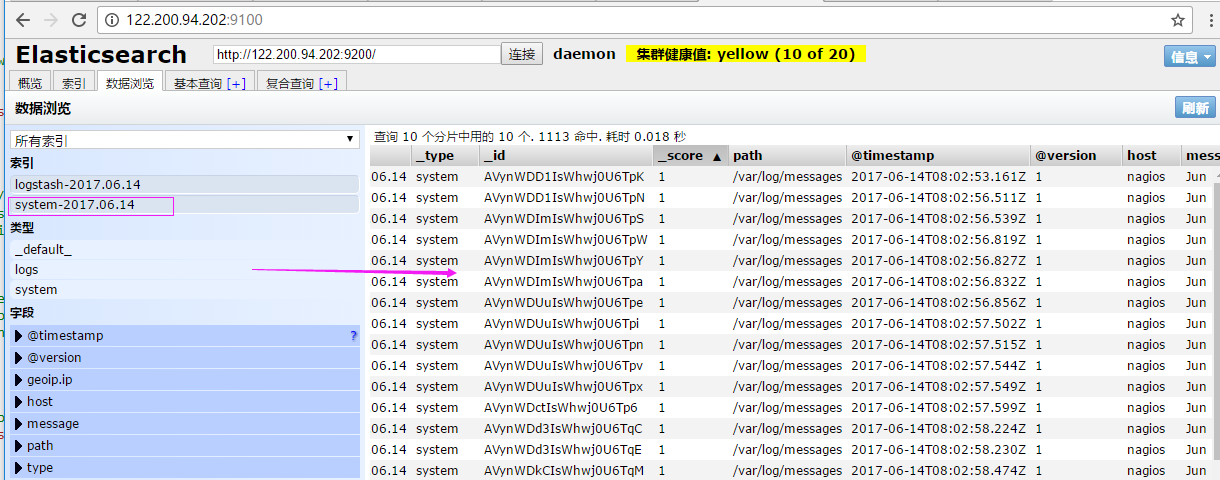

Next, add the security log and store each log type in its own index. Continue editing elk.conf:

# vim /etc/logstash/conf.d/elk.conf

File input settings, one block per log file:

path => "/var/log/messages"
start_position => "beginning"

path => "/var/log/secure"
start_position => "beginning"

Elasticsearch output settings, one block per log type:

hosts => ["192.168.1.202:9200"]
index => "nagios-system-%{+YYYY.MM.dd}"

hosts => ["192.168.1.202:9200"]
index => "nagios-secure-%{+YYYY.MM.dd}"
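Routing each log to its own index needs something to tell the events apart. One common way, sketched below, is to set a type on every file input and test it in the output section. The type names system and secure are assumptions chosen to match the index names, and CONF_DIR and ELK_CONF are hypothetical names used only here.

```shell
# Sketch: two file inputs routed to separate daily indices by type.
CONF_DIR="${CONF_DIR:-$(mktemp -d)}"
ELK_CONF="$CONF_DIR/elk.conf"

cat > "$ELK_CONF" <<'EOF'
input {
  file {
    path => "/var/log/messages"
    type => "system"    # assumed type name
    start_position => "beginning"
  }
  file {
    path => "/var/log/secure"
    type => "secure"    # assumed type name
    start_position => "beginning"
  }
}
output {
  # Each conditional sends one log type to its own index.
  if [type] == "system" {
    elasticsearch {
      hosts => ["192.168.1.202:9200"]
      index => "nagios-system-%{+YYYY.MM.dd}"
    }
  }
  if [type] == "secure" {
    elasticsearch {
      hosts => ["192.168.1.202:9200"]
      index => "nagios-secure-%{+YYYY.MM.dd}"
    }
  }
}
EOF

echo "wrote $ELK_CONF"
```

Adding another log file then means adding one more file block and one more conditional with a matching type.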

Run Logstash again with the updated elk.conf.

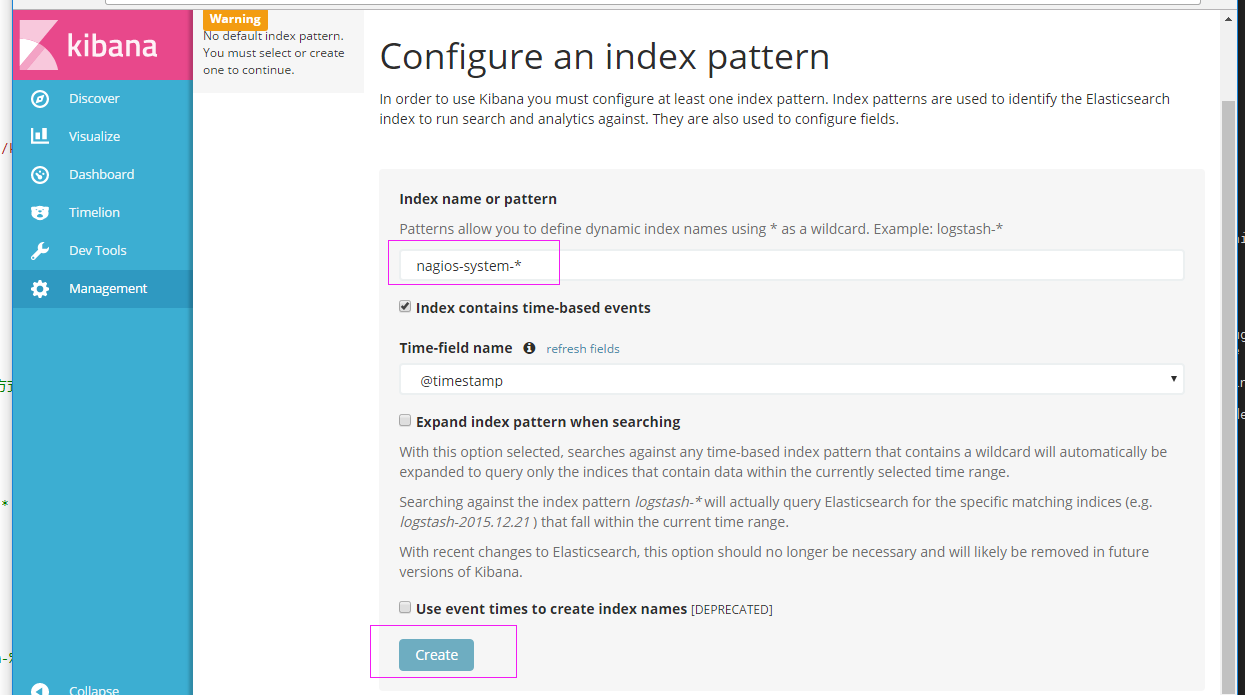

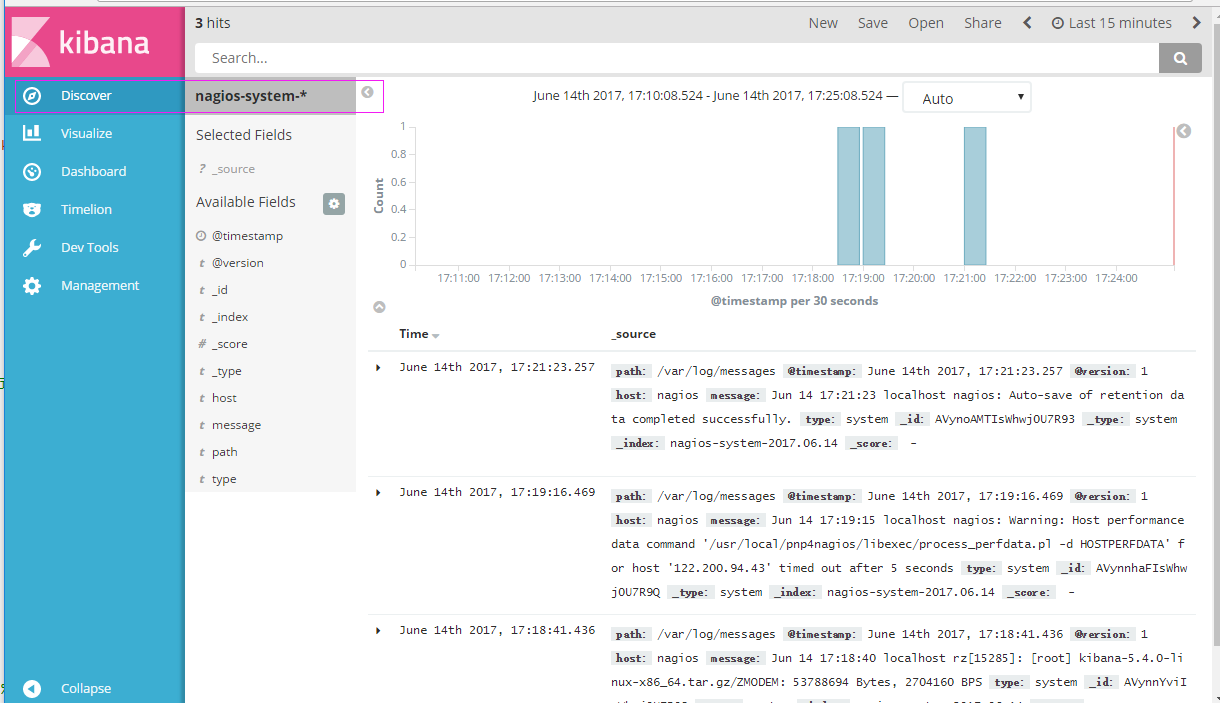

Once all of this works, install Kibana to display the data in a web front end.

Installing and using Kibana

Official installation guide: https://www.elastic.co/guide/en/kibana/current/install.html

Download the Kibana tar.gz package:

# wget https://artifacts.elastic.co/downloads/kibana/kibana-5.4.0-linux-x86_64.tar.gz

Extract it:

# tar -xzf kibana-5.4.0-linux-x86_64.tar.gz

Move the extracted directory to /usr/local:

# mv kibana-5.4.0-linux-x86_64 /usr/local

Create a symlink:

# ln -s /usr/local/kibana-5.4.0-linux-x86_64/ /usr/local/kibana

Edit the Kibana configuration file:

# vim /usr/local/kibana/config/kibana.yml

Point Kibana at Elasticsearch:

elasticsearch.url: "http://192.168.1.202:9200"

Install screen so that Kibana can run in the background (this is optional; any other way of running it in the background works too). Then start Kibana:

# /usr/local/kibana/bin/kibana

Kibana listens on port 5601:

tcp 0 0 0.0.0.0:5601 0.0.0.0:* LISTEN 17007/node

Part 2: ELK in Practice

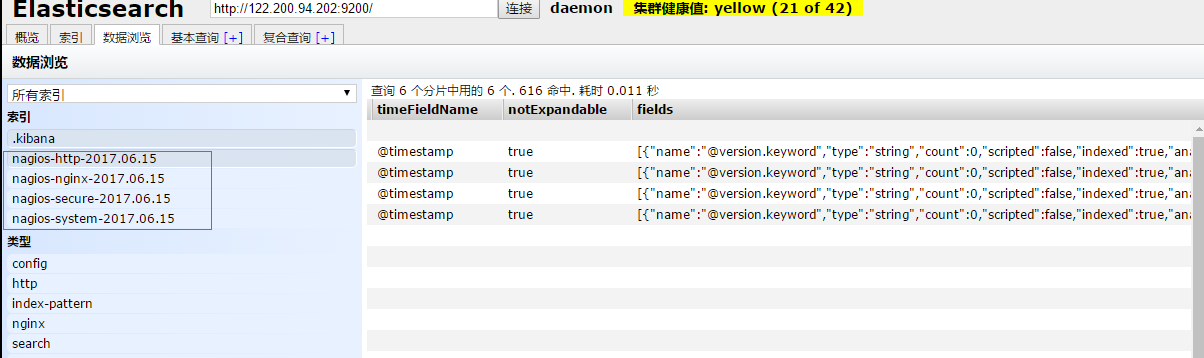

Now that indices can be created, the next step is to send the nginx, Apache, messages, and secure logs to the front end for display. (If Nginx is already installed, just modify its configuration; otherwise install it first.)

Edit the nginx configuration file and add the following inside the http block:

log_format json '{"@timestamp":"$time_iso8601",'
'"client":"$remote_addr",'
'"size":"$body_bytes_sent",'
'"responsetime":"$request_time",'
'"referer":"$http_referer",'
'"ua":"$http_user_agent"'
'}';

Change the access_log output format to the json format just defined:

access_log logs/elk.access.log json;

For Apache, define a JSON LogFormat named ls_apache_json. Its fields include:

\"@timestamp\": \"%{%Y-%m-%dT%H:%M:%S%z}t\", \
\"message\": \"%h %l %u %t \\\"%r\\\" %>s %b\", \
\"site\": \"%{Host}i\", \
\"referer\": \"%{Referer}i\", \
\"useragent\": \"%{User-agent}i\" \

Then switch the access log to that format:

CustomLog logs/access_log ls_apache_json

Edit the Logstash configuration file that collects these logs:

vim /etc/logstash/conf.d/full.conf

File input settings, one block per log file:

path => "/var/log/messages"
start_position => "beginning"

path => "/var/log/secure"
start_position => "beginning"

path => "/var/log/httpd/access_log"
start_position => "beginning"

path => "/usr/local/nginx/logs/elk.access.log"
start_position => "beginning"

Elasticsearch output settings, one block per log type:

hosts => ["192.168.1.202:9200"]
index => "nagios-system-%{+YYYY.MM.dd}"

hosts => ["192.168.1.202:9200"]
index => "nagios-secure-%{+YYYY.MM.dd}"

hosts => ["192.168.1.202:9200"]
index => "nagios-http-%{+YYYY.MM.dd}"

hosts => ["192.168.1.202:9200"]
index => "nagios-nginx-%{+YYYY.MM.dd}"

Run Logstash with full.conf:

logstash -f /etc/logstash/conf.d/full.conf

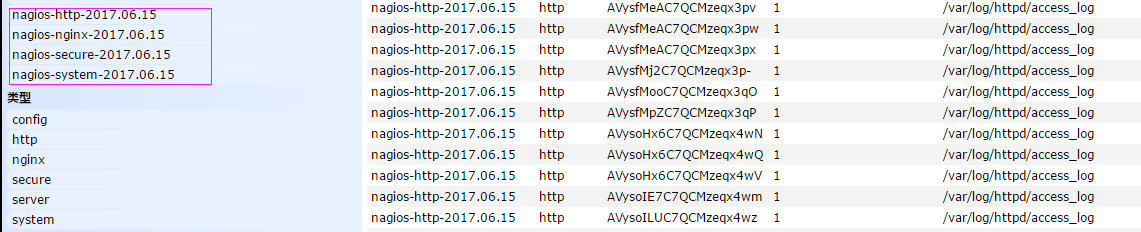

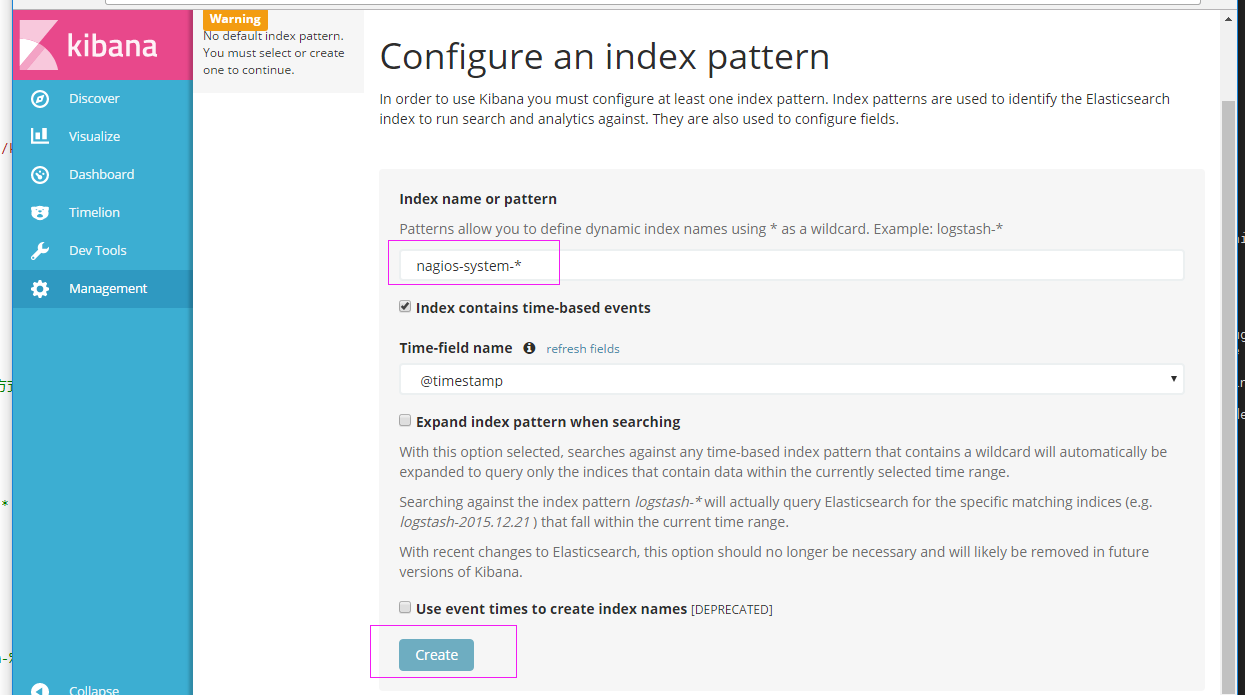

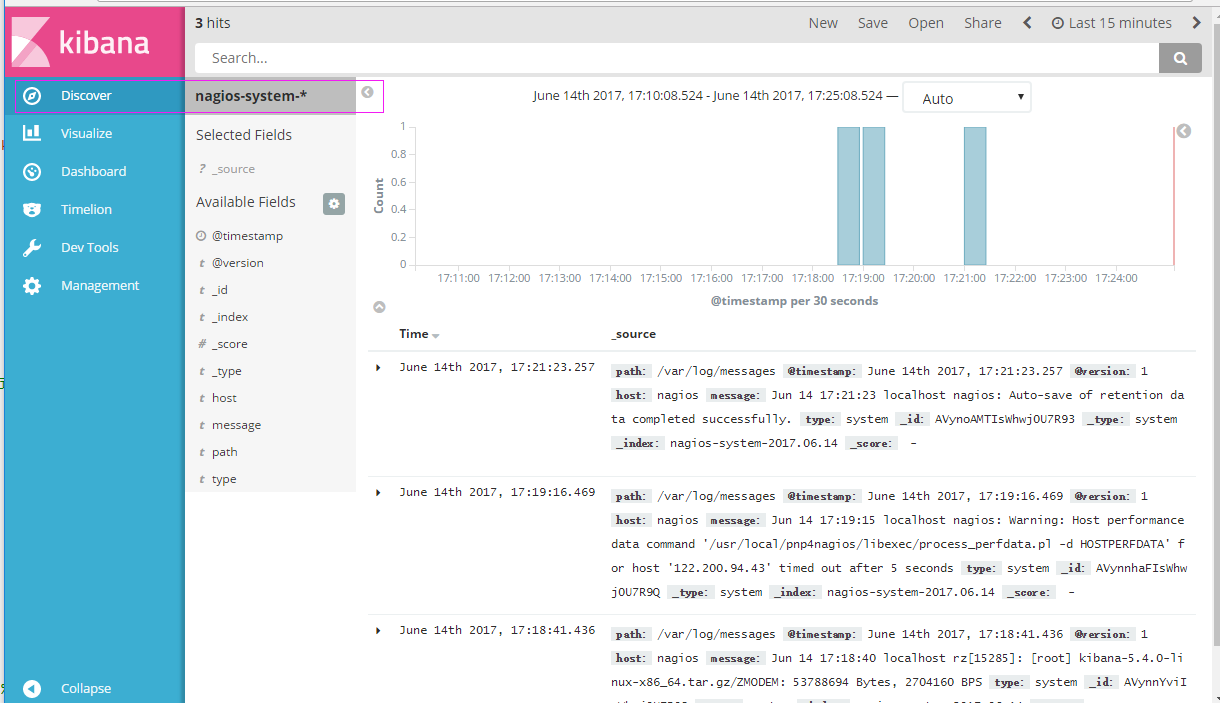

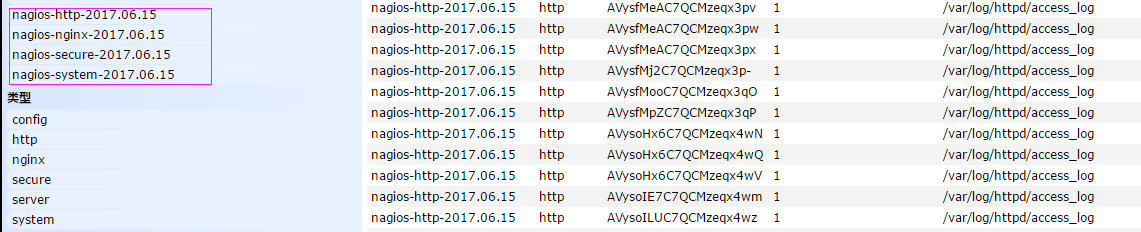

All of the log indices now exist. Next, create the matching index patterns in Kibana (in the same way as before) and look at the result.

Next, display the MySQL slow query log.

In the MySQL slow query log one entry spans several lines, so the entries have to be matched with regular expressions, and multiline is used to combine the lines of an entry into a single event (see the configuration for details).

File input settings, one block per log file:

path => "/var/log/messages"
start_position => "beginning"

path => "/var/log/secure"
start_position => "beginning"

path => "/var/log/httpd/access_log"
start_position => "beginning"

path => "/usr/local/nginx/logs/elk.access.log"
start_position => "beginning"

For the slow query log, the multiline pattern marks the first line of each entry:

path => "/var/log/mysql/mysql.slow.log"
start_position => "beginning"
pattern => "^# User@Host:"

Filter settings. A grok filter tags the SELECT SLEEP entries:

match => { "message" => "SELECT SLEEP" }
add_tag => [ "sleep_drop" ]

A conditional then acts on the tagged events:

if "sleep_drop" in [tags] {

A second grok filter extracts the fields of each slow query entry:

match => { "message" => "(?m)^# User@Host: %{USER:User}\[[^\]]+\] @ (?:(?<clienthost>\S*) )?\[(?:%{IP:Client_IP})?\]\s.*# Query_time: %{NUMBER:Query_Time:float}\s+Lock_time: %{NUMBER:Lock_Time:float}\s+Rows_sent: %{NUMBER:Rows_Sent:int}\s+Rows_examined: %{NUMBER:Rows_Examined:int}\s*(?:use %{DATA:Database};\s*)?SET timestamp=%{NUMBER:timestamp};\s*(?<Query>(?<Action>\w+)\s+.*)\n# Time:.*$" }

A date filter sets the event time from the extracted timestamp and then removes the field:

match => [ "timestamp", "UNIX" ]
remove_field => [ "timestamp" ]

Elasticsearch output settings, one block per log type:

hosts => ["192.168.1.202:9200"]
index => "nagios-system-%{+YYYY.MM.dd}"

hosts => ["192.168.1.202:9200"]
index => "nagios-secure-%{+YYYY.MM.dd}"

hosts => ["192.168.1.202:9200"]
index => "nagios-http-%{+YYYY.MM.dd}"

hosts => ["192.168.1.202:9200"]
index => "nagios-nginx-%{+YYYY.MM.dd}"

hosts => ["192.168.1.202:9200"]
index => "nagios-mysql-slow-%{+YYYY.MM.dd}"
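The multiline handling is the part that is easiest to get wrong, so here is a reduced sketch of only the slow-log path through the pipeline. The negate and what options, the type name mysql-slow, the drop filter, and tag_on_failure are assumptions added to make the example complete; the long field-extraction grok pattern is left out. CONF_DIR and MYSQL_CONF are hypothetical names used only here.

```shell
# Sketch: slow-log input with a multiline codec, plus the SELECT SLEEP filter.
CONF_DIR="${CONF_DIR:-$(mktemp -d)}"
MYSQL_CONF="$CONF_DIR/mysql-slow.conf"

cat > "$MYSQL_CONF" <<'EOF'
input {
  file {
    path => "/var/log/mysql/mysql.slow.log"
    type => "mysql-slow"            # assumed type name
    start_position => "beginning"
    codec => multiline {
      pattern => "^# User@Host:"    # a new slow-log entry starts here
      negate => true                # lines that do NOT match the pattern...
      what => "previous"            # ...are appended to the previous event
    }
  }
}
filter {
  if [type] == "mysql-slow" {
    # Tag entries that are only SELECT SLEEP so they can be discarded.
    grok {
      match => { "message" => "SELECT SLEEP" }
      add_tag => [ "sleep_drop" ]
      tag_on_failure => []          # ordinary entries are not marked as failures
    }
    if "sleep_drop" in [tags] {
      drop {}
    }
  }
}
output {
  if [type] == "mysql-slow" {
    elasticsearch {
      hosts => ["192.168.1.202:9200"]
      index => "nagios-mysql-slow-%{+YYYY.MM.dd}"
    }
  }
}
EOF

echo "wrote $MYSQL_CONF"
```

With negate set to true and what set to previous, every line that does not begin a new entry is glued onto the entry before it, which is what turns one multi-line slow query into one event.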

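View the result: each slow query is displayed as a single entry. Without the multiline matching, every line of the log would be displayed as a separate entry.

Each log has its own output requirements and should be analyzed case by case.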

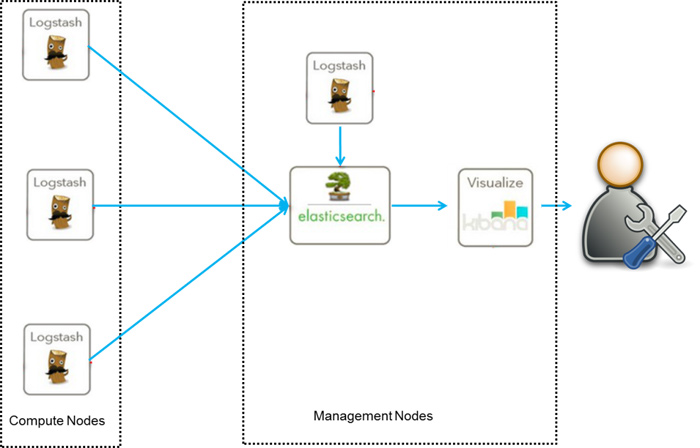

Part 3: The Ultimate ELK Setup

This part places Redis between the log collectors and Elasticsearch.

Restart Redis:

# /etc/init.d/redis restart

Connect to Redis to test it:

# redis-cli -h 192.168.1.202

redis 192.168.1.202:6379> info

Edit redis-out.conf so that data from standard input is stored in Redis:

# vim /etc/logstash/conf.d/redis-out.conf
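A configuration of this kind could look like the sketch below, which reads from standard input and pushes each event onto a Redis list. The database number 6 and the key demo are assumptions chosen for illustration, and CONF_DIR and REDIS_OUT are hypothetical names used only here; the host is the Redis server from the environment section.

```shell
# Sketch: stdin -> Redis list, written to a scratch directory.
CONF_DIR="${CONF_DIR:-$(mktemp -d)}"
REDIS_OUT="$CONF_DIR/redis-out.conf"

cat > "$REDIS_OUT" <<'EOF'
input { stdin { } }
output {
  redis {
    host => ["192.168.1.202"]
    port => 6379
    db => 6               # assumed database number
    data_type => "list"   # push events onto a Redis list
    key => "demo"         # assumed list name
  }
}
EOF

echo "wrote $REDIS_OUT"
```

With these assumed values, the number of queued events can be checked with redis-cli -h 192.168.1.202 -n 6 LLEN demo.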

Run Logstash with redis-out.conf:

# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/redis-out.conf

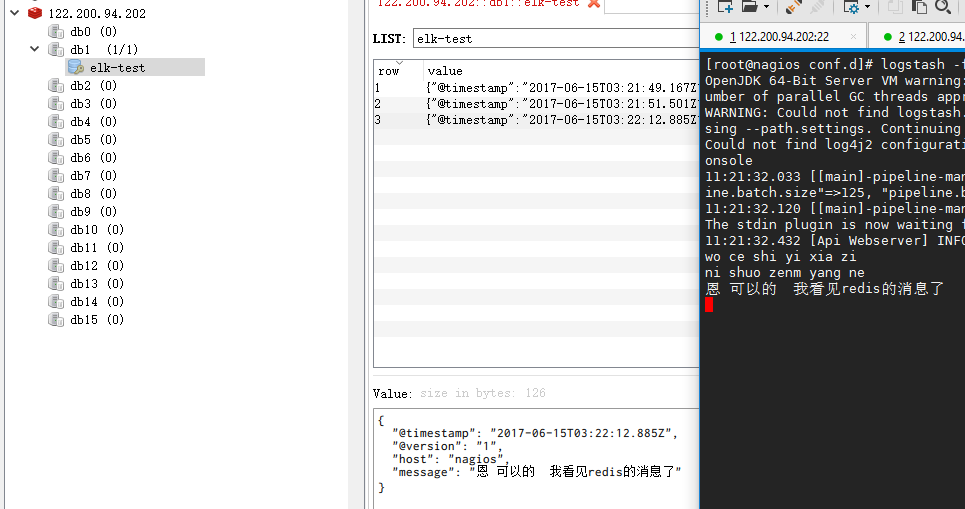

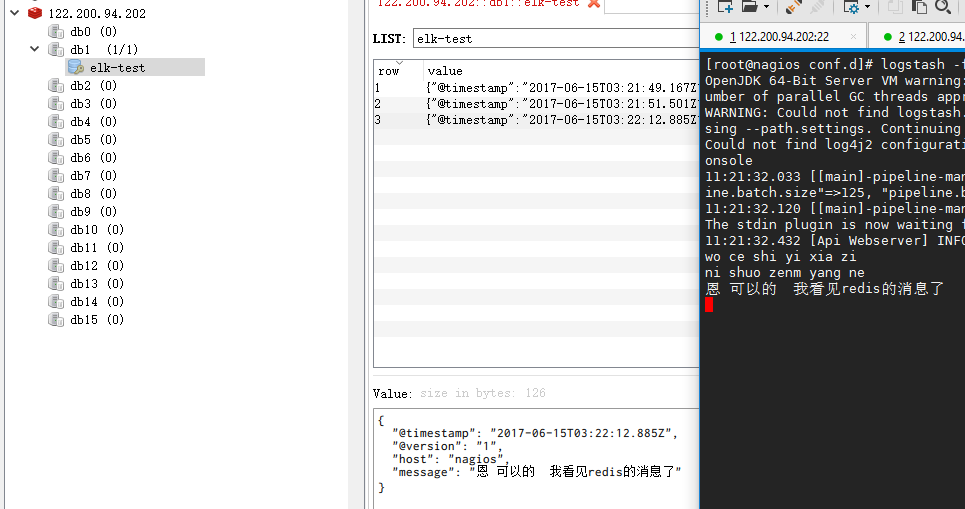

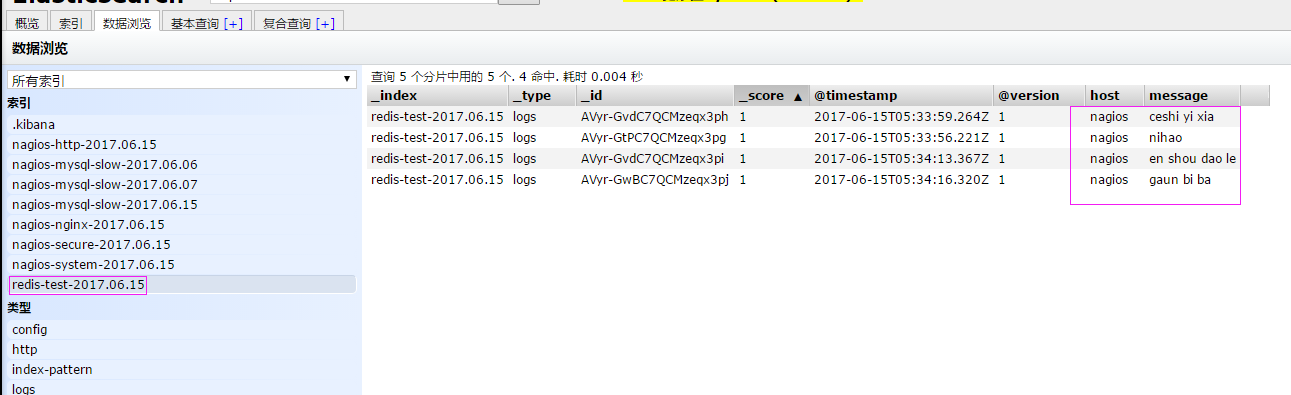

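Once it is running, type some input into Logstash and check the result.

Edit redis-in.conf so that the data stored in Redis is written to Elasticsearch:

# vim /etc/logstash/conf.d/redis-in.conf

Redis input setting:

batch_count => 1 # number of events fetched from the queue per read; the default is 125. If Redis holds fewer than 125 events an error is reported, so set this value while testing.

Elasticsearch output settings:

hosts => ['192.168.1.202:9200']
index => 'redis-test-%{+YYYY.MM.dd}'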
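Put together, the reading side could look like the following sketch. The db and key values are assumptions and must match whatever the pipeline that writes to Redis uses; CONF_DIR and REDIS_IN are hypothetical names used only here. The batch_count, hosts, and index values are the ones given above.

```shell
# Sketch: Redis list -> Elasticsearch, written to a scratch directory.
CONF_DIR="${CONF_DIR:-$(mktemp -d)}"
REDIS_IN="$CONF_DIR/redis-in.conf"

cat > "$REDIS_IN" <<'EOF'
input {
  redis {
    host => "192.168.1.202"
    port => 6379
    db => 6               # assumed; must match the writing pipeline
    data_type => "list"
    key => "demo"         # assumed; must match the writing pipeline
    batch_count => 1      # fetch one event per read while testing
  }
}
output {
  elasticsearch {
    hosts => ['192.168.1.202:9200']
    index => 'redis-test-%{+YYYY.MM.dd}'
  }
}
EOF

echo "wrote $REDIS_IN"
```

Because the input and output sides only share the Redis key, the two Logstash processes can run on different hosts.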

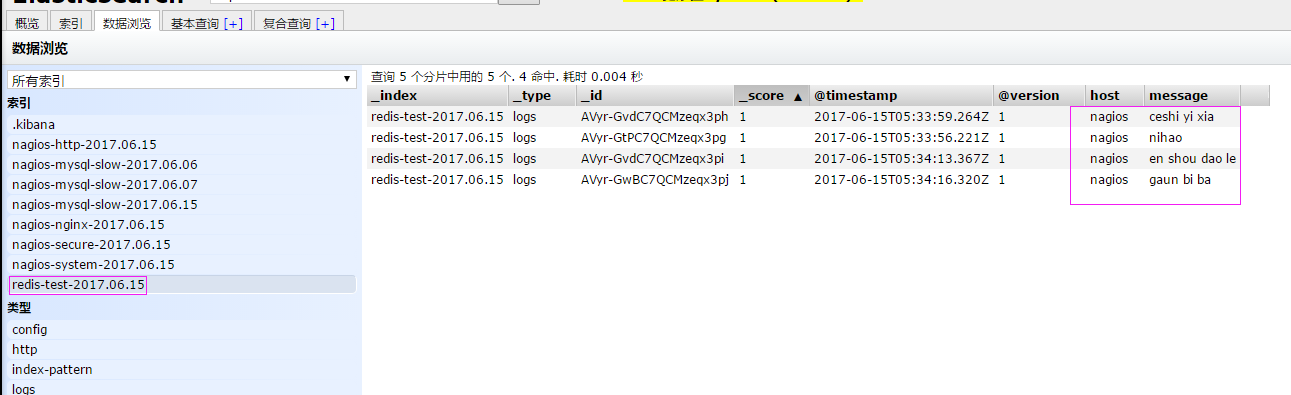

Run Logstash with redis-in.conf:

# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/redis-in.conf

Now modify the earlier configuration file so that every monitored log file is first written to Redis and then read from Redis into Elasticsearch.

File input settings, one block per log file:

path => "/var/log/httpd/access_log"
start_position => "beginning"

path => "/usr/local/nginx/logs/elk.access.log"
start_position => "beginning"

path => "/var/log/secure"
start_position => "beginning"

path => "/var/log/messages"
start_position => "beginning"

Run Logstash with the modified configuration file, which now acts as the shipper:

# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/full.conf

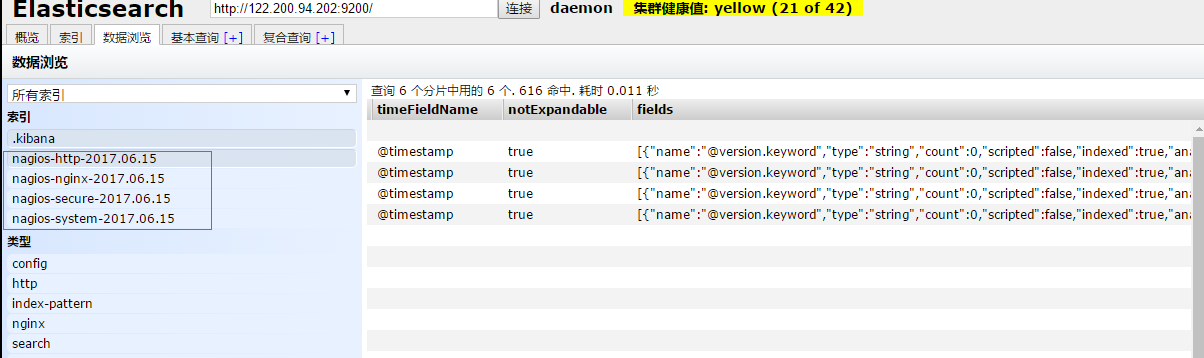

Check in Redis that the data has been written. (If the input log files produce no new entries, nothing is written to Redis either.)

Read the data back out of Redis and write it to Elasticsearch (this experiment needs a second host):

# vim /etc/logstash/conf.d/redis-out.conf

Elasticsearch output settings, one block per log type:

hosts => ["192.168.1.202:9200"]
index => "nagios-system-%{+YYYY.MM.dd}"

hosts => ["192.168.1.202:9200"]
index => "nagios-http-%{+YYYY.MM.dd}"

hosts => ["192.168.1.202:9200"]
index => "nagios-nginx-%{+YYYY.MM.dd}"

hosts => ["192.168.1.202:9200"]
index => "nagios-secure-%{+YYYY.MM.dd}"

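The output still goes to the Elasticsearch instance on 192.168.1.202. To store the data on the current host instead, change hosts in the output section to localhost; to view it in Kibana as well, Kibana must also be deployed on this host. The point of this layout is loose coupling.

Put simply, logs are collected on the clients and written to Redis, either on the server or locally, and the output stage only needs to connect to the Elasticsearch server.

Run Logstash with redis-out.conf:

# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/redis-out.conf

The result is the same as writing directly to the Elasticsearch server, except that the logs are first stored in Redis and then read back out of it.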

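Taking ELK to production

Log types, their sources, and how Logstash collects them:

- System logs: rsyslog, Logstash syslog plugin
- Access logs: nginx, Logstash json codec
- Error logs: file, Logstash multiline
- Runtime logs: file, Logstash json codec
- Device logs: syslog, Logstash syslog plugin
- Debug logs: file, Logstash json or multiline

3. Roll out in this order: system logs --> error logs --> runtime logs --> access logs

Elasticsearch keeps logs indefinitely, so old logs need to be deleted periodically. The following command deletes the indices for the day $n days ago, where $n is the number of days to keep. The date is written with dots so that it matches the YYYY.MM.dd suffix of the index names:

curl -X DELETE "http://xx.xx.com:9200/logstash-*-$(date +%Y.%m.%d -d "-$n days")"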
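As a sketch of how such a cleanup could be wrapped for a cron job, the helper below builds the date suffix in the YYYY.MM.dd form that Logstash's %{+YYYY.MM.dd} index pattern produces and prints the DELETE command instead of running it. The function names index_date and delete_cmd are hypothetical, the host and index prefix in the dry run are taken from earlier examples, and GNU date is assumed for the -d option.

```shell
# Sketch: print the curl command that would delete the daily index from N days ago.
# Assumes GNU date (for the -d option).

# index_date N -> the date N days ago in YYYY.MM.dd form
index_date() {
    date -d "-$1 days" +%Y.%m.%d
}

# delete_cmd HOST PREFIX N -> the curl command for the index PREFIX-<date N days ago>
delete_cmd() {
    printf 'curl -X DELETE "http://%s:9200/%s-%s"\n' "$1" "$2" "$(index_date "$3")"
}

# Dry run: print the command rather than executing it.
delete_cmd 192.168.1.202 nagios-system 30
```

Printing the command first makes it easy to confirm the index name before anything is deleted; piping the output to sh would execute it.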
