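1. Solution overview

The goal is to build an enterprise-grade, highly available, load-balanced cluster using Keepalived + LVS + Tomcat + Memcache Session Manager + Memcached. Two directors run Keepalived + LVS as a master/backup pair, LVS (in DR mode) distributes requests to the back-end Tomcat servers, and Memcache Session Manager (MSM) keeps sessions in Memcached so they are shared across the Tomcat nodes.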

2. Environment preparation

Four virtual machines with 512 MB of RAM each (my laptop is slow and doesn't have much memory).

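3. Keepalived + LVS server installation and configuration

In the Keepalived + LVS design, both director (scheduler) servers need Keepalived and LVS installed and configured in a master/backup relationship, which provides both load balancing and high availability.

Target servers: the master director and the backup director. Installation and configuration are essentially identical on both; only part of keepalived.conf differs.

3.1 System dependencies

```
# yum install gcc
# yum install kernel-devel
# yum install openssl-devel
```

3.2 LVS installation and configuration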

Commands:

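```
# cd /tools
# wget http://www.linuxvirtualserver.org/software/kernel-2.6/ipvsadm-1.24.tar.gz
# ln -sv /usr/src/kernels/2.6.18-8.el5-i686/ /usr/src/linux
# tar -zxvf ipvsadm-1.24.tar.gz
# cd ipvsadm-1.24
# make; make install
```

The symlink points /usr/src/linux at the kernel source tree installed by kernel-devel; the directory name must correspond to your running kernel (see uname -r). Once these steps are done, LVS is ready: the IPVS code itself is part of the kernel, and the user-space management tool ipvsadm is installed under /sbin/. LVS needs no separate configuration after installation; from here on it is configured and managed entirely by Keepalived.

3.3 Keepalived installation and configuration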

Commands:

```
# cd /tools
# wget http://www.keepalived.org/software/keepalived-1.1.17.tar.gz
# tar -zxvf keepalived-1.1.17.tar.gz
# cd keepalived-1.1.17
```

Note: because the symlink /usr/src/linux -> /usr/src/kernels/2.6.18-8.el5-i686/ was created earlier, the --with-kernel-dir option is not used here. Without that symlink, run ./configure --with-kernel-dir=/usr/src/kernels/2.6.18-8.el5-i686 instead, so that Keepalived is built against the kernel's IPVS support (the "Use IPVS Framework : Yes" line in the output indicates this).

```
# ./configure
……
Keepalived configuration
------------------------
Keepalived version : 1.2.2
Compiler : gcc
Compiler flags : -g -O2
Extra Lib : -lpopt -lssl -lcrypto
Use IPVS Framework : Yes
IPVS sync daemon support : Yes
IPVS use libnl : No
Use VRRP Framework : Yes
Use Debug flags : No
# make
# make install
```

Next, copy the service script to the system init directory (so Keepalived starts with the operating system), copy its startup options to the system configuration directory (in practice just -D), copy the program to the system binary directory, create the configuration directory, and register the service to start at boot:

```
# cp /usr/local/etc/rc.d/init.d/keepalived /etc/rc.d/init.d/
# cp /usr/local/etc/sysconfig/keepalived /etc/sysconfig/
# cp /usr/local/sbin/keepalived /usr/sbin/
# mkdir /etc/keepalived
# chkconfig --add keepalived
# chkconfig --level 2345 keepalived on
```

Then create and edit the Keepalived configuration file /etc/keepalived/keepalived.conf, which defines the virtual server (see the attachment for the complete file):

/etc/keepalived/keepalived.conf:

```
global_defs {
    router_id KEEPALIVED_LVS
}

vrrp_sync_group KEEPALIVED_LVS {
    group {
        KEEPALIVED_LVS_WEB
    }
}

vrrp_instance KEEPALIVED_LVS_WEB {
    # Note: on the backup director, set this to BACKUP
    state MASTER
    interface eth0
    lvs_sync_daemon_interface eth0
    garp_master_delay 5
    virtual_router_id 100
    # Note: master/backup priority; the backup is normally lower than the master
    priority 150
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 111111
    }
    virtual_ipaddress {
        10.10.10.10
    }
}

virtual_server 10.10.10.10 80 {
    # Health-check interval (seconds)
    delay_loop 3
    # Load-balancing algorithm: weighted round robin
    lb_algo wrr
    # Forwarding mode: Direct Routing
    lb_kind DR
    # Session persistence time (seconds); 0 means no sticky sessions
    persistence_timeout 0
    protocol TCP

    # Back-end (real) server definitions
    real_server 10.10.10.13 80 {
        # Weight
        weight 1
        # Check that the real server is healthy with an HTTP GET
        HTTP_GET {
            url {
                # URL requested to verify that the back-end service is up
                path /checkRealServerHealth.28055dab3fc0a85271dddbeb0464bfdb
                # MD5 digest of the page content; the server is considered
                # available when the digest of the response matches
                digest 26f11e326fc7c597355f213e5677ae75
            }
            # Connection timeout (seconds)
            connect_timeout 3
            # Number of retries
            nb_get_retry 3
            # Delay before each retry (seconds)
            delay_before_retry 3
        }
    }

    real_server 10.10.10.14 80 {
        weight 1
        HTTP_GET {
            url {
                path /checkRealServerHealth.28055dab3fc0a85271dddbeb0464bfdb
                digest 26f11e326fc7c597355f213e5677ae75
            }
            connect_timeout 3
            nb_get_retry 3
            delay_before_retry 3
        }
    }
}
```
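The digest value in each HTTP_GET block is the MD5 hash of the health-check page's response body; keepalived ships a genhash utility for computing it. As a minimal sketch, assuming the digest is taken over the body only (as genhash does), the same value can be produced with standard tools. page_digest is a hypothetical helper, not part of keepalived.

```bash
#!/bin/bash
# Hypothetical helper (not part of keepalived): compute the value for the
# "digest" line of an HTTP_GET check, i.e. the MD5 of the page body.
page_digest() {
    # Reads the page body on stdin; md5sum prints "<hash>  -", keep only the hash.
    md5sum | awk '{print $1}'
}

# Example (assumes curl is installed and the real server is reachable):
#   curl -s http://10.10.10.13/checkRealServerHealth.28055dab3fc0a85271dddbeb0464bfdb | page_digest
```

If the printed hash differs from the digest in keepalived.conf, keepalived will treat that real server as failed and remove it from the pool.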
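Because the backup director's keepalived.conf differs from the master's only in state and priority, it can be generated from the master's file instead of being edited by hand. This is a sketch assuming the layout above (each setting on its own line) and the values MASTER/150 on the master and BACKUP/100 on the backup; make_backup_conf is a hypothetical helper name.

```bash
#!/bin/bash
# Hypothetical helper: derive the backup director's keepalived.conf from the
# master's by rewriting "state MASTER" -> "state BACKUP" and
# "priority 150" -> "priority 100". All other lines are copied unchanged.
make_backup_conf() {
    # $1: master configuration file; the backup configuration goes to stdout.
    sed -E \
        -e 's/^([[:space:]]*state[[:space:]]+)MASTER([^[:alnum:]_]|$)/\1BACKUP\2/' \
        -e 's/^([[:space:]]*priority[[:space:]]+)150([^0-9]|$)/\1100\2/' \
        "$1"
}
```

For example, make_backup_conf /etc/keepalived/keepalived.conf > keepalived.conf.backup on the master, then copy the result to /etc/keepalived/keepalived.conf on the backup director. Anchoring the patterns to the start of the line keeps the rewrite from touching comments that mention MASTER or 150.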

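Note: the backup director is configured exactly like the master; the only differences are in keepalived.conf: state MASTER/BACKUP and priority 150/100.

4. Back-end server installation and configuration

4.1 LVS client configuration

In LVS-DR mode the back-end real servers (RealServer) need no extra software. They only need a few operations: binding the VIP to the loopback interface, adding a host route for it, and adjusting ARP behavior so that they accept packets addressed to the VIP without answering ARP requests for it (only the director should answer those). These steps are collected in a script, lvs_realserver.sh, explained below; see the attachment for the complete file.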

lvs_realserver.sh:

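```bash
#!/bin/bash
# description: Config realserver

LVS_VIP=10.10.10.10

# Source the Red Hat init-script function library
. /etc/rc.d/init.d/functions

case "$1" in
start)
    # Bind the VIP to the loopback alias lo:0 with a host (/32) netmask
    /sbin/ifconfig lo:0 $LVS_VIP netmask 255.255.255.255 broadcast $LVS_VIP
    /sbin/route add -host $LVS_VIP dev lo:0
    # arp_ignore=1: reply to ARP only for addresses configured on the incoming interface
    # arp_announce=2: always use the best local address as the ARP source
    # Together they stop this host from answering ARP for the VIP
    echo "1" >/proc/sys/net/ipv4/conf/lo/arp_ignore
    echo "2" >/proc/sys/net/ipv4/conf/lo/arp_announce
    echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore
    echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce
    sysctl -p >/dev/null 2>&1
    echo "RealServer Start OK"
    ;;
stop)
    # Remove the host route first, then take the loopback alias down
    /sbin/route del -host $LVS_VIP dev lo:0 >/dev/null 2>&1
    /sbin/ifconfig lo:0 down
    # Restore the default ARP behavior
    echo "0" >/proc/sys/net/ipv4/conf/lo/arp_ignore
    echo "0" >/proc/sys/net/ipv4/conf/lo/arp_announce
    echo "0" >/proc/sys/net/ipv4/conf/all/arp_ignore
    echo "0" >/proc/sys/net/ipv4/conf/all/arp_announce
    echo "RealServer Stopped"
    ;;
*)
    echo "Usage: $0 {start|stop}"
    exit 1
    ;;
esac

exit 0
```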

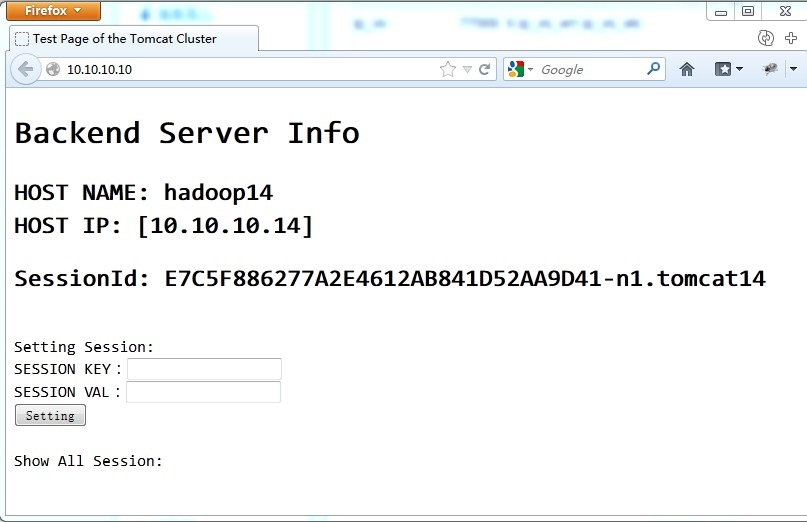
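After lvs_realserver.sh start has run, the ARP settings it writes can be checked. The function below is a hypothetical helper, not part of the original script; it takes the sysctl directory as an optional argument (defaulting to /proc/sys/net/ipv4/conf) so it can also be exercised against a scratch directory.

```bash
#!/bin/bash
# Hypothetical check: verify the LVS-DR ARP settings written by
# "lvs_realserver.sh start" (arp_ignore=1, arp_announce=2 on lo and all).
check_realserver_arp() {
    # $1: base sysctl directory; defaults to the live /proc tree.
    local base="${1:-/proc/sys/net/ipv4/conf}"
    local status=0 iface ignore announce
    for iface in lo all; do
        ignore=$(cat "$base/$iface/arp_ignore")
        announce=$(cat "$base/$iface/arp_announce")
        if [ "$ignore" != "1" ] || [ "$announce" != "2" ]; then
            echo "$iface: arp_ignore=$ignore arp_announce=$announce (expected 1 and 2)"
            status=1
        fi
    done
    # 0 when every value matches, 1 otherwise
    return $status
}
```

On a real server, ./lvs_realserver.sh start && check_realserver_arp should print nothing and succeed; any mismatch is reported per interface.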

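Download the script from the attachment, copy it to each back-end real server, make it executable (chmod +x lvs_realserver.sh), and run ./lvs_realserver.sh start (or ./lvs_realserver.sh stop).

4.2 MSM-Tomcat installation and configuration

The back-end servers use Memcache Session Manager + Tomcat to synchronize and replicate sessions across the cluster. For the detailed configuration, see: Implementing HA with APACHE(proxy_ajp_stickysession) + TOMCAT(msm_sticky).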
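Once the directors and real servers are running, one way to confirm the master/backup behavior of the directors is to check which of them currently holds the VIP. keepalived adds the VIP without an interface alias, so it shows up in ip addr output but not in plain ifconfig. The sketch below assumes eth0 as in keepalived.conf; holds_vip is a hypothetical helper name.

```bash
#!/bin/bash
# Hypothetical failover check: succeed if the "ip -4 addr show" output on
# stdin contains the given VIP.
holds_vip() {
    # $1: VIP. Matching "inet <VIP>/" as a fixed string avoids false hits
    # such as 10.10.10.100 when looking for 10.10.10.10.
    grep -qF "inet $1/"
}

# Example on a director:
#   if ip -4 addr show dev eth0 | holds_vip 10.10.10.10; then echo "this director holds the VIP"; fi
```

Running this on both directors should report the VIP on the master only. Stopping keepalived on the master (service keepalived stop) should move the VIP to the backup within a few seconds, and ipvsadm -L -n on the active director lists the virtual server 10.10.10.10:80 with its real servers.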
