|

This article deploys Ceph using Docker, on CentOS 7 x64.

First, install Docker. The CentOS 7 repositories already include Docker, so it can be installed directly with yum.

# yum install -y docker

Configure the root directory Docker uses at runtime (the -g option).

[root@localhost ~]# lvs

LV VG Attr LSize Pool Origin Data% Move Log Cpy%Sync Convert

lv01 vgdata01 -wi-ao---- 409.32g

[root@localhost ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/vgdata01-lv01 410G 474M 409G 1% /data01

[root@localhost ~]# mkdir /data01/docker

Configure the Docker service startup options. This differs from CentOS 6; the configuration is as follows:

[root@localhost ~]# vi /etc/sysconfig/docker

# /etc/sysconfig/docker

# Modify these options if you want to change the way the docker daemon runs

OPTIONS="--selinux-enabled=false -g /data01/docker"

Start the Docker server

[root@localhost ~]# systemctl start docker

[root@localhost ~]# ip link show docker0

6: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN mode DEFAULT

link/ether 56:84:7a:fe:97:99 brd ff:ff:ff:ff:ff:ff

[root@localhost ~]# ip addr show docker0

6: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN

link/ether 56:84:7a:fe:97:99 brd ff:ff:ff:ff:ff:ff

inet 172.17.42.1/16 scope global docker0

valid_lft forever preferred_lft forever

[root@localhost ~]# brctl show

bridge name bridge id STP enabled interfaces

docker0 8000.56847afe9799 no

# Download the CentOS base image

[root@localhost sysconfig]# docker pull centos

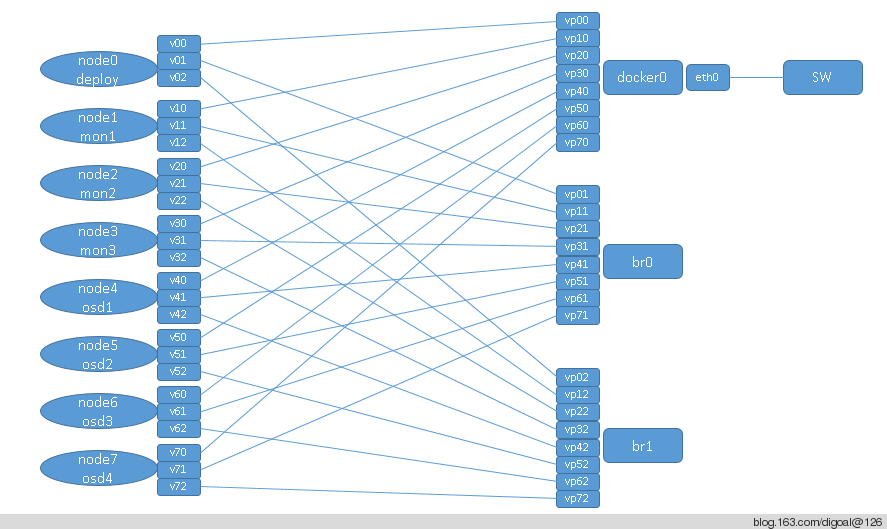

Planned environment:

The IP addresses are:

docker0 172.17.42.1/16 br0 172.18.42.1/16 br1 172.19.42.1/16

deploy v00 172.17.0.1/16 v01 172.18.0.1/16 v02 172.19.0.1/16

mon1 v10 172.17.0.2/16 v11 172.18.0.2/16 v12 172.19.0.2/16

mon2 v20 172.17.0.3/16 v21 172.18.0.3/16 v22 172.19.0.3/16

mon3 v30 172.17.0.4/16 v31 172.18.0.4/16 v32 172.19.0.4/16

osd1 v40 172.17.0.5/16 v41 172.18.0.5/16 v42 172.19.0.5/16

osd2 v50 172.17.0.6/16 v51 172.18.0.6/16 v52 172.19.0.6/16

osd3 v60 172.17.0.7/16 v61 172.18.0.7/16 v62 172.19.0.7/16

osd4 v70 172.17.0.8/16 v71 172.18.0.8/16 v72 172.19.0.8/16
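The addressing plan follows a regular pattern: interface vNM belongs to node N (0 = deploy, 1-3 = mon1-mon3, 4-7 = osd1-osd4) on network M (0 = docker0, 1 = br0, 2 = br1). A minimal sketch that derives the plan from those two indexes is shown here; the function names plan_addr and print_plan are hypothetical helpers, not part of any tool used in this article.

```shell
#!/bin/sh
# Hypothetical helper: print the planned interface name and address
# for a node index (0=deploy, 1-3=mon1-3, 4-7=osd1-4) and a network
# index (0=docker0, 1=br0, 2=br1).
plan_addr() {
    node=$1
    net=$2
    echo "v${node}${net} 172.$((17 + $net)).0.$((node + 1))/16"
}

# Print the whole plan, one container interface per line.
print_plan() {
    idx=0
    for name in deploy mon1 mon2 mon3 osd1 osd2 osd3 osd4; do
        for n in 0 1 2; do
            echo "$name $(plan_addr "$idx" "$n")"
        done
        idx=$((idx + 1))
    done
}

print_plan
```

Deriving the names and addresses from indexes keeps the later veth, netns, and bridge steps consistent with one another.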

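For production deployments, follow the network recommendations in the reference below: split OSD heartbeat traffic, replication traffic, and public/monitor traffic across 3 separate networks.

(At least 2 networks should be used.)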

http://docs.ceph.com/docs/master/rados/configuration/network-config-ref/

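The pipework tool is recommended for network configuration, because it simplifies the tedious manual process (the manual configuration in this article is fairly tedious).

Reference: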

http://blog.163.com/digoal@126/blog/static/163877040201411602632460/

Create several volume directories:

[root@150 ~]# cd /data01/

[root@localhost data01]# mkdir deploy

[root@localhost data01]# mkdir mon1

[root@localhost data01]# mkdir mon2

[root@localhost data01]# mkdir mon3

[root@localhost data01]# mkdir osd1

[root@localhost data01]# mkdir osd2

[root@localhost data01]# mkdir osd3

[root@localhost data01]# mkdir osd4
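The same directories can be created in a loop. This is a minimal sketch; make_volume_dirs is a hypothetical helper, and the base directory is a parameter so it can be tried somewhere harmless before being pointed at /data01.

```shell
#!/bin/sh
# Create one volume directory per node under the given base directory.
make_volume_dirs() {
    base=$1
    for name in deploy mon1 mon2 mon3 osd1 osd2 osd3 osd4; do
        mkdir -p "$base/$name" || return 1
    done
}

# Example: make_volume_dirs /data01
```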

To let the containers reach the external network, configure NAT rules on the host:

# iptables rules

# Enable NAT

iptables -t nat -A POSTROUTING -s 172.17.0.0/16 ! -d 172.17.0.0/16 -j MASQUERADE

# Accept incoming packets for existing connections

iptables -A FORWARD -o docker0 -m conntrack --ctstate RELATED,ESTABLISHED -j ACCEPT

# Accept all non-intercontainer outgoing packets

iptables -A FORWARD -i docker0 ! -o docker0 -j ACCEPT

# Accept traffic between containers on docker0

iptables -A FORWARD -i docker0 -o docker0 -j ACCEPT

Enable IP forwarding on the host:

[root@localhost ~]# vi /etc/sysctl.conf

net.ipv4.ip_forward=1

[root@localhost ~]# sysctl -p

net.ipv4.ip_forward = 1

Use the sshd image built earlier to start several containers.

http://blog.163.com/digoal@126/blog/static/1638770402014102711413675/

To assign custom IP addresses, the network is not configured by Docker here; it is configured with netns instead.

Start the containers (all nodes)

docker run -d --net=none --dns=202.101.172.35 --name=deploy --hostname=deploy --privileged=true -v /data01/deploy:/data01 digoal/sshd

docker run -d --net=none --dns=202.101.172.35 --name=mon1 --hostname=mon1 --privileged=true -v /data01/mon1:/data01 digoal/sshd

docker run -d --net=none --dns=202.101.172.35 --name=mon2 --hostname=mon2 --privileged=true -v /data01/mon2:/data01 digoal/sshd

docker run -d --net=none --dns=202.101.172.35 --name=mon3 --hostname=mon3 --privileged=true -v /data01/mon3:/data01 digoal/sshd

docker run -d --net=none --dns=202.101.172.35 --name=osd1 --hostname=osd1 --privileged=true -v /data01/osd1:/data01 digoal/sshd

docker run -d --net=none --dns=202.101.172.35 --name=osd2 --hostname=osd2 --privileged=true -v /data01/osd2:/data01 digoal/sshd

docker run -d --net=none --dns=202.101.172.35 --name=osd3 --hostname=osd3 --privileged=true -v /data01/osd3:/data01 digoal/sshd

docker run -d --net=none --dns=202.101.172.35 --name=osd4 --hostname=osd4 --privileged=true -v /data01/osd4:/data01 digoal/sshd
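Since the eight commands differ only in the node name, they can be generated in a loop. The sketch below only prints the commands (pipe the output to sh to run them); gen_docker_run is a hypothetical helper name, and the DNS server and image are the ones used above.

```shell
#!/bin/sh
# Print (not run) the docker run command for each node.
gen_docker_run() {
    for name in deploy mon1 mon2 mon3 osd1 osd2 osd3 osd4; do
        echo "docker run -d --net=none --dns=202.101.172.35 --name=$name --hostname=$name --privileged=true -v /data01/$name:/data01 digoal/sshd"
    done
}

gen_docker_run
```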

The status is as follows:

[root@localhost data01]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

02d922ce295b digoal/sshd:latest "/usr/sbin/sshd -D" 5 seconds ago Up 5 seconds 22/tcp osd2

f6df72b9780f digoal/sshd:latest "/usr/sbin/sshd -D" 5 seconds ago Up 4 seconds 22/tcp osd3

4c098166cc5d digoal/sshd:latest "/usr/sbin/sshd -D" 5 seconds ago Up 4 seconds 22/tcp osd4

fa24ec5115f6 digoal/sshd:latest "/usr/sbin/sshd -D" 6 seconds ago Up 5 seconds 22/tcp mon1

59c41fc9560e digoal/sshd:latest "/usr/sbin/sshd -D" 6 seconds ago Up 6 seconds 22/tcp deploy

9c9391eb3e1a digoal/sshd:latest "/usr/sbin/sshd -D" 6 seconds ago Up 5 seconds 22/tcp mon3

34a7ceefcfd3 digoal/sshd:latest "/usr/sbin/sshd -D" 6 seconds ago Up 5 seconds 22/tcp osd1

5de0fae0d8bd digoal/sshd:latest "/usr/sbin/sshd -D" 6 seconds ago Up 5 seconds 22/tcp mon2

/*-------------------------------------------------------------------

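Configuring the network with pipework is more convenient, but you have to add the routes yourself, so it is best to start the containers with --privileged=true.

A Linux bridge is recommended rather than OVS, because an interface added to OVS is not removed automatically after its peer disappears.

For example: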

./pipework.sh docker0 -i eth0 deploy 172.17.0.1/16

./pipework.sh br0 -i eth1 deploy 172.18.0.1/16

./pipework.sh br1 -i eth2 deploy 172.19.0.1/16

ssh 172.17.0.1

ip route add default via 172.17.42.1 dev eth0

For details on using pipework, see:

http://blog.163.com/digoal@126/blog/static/163877040201411602632460/

Get the PIDs of these containers; they are used to configure the network.

[root@localhost data01]# docker inspect -f '{{.State.Pid}}' deploy

17299

[root@localhost data01]# docker inspect -f '{{.State.Pid}}' mon1

17325

[root@localhost data01]# docker inspect -f '{{.State.Pid}}' mon2

17351

[root@localhost data01]# docker inspect -f '{{.State.Pid}}' mon3

17377

[root@localhost data01]# docker inspect -f '{{.State.Pid}}' osd1

17403

[root@localhost data01]# docker inspect -f '{{.State.Pid}}' osd2

17429

[root@localhost data01]# docker inspect -f '{{.State.Pid}}' osd3

17456

[root@localhost data01]# docker inspect -f '{{.State.Pid}}' osd4

17485

Add the veth peer interfaces:

ip link add v00 type veth peer name vp00

ip link add v10 type veth peer name vp10

ip link add v20 type veth peer name vp20

ip link add v30 type veth peer name vp30

ip link add v40 type veth peer name vp40

ip link add v50 type veth peer name vp50

ip link add v60 type veth peer name vp60

ip link add v70 type veth peer name vp70

ip link add v01 type veth peer name vp01

ip link add v11 type veth peer name vp11

ip link add v21 type veth peer name vp21

ip link add v31 type veth peer name vp31

ip link add v41 type veth peer name vp41

ip link add v51 type veth peer name vp51

ip link add v61 type veth peer name vp61

ip link add v71 type veth peer name vp71

ip link add v02 type veth peer name vp02

ip link add v12 type veth peer name vp12

ip link add v22 type veth peer name vp22

ip link add v32 type veth peer name vp32

ip link add v42 type veth peer name vp42

ip link add v52 type veth peer name vp52

ip link add v62 type veth peer name vp62

ip link add v72 type veth peer name vp72
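The 24 veth pairs follow the vNM / vpNM naming pattern (node N, network M), so they can be generated with two nested loops. This sketch only prints the commands; gen_veth_pairs is a hypothetical helper name.

```shell
#!/bin/sh
# Print the "ip link add" command for every node/network combination.
# Pipe the output to sh (as root) to actually create the pairs.
gen_veth_pairs() {
    for net in 0 1 2; do
        for node in 0 1 2 3 4 5 6 7; do
            echo "ip link add v${node}${net} type veth peer name vp${node}${net}"
        done
    done
}

gen_veth_pairs
```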

Configure the netns entries for the containers:

mkdir -p /var/run/netns

rm -rf /var/run/netns/17299

rm -rf /var/run/netns/17325

rm -rf /var/run/netns/17351

rm -rf /var/run/netns/17377

rm -rf /var/run/netns/17403

rm -rf /var/run/netns/17429

rm -rf /var/run/netns/17456

rm -rf /var/run/netns/17485

ln -s /proc/17299/ns/net /var/run/netns/17299

ln -s /proc/17325/ns/net /var/run/netns/17325

ln -s /proc/17351/ns/net /var/run/netns/17351

ln -s /proc/17377/ns/net /var/run/netns/17377

ln -s /proc/17403/ns/net /var/run/netns/17403

ln -s /proc/17429/ns/net /var/run/netns/17429

ln -s /proc/17456/ns/net /var/run/netns/17456

ln -s /proc/17485/ns/net /var/run/netns/17485
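The remove-then-link step can be wrapped in a small function. This is a sketch only: link_netns is a hypothetical helper, and the optional second argument (the target directory) is an assumption added so the function can be tried outside /var/run/netns, which is where ip netns looks for named namespaces.

```shell
#!/bin/sh
# Expose a process's network namespace under a netns directory so
# that "ip netns exec <pid> ..." can find it.
link_netns() {
    pid=$1
    dir=${2:-/var/run/netns}
    mkdir -p "$dir" || return 1
    rm -f "$dir/$pid"                      # drop any stale entry first
    ln -s "/proc/$pid/ns/net" "$dir/$pid"
}

# Example (as root, with the containers running):
#   for name in deploy mon1 mon2 mon3 osd1 osd2 osd3 osd4; do
#       link_netns "$(docker inspect -f '{{.State.Pid}}' "$name")"
#   done
```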

Assign one end of each peer link to its container (8 containers, 3 interfaces each).

ip link set v00 netns 17299

ip link set v01 netns 17299

ip link set v02 netns 17299

ip link set v10 netns 17325

ip link set v11 netns 17325

ip link set v12 netns 17325

ip link set v20 netns 17351

ip link set v21 netns 17351

ip link set v22 netns 17351

ip link set v30 netns 17377

ip link set v31 netns 17377

ip link set v32 netns 17377

ip link set v40 netns 17403

ip link set v41 netns 17403

ip link set v42 netns 17403

ip link set v50 netns 17429

ip link set v51 netns 17429

ip link set v52 netns 17429

ip link set v60 netns 17456

ip link set v61 netns 17456

ip link set v62 netns 17456

ip link set v70 netns 17485

ip link set v71 netns 17485

ip link set v72 netns 17485
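Given the container PIDs in node order (deploy, mon1-mon3, osd1-osd4), the assignment commands can be generated as follows. This is a sketch that only prints commands; gen_netns_move is a hypothetical helper name, and the PIDs in the example are the ones from this article's run and will differ on another host.

```shell
#!/bin/sh
# Print the commands that move interfaces vN0, vN1 and vN2 into the
# namespace of the N-th PID given on the command line.
gen_netns_move() {
    node=0
    for pid in "$@"; do
        for net in 0 1 2; do
            echo "ip link set v${node}${net} netns $pid"
        done
        node=$((node + 1))
    done
}

gen_netns_move 17299 17325 17351 17377 17403 17429 17456 17485
```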

Use ip netns to configure each container's IP addresses and add a default route that points to the Docker server bridge:

ip netns exec 17299 ip link set lo up

ip netns exec 17299 ip link set v00 up

ip netns exec 17299 ip link set v01 up

ip netns exec 17299 ip link set v02 up

ip netns exec 17299 ip addr add 172.17.0.1/16 dev v00

ip netns exec 17299 ip addr add 172.18.0.1/16 dev v01

ip netns exec 17299 ip addr add 172.19.0.1/16 dev v02

ip netns exec 17299 ip route add default via 172.17.42.1 dev v00

ip netns exec 17325 ip link set lo up

ip netns exec 17325 ip link set v10 up

ip netns exec 17325 ip link set v11 up

ip netns exec 17325 ip link set v12 up

ip netns exec 17325 ip addr add 172.17.0.2/16 dev v10

ip netns exec 17325 ip addr add 172.18.0.2/16 dev v11

ip netns exec 17325 ip addr add 172.19.0.2/16 dev v12

ip netns exec 17325 ip route add default via 172.17.42.1 dev v10

ip netns exec 17351 ip link set lo up

ip netns exec 17351 ip link set v20 up

ip netns exec 17351 ip link set v21 up

ip netns exec 17351 ip link set v22 up

ip netns exec 17351 ip addr add 172.17.0.3/16 dev v20

ip netns exec 17351 ip addr add 172.18.0.3/16 dev v21

ip netns exec 17351 ip addr add 172.19.0.3/16 dev v22

ip netns exec 17351 ip route add default via 172.17.42.1 dev v20

ip netns exec 17377 ip link set lo up

ip netns exec 17377 ip link set v30 up

ip netns exec 17377 ip link set v31 up

ip netns exec 17377 ip link set v32 up

ip netns exec 17377 ip addr add 172.17.0.4/16 dev v30

ip netns exec 17377 ip addr add 172.18.0.4/16 dev v31

ip netns exec 17377 ip addr add 172.19.0.4/16 dev v32

ip netns exec 17377 ip route add default via 172.17.42.1 dev v30

ip netns exec 17403 ip link set lo up

ip netns exec 17403 ip link set v40 up

ip netns exec 17403 ip link set v41 up

ip netns exec 17403 ip link set v42 up

ip netns exec 17403 ip addr add 172.17.0.5/16 dev v40

ip netns exec 17403 ip addr add 172.18.0.5/16 dev v41

ip netns exec 17403 ip addr add 172.19.0.5/16 dev v42

ip netns exec 17403 ip route add default via 172.17.42.1 dev v40

ip netns exec 17429 ip link set lo up

ip netns exec 17429 ip link set v50 up

ip netns exec 17429 ip link set v51 up

ip netns exec 17429 ip link set v52 up

ip netns exec 17429 ip addr add 172.17.0.6/16 dev v50

ip netns exec 17429 ip addr add 172.18.0.6/16 dev v51

ip netns exec 17429 ip addr add 172.19.0.6/16 dev v52

ip netns exec 17429 ip route add default via 172.17.42.1 dev v50

ip netns exec 17456 ip link set lo up

ip netns exec 17456 ip link set v60 up

ip netns exec 17456 ip link set v61 up

ip netns exec 17456 ip link set v62 up

ip netns exec 17456 ip addr add 172.17.0.7/16 dev v60

ip netns exec 17456 ip addr add 172.18.0.7/16 dev v61

ip netns exec 17456 ip addr add 172.19.0.7/16 dev v62

ip netns exec 17456 ip route add default via 172.17.42.1 dev v60

ip netns exec 17485 ip link set lo up

ip netns exec 17485 ip link set v70 up

ip netns exec 17485 ip link set v71 up

ip netns exec 17485 ip link set v72 up

ip netns exec 17485 ip addr add 172.17.0.8/16 dev v70

ip netns exec 17485 ip addr add 172.18.0.8/16 dev v71

ip netns exec 17485 ip addr add 172.19.0.8/16 dev v72

ip netns exec 17485 ip route add default via 172.17.42.1 dev v70
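Each container receives the same eight commands, varying only in PID and node index, so one function can produce the block for any container. This is a sketch under the addressing plan above; gen_netns_config is a hypothetical helper name, and it prints the commands rather than executing them.

```shell
#!/bin/sh
# Print the in-namespace configuration for one container.
#   $1 = container PID (also the netns name)
#   $2 = node index (0=deploy, 1-3=mon1-3, 4-7=osd1-4)
gen_netns_config() {
    pid=$1
    node=$2
    echo "ip netns exec $pid ip link set lo up"
    for net in 0 1 2; do
        echo "ip netns exec $pid ip link set v${node}${net} up"
    done
    for net in 0 1 2; do
        echo "ip netns exec $pid ip addr add 172.$((17 + $net)).0.$((node + 1))/16 dev v${node}${net}"
    done
    # The default route goes through docker0 on the first network.
    echo "ip netns exec $pid ip route add default via 172.17.42.1 dev v${node}0"
}

gen_netns_config 17299 0
```

Only the docker0 network carries the default route; br0 and br1 are reachable through their directly connected /16 subnets.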

Docker server bridge address

[root@localhost ~]# ip addr show docker0

6: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP

link/ether 56:84:7a:fe:97:99 brd ff:ff:ff:ff:ff:ff

inet 172.17.42.1/16 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::5484:7aff:fefe:9799/64 scope link

valid_lft forever preferred_lft forever

Add 2 bridges:

[root@localhost netns]# brctl addbr br0

[root@localhost netns]# brctl addbr br1

[root@localhost netns]# ip link set br0 up

[root@localhost netns]# ip link set br1 up

[root@localhost netns]# ip addr add 172.18.42.1/16 dev br0

[root@localhost netns]# ip addr add 172.19.42.1/16 dev br1

Add the other end of each peer link to the corresponding bridge on the Docker server (docker0, br0, or br1), and bring the interfaces up.

brctl addif docker0 vp00

brctl addif docker0 vp10

brctl addif docker0 vp20

brctl addif docker0 vp30

brctl addif docker0 vp40

brctl addif docker0 vp50

brctl addif docker0 vp60

brctl addif docker0 vp70

brctl addif br0 vp01

brctl addif br0 vp11

brctl addif br0 vp21

brctl addif br0 vp31

brctl addif br0 vp41

brctl addif br0 vp51

brctl addif br0 vp61

brctl addif br0 vp71

brctl addif br1 vp02

brctl addif br1 vp12

brctl addif br1 vp22

brctl addif br1 vp32

brctl addif br1 vp42

brctl addif br1 vp52

brctl addif br1 vp62

brctl addif br1 vp72

ip link set vp00 up

ip link set vp10 up

ip link set vp20 up

ip link set vp30 up

ip link set vp40 up

ip link set vp50 up

ip link set vp60 up

ip link set vp70 up

ip link set vp01 up

ip link set vp11 up

ip link set vp21 up

ip link set vp31 up

ip link set vp41 up

ip link set vp51 up

ip link set vp61 up

ip link set vp71 up

ip link set vp02 up

ip link set vp12 up

ip link set vp22 up

ip link set vp32 up

ip link set vp42 up

ip link set vp52 up

ip link set vp62 up

ip link set vp72 up
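The host-side half of the setup can be generated the same way: network index 0 maps to docker0, 1 to br0, and 2 to br1. The sketch below prints the commands only; gen_bridge_attach is a hypothetical helper name.

```shell
#!/bin/sh
# Print the commands that attach each host-side peer vpNM to its
# bridge and bring it up.
gen_bridge_attach() {
    net=0
    for bridge in docker0 br0 br1; do
        for node in 0 1 2 3 4 5 6 7; do
            echo "brctl addif $bridge vp${node}${net}"
            echo "ip link set vp${node}${net} up"
        done
        net=$((net + 1))
    done
}

gen_bridge_attach
```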

[root@localhost netns]# brctl show

bridge name bridge id STP enabled interfaces

br0 8000.0a26f690e66f no vp01 vp11 vp21 vp31 vp41 vp51 vp61 vp71

br1 8000.42bddaccbbc9 no vp02 vp12 vp22 vp32 vp42 vp52 vp62 vp72

docker0 8000.56847afe9799 no vp00 vp10 vp20 vp30 vp40 vp50 vp60 vp70

--------------------------------------------------------------------------------------------- */

Test network connectivity:

Connect to the deploy node:

[root@localhost ~]# ssh 172.17.0.1

The authenticity of host '172.17.0.1 (172.17.0.1)' can't be established.

ECDSA key fingerprint is db:5c:6b:2a:bc:9e:3e:31:24:1b:c0:8d:5f:96:f2:e0.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '172.17.0.1' (ECDSA) to the list of known hosts.

root@172.17.0.1's password: (the password is Digoal_sshd_1999)

[root@65a05647c55d ~]# ping www.baidu.com

PING www.a.shifen.com (115.239.211.110) 56(84) bytes of data.

64 bytes from 115.239.211.110: icmp_seq=1 ttl=55 time=2.67 ms

[root@65a05647c55d ~]# ip link

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

64: v1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP mode DEFAULT qlen 1000

link/ether 4a:e5:cd:99:f5:c8 brd ff:ff:ff:ff:ff:ff

[root@65a05647c55d ~]# ip addr show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

64: v1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 4a:e5:cd:99:f5:c8 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 scope global v1

valid_lft forever preferred_lft forever

inet6 fe80::48e5:cdff:fe99:f5c8/64 scope link

valid_lft forever preferred_lft forever

Start deploying Ceph:

First, check the requirements for the Ceph installation environment:

http://docs.ceph.com/docs/master/start/os-recommendations/

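1. Kernel version: the CentOS 7 kernel, 3.10.x, meets the requirement.

2. File system: CentOS 7 uses XFS by default, which meets the requirement.

(Configure the yum repositories in all of the Docker containers.)

To install with yum, first configure the yum repositories, as described below: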

REQUIREMENTS: All Ceph deployments require Ceph packages (except for development). You should also add keys and recommended packages.

If you intend to download packages manually, see Section Download Packages.

Steps to configure the yum repositories:

For details, see:

http://ceph.com/docs/master/install/get-packages/

Note: on RHEL 6 or CentOS 6, use el6 in place of el7 in the yum repository configuration files.

1. Add the key

[root@150 ~]# rpm --import 'https://ceph.com/git/?p=ceph.git;a=blob_plain;f=keys/release.asc'

2. Add the ceph extras repository

[root@150 ~]# cd /etc/yum.repos.d/

[root@150 yum.repos.d]# uname -r

3.10.0-123.el7.x86_64

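Use el7.

(At present ceph extras has no el repository, so the only option is the Fedora release with the matching kernel version; for CentOS 7 that is fedora19. If an el repository becomes available later, el7 can be used in place of fedora19.)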

[root@150 yum.repos.d]# vi ceph-extras.repo

[ceph-extras]

name=Ceph Extras Packages

baseurl=http://ceph.com/packages/ceph-extras/rpm/fedora19/$basearch

enabled=1

priority=2

gpgcheck=1

type=rpm-md

gpgkey=https://ceph.com/git/?p=ceph.git;a=blob_plain;f=keys/release.asc

[ceph-extras-noarch]

name=Ceph Extras noarch

baseurl=http://ceph.com/packages/ceph-extras/rpm/fedora19/noarch

enabled=1

priority=2

gpgcheck=1

type=rpm-md

gpgkey=https://ceph.com/git/?p=ceph.git;a=blob_plain;f=keys/release.asc

[ceph-extras-source]

name=Ceph Extras Sources

baseurl=http://ceph.com/packages/ceph-extras/rpm/fedora19/SRPMS

enabled=1

priority=2

gpgcheck=1

type=rpm-md

gpgkey=https://ceph.com/git/?p=ceph.git;a=blob_plain;f=keys/release.asc

3. Add the ceph repository, using the latest stable release, giant. If other releases come out later, substitute that release name for giant.

[root@db-172-16-3-221 yum.repos.d]# vi ceph.repo

[ceph]

name=Ceph packages for $basearch

baseurl=http://ceph.com/rpm-giant/el7/$basearch

enabled=1

priority=2

gpgcheck=1

type=rpm-md

gpgkey=https://ceph.com/git/?p=ceph.git;a=blob_plain;f=keys/release.asc

[ceph-noarch]

name=Ceph noarch packages

baseurl=http://ceph.com/rpm-giant/el7/noarch

enabled=1

priority=2

gpgcheck=1

type=rpm-md

gpgkey=https://ceph.com/git/?p=ceph.git;a=blob_plain;f=keys/release.asc

[ceph-source]

name=Ceph source packages

baseurl=http://ceph.com/rpm-giant/el7/SRPMS

enabled=0

priority=2

gpgcheck=1

type=rpm-md

gpgkey=https://ceph.com/git/?p=ceph.git;a=blob_plain;f=keys/release.asc
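Because the release name (giant) and the distribution tag (el7, or el6 on RHEL 6 / CentOS 6) are the only parts of ceph.repo that change, the file can be generated from those two values. This is a minimal sketch; repo_section and gen_ceph_repo are hypothetical helper names, and the output mirrors the three sections shown above.

```shell
#!/bin/sh
# Print one repository section.
#   $1 = section name, $2 = description,
#   $3 = last URL path component, $4 = enabled flag
repo_section() {
    printf '[%s]\nname=%s\nbaseurl=http://ceph.com/rpm-%s/%s/%s\nenabled=%s\npriority=2\ngpgcheck=1\ntype=rpm-md\ngpgkey=%s\n\n' \
        "$1" "$2" "$RELEASE" "$DIST" "$3" "$4" "$KEY"
}

# Print a complete ceph.repo for a release name and distribution tag.
gen_ceph_repo() {
    RELEASE=$1
    DIST=$2
    KEY='https://ceph.com/git/?p=ceph.git;a=blob_plain;f=keys/release.asc'
    # $basearch is left literal on purpose; yum expands it.
    repo_section ceph 'Ceph packages for $basearch' '$basearch' 1
    repo_section ceph-noarch 'Ceph noarch packages' noarch 1
    repo_section ceph-source 'Ceph source packages' SRPMS 0
}

gen_ceph_repo giant el7
```

Redirecting the output to /etc/yum.repos.d/ceph.repo in each container would give the same file as the hand-written one.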

The existing Ceph releases are as follows; the latest is Giant, whose name begins with G.

The major releases of Ceph include:

4. If you do not use the object storage service, the following repositories do not need to be added.

[root@db-172-16-3-221 yum.repos.d]# vi ceph-apache.repo

[apache2-ceph-noarch]

name=Apache noarch packages for Ceph

baseurl=http://gitbuilder.ceph.com/apache2-rpm-fedora19-x86_64-basic/ref/master

enabled=1

priority=2

gpgcheck=1

type=rpm-md

gpgkey=https://ceph.com/git/?p=ceph.git;a=blob_plain;f=keys/autobuild.asc

[apache2-ceph-source]

name=Apache source packages for Ceph

baseurl=http://gitbuilder.ceph.com/apache2-rpm-fedora19-x86_64-basic/ref/master

enabled=0

priority=2

gpgcheck=1

type=rpm-md

gpgkey=https://ceph.com/git/?p=ceph.git;a=blob_plain;f=keys/autobuild.asc

[fastcgi-ceph-basearch]

name=FastCGI basearch packages for Ceph

baseurl=http://gitbuilder.ceph.com/mod_fastcgi-rpm-fedora19-x86_64-basic/ref/master

enabled=1

priority=2

gpgcheck=1

type=rpm-md

gpgkey=https://ceph.com/git/?p=ceph.git;a=blob_plain;f=keys/autobuild.asc

[fastcgi-ceph-noarch]

name=FastCGI noarch packages for Ceph

baseurl=http://gitbuilder.ceph.com/mod_fastcgi-rpm-fedora19-x86_64-basic/ref/master

enabled=1

priority=2

gpgcheck=1

type=rpm-md

gpgkey=https://ceph.com/git/?p=ceph.git;a=blob_plain;f=keys/autobuild.asc

[fastcgi-ceph-source]

name=FastCGI source packages for Ceph

baseurl=http://gitbuilder.ceph.com/mod_fastcgi-rpm-fedora19-x86_64-basic/ref/master

enabled=0

priority=2

gpgcheck=1

type=rpm-md

gpgkey=https://ceph.com/git/?p=ceph.git;a=blob_plain;f=keys/autobuild.asc

Check that the yum configuration is correct

[root@150 yum.repos.d]# yum search all ceph

========================================================== Matched: ceph ===========================================================

ceph.x86_64 : User space components of the Ceph file system

ceph-common.x86_64 : Ceph Common

ceph-debuginfo.x86_64 : Debug information for package ceph

ceph-deploy.noarch : Admin and deploy tool for Ceph

ceph-devel.x86_64 : Ceph headers

ceph-fuse.x86_64 : Ceph fuse-based client

ceph-libs-compat.x86_64 : Meta package to include ceph libraries.

ceph-release.noarch : Ceph repository configuration

ceph-test.x86_64 : Ceph benchmarks and test tools

cephfs-java.x86_64 : Java libraries for the Ceph File System.

libcephfs1.x86_64 : Ceph distributed file system client library

libcephfs_jni1.x86_64 : Java Native Interface library for CephFS Java bindings.

python-ceph.x86_64 : Python libraries for the Ceph distributed filesystem

rbd-fuse.x86_64 : Ceph fuse-based client

ceph-radosgw.x86_64 : Rados REST gateway

librados2.x86_64 : RADOS distributed object store client library

librbd1.x86_64 : RADOS block device client library

libradosstriper1.x86_64 : RADOS striping interface

radosgw-agent.noarch : Synchronize users and data between radosgw clusters

rest-bench.x86_64 : RESTful benchmark

Finally, configure the kernel parameters on all nodes:

Recommended parameters:

/etc/sysctl.conf

kernel.pid_max=4194303

kernel.shmmni = 4096

kernel.sem = 50100 64128000 50100 1280

fs.file-max = 7672460

net.ipv4.ip_local_port_range = 9000 65000

net.core.rmem_default = 1048576

net.core.rmem_max = 4194304

net.core.wmem_default = 262144

net.core.wmem_max = 1048576

net.ipv4.tcp_tw_recycle = 1

net.ipv4.tcp_max_syn_backlog = 4096

net.core.netdev_max_backlog = 10000

/etc/security/limits.conf

* soft nofile 131072

* hard nofile 131072

* soft nproc 131072

* hard nproc 131072

* soft core unlimited

* hard core unlimited

* soft memlock 50000000

* hard memlock 50000000

/etc/security/limits.d/90-nproc.conf

* soft nproc 131072

* hard nproc 131072

1. http://docs.ceph.com/docs/master/start/quick-start-preflight/

2. http://docs.ceph.com/docs/master/start/quick-ceph-deploy/

3. http://ceph.com/resources/downloads/

4. http://ceph.com/docs/master/release-notes/

5. http://ceph.com/docs/master/start/

6. http://ceph.com/docs/master/install/get-packages/

7. http://ceph.com/docs/master/glossary/

|