RAID in CentOS: A Detailed Guide

Date: 2014-02-21 13:24  Source: 51cto blog  Author: 陈明乾

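RAID can be implemented in three ways:

- External disk array enclosure: most often used with large servers and supports hot swap, but such products are expensive.
- Internal RAID controller card: inexpensive, but installing it takes more expertise, so it suits technical staff.
- Software emulation (software RAID): it slows the machine down, so it is not suited to servers with heavy data traffic. Linux md, managed with mdadm in the rest of this article, is this type.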

RAID Level

性能提升

冗余能力

空间利用率

磁盘数量(块)

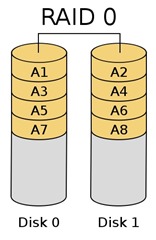

RAID 0

读、写提升

无

100%

至少2

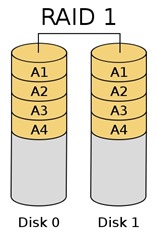

RAID 1

读性能提升,写性能下降

有

50%

至少2

RAID 5

读、写提升

有

(n-1)/n%

至少3

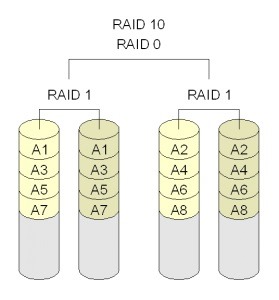

RAID 1+0

读、写提升

有

50%

至少4

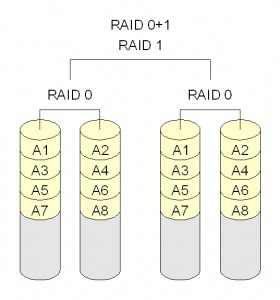

RAID 0+1

读、写提升

有

50%

至少4

RAID 5+0

读、写提升

有

(n-2)/n%

至少6
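The space-utilization column can be turned into absolute capacities with a little arithmetic. The function below is a hypothetical helper written for illustration; it is not part of mdadm. It makes three assumptions:

- all n disks are the same size, given in GB;
- RAID 1 capacity equals one disk;
- RAID 5+0 uses exactly two RAID 5 groups, which is what the (n-2)/n figure implies.

```shell
#!/usr/bin/env bash
# Hypothetical helper: usable capacity of an array built from N identical disks.
# Usage: raid_usable_gb LEVEL N SIZE_GB   (LEVEL: 0, 1, 5, 10, 01, 50)
# Assumes equal disk sizes; RAID 5+0 is assumed to consist of two RAID 5 groups.
raid_usable_gb() {
    local level=$1 n=$2 size=$3 min
    # Minimum disk counts taken from the table above.
    case $level in
        0|1)   min=2 ;;
        5)     min=3 ;;
        10|01) min=4 ;;
        50)    min=6 ;;
        *) echo "unsupported level: $level" >&2; return 1 ;;
    esac
    if (( n < min )); then
        echo "RAID $level needs at least $min disks" >&2
        return 1
    fi
    case $level in
        0)     echo $(( n * size )) ;;        # striping only: 100%
        1)     echo "$size" ;;                # every disk holds the same data
        5)     echo $(( (n - 1) * size )) ;;  # one disk's worth of parity
        10|01) echo $(( n / 2 * size )) ;;    # half the disks are mirror copies
        50)    echo $(( (n - 2) * size )) ;;  # one parity disk per RAID 5 group
    esac
}
```

For example, `raid_usable_gb 5 3 20` prints 40. That is roughly the 40G df reports later for the three-disk RAID 5 in this article.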


[root@localhost ~]# uname -r

2.6.18-194.el5

[root@localhost ~]# lsb_release -a

LSB Version: :core-3.1-amd64:core-3.1-ia32:core-3.1-noarch:graphics-3.1-amd64:graphics-3.1-ia32:graphics-3.1-noarch

Distributor ID: CentOS

Description: CentOS release 5.5 (Final)

Release: 5.5

Codename: Final

[root@localhost ~]# rpm -qa | grep mdadm

mdadm-2.6.9-3.el5


mdadm [mode] <raid-device> [options] <component-devices>

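- Assemble: assemble the components of a previously created array into an active array; the array may have been in use before or moved over from another host
- Build: build an array without superblocks
- Create: create a new array with a superblock on each device
- Follow or Monitor: monitor the state of RAID arrays; generally only meaningful for levels with redundancy such as RAID 1/4/5/6/10
- Grow (grow or shrink): change the capacity of an array or the number of devices in it, or otherwise reshape it
- Manage: manage an array (for example, add spares and remove faulty disks)
- Incremental Assembly: add a single device to the appropriate array
- Misc: operate on individual devices of an array (for example, erase superblocks or stop an array)
- Auto-detect: does not act on a specific device or array; instead it asks the Linux kernel to start any auto-detected arrays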


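-A, --assemble: assemble and start a previously defined array

-B, --build: build a legacy array without superblocks

-C, --create: create a new array

-F, --follow, --monitor: select Monitor mode

-G, --grow: change the size or shape of an active array

-I, --incremental: add a single device to the appropriate array, possibly starting the array

--auto-detect: ask the kernel to start any auto-detected arrays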


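-C --create: create a new array

Options specific to create mode:

-l: RAID level

-n #: number of active devices

-a {yes|no}: whether to create the device file automatically

-c: chunk size, a power of 2 (in KiB); default 64K

-x #: number of spare disks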


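-a --add: add the listed devices to a running array. If the array is degraded (a disk has failed), the added device becomes a spare and data reconstruction onto it begins.

-f --fail: mark the listed devices as faulty so that they can then be removed (also useful for testing failure recovery)

-r --remove: remove the listed devices from the array; they must not be active (that is, they must be spares or faulty disks)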


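-F --follow, --monitor: select Monitor mode

-m --mail: set an email address to receive alerts; the address can also be written to the config file, where it takes effect when the array is started

-p --program, --alert: run the specified program when an event is detected

-y --syslog: log all events to syslog

-t --test: generate a test alert for every array found at startup and pass it to the mail address or alert program (useful for checking that alerts are actually delivered)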
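As noted for -m, the alert settings can live in the config file instead of on the command line. Below is a minimal sketch based on the MAILADDR and PROGRAM keywords of mdadm.conf. It assumes:

- the file is /etc/mdadm.conf;
- the ARRAY lines are already in the file.

The function name, address and program path are placeholders.

```shell
#!/usr/bin/env bash
# Hypothetical helper: record alert settings in mdadm.conf so that
# "mdadm --monitor --scan" can use them without -m/-p on the command line.
# Usage: add_monitor_settings [CONF] [MAIL] [PROGRAM]
add_monitor_settings() {
    local conf=${1:-/etc/mdadm.conf}
    local mail=${2:-root@localhost}
    local prog=${3:-/root/md.sh}
    # Append each keyword only if the file does not define it yet.
    if ! grep -q '^MAILADDR' "$conf" 2>/dev/null; then
        echo "MAILADDR $mail" >> "$conf"
    fi
    if ! grep -q '^PROGRAM' "$conf" 2>/dev/null; then
        echo "PROGRAM $prog" >> "$conf"
    fi
}
# Example (as root):
#   add_monitor_settings /etc/mdadm.conf root@localhost /root/md.sh
#   mdadm --monitor --scan --daemonise
```

Running it twice leaves a single MAILADDR line and a single PROGRAM line.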


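-G --grow: change the size or shape of an active array

-n --raid-devices=: number of active devices in the array, not counting spares; this number can only be changed with --grow

-x --spare-devices=: number of spare devices in the initial array

-c --chunk=: specify the chunk size in kibibytes; default 64. The chunk size is an important parameter: it determines how much data is written to each disk of the array in one go.

(A chunk can be thought of as the size of each data segment RAID stores on one disk, typically a value such as 32, 64 or 128 KiB. Choosing it well matters:

- If the chunk is too large, a single disk's chunk may be enough to satisfy most I/O requests. Reads and writes are then confined to one disk, and RAID's parallelism goes unused.
- If it is too small, even a small I/O request can turn into many reads and writes. This hurts concurrency, consumes too much controller bus bandwidth, and lowers overall array performance.

So when creating a striped array, choose the chunk size to match the actual workload.)

-z --size=: amount of space (in KiB) to use from each device when building RAID 1/4/5/6; it must be a multiple of the chunk size, and at least 128 KB must be left at the end of each device for the RAID superblock.

--rounding=: specify the rounding factor for a linear array (== chunk size)

-l --level=: set the RAID level

With --create, valid values are: linear, raid0, 0, stripe, raid1, 1, mirror, raid4, 4, raid5, 5, raid6, 6, raid10, 10, multipath, mp.

With --build, valid values are: linear, raid0, 0, stripe.

-p --layout=: set the parity layout for RAID 5 and the layout for RAID 10, and control the failure modes of the faulty level. For RAID 5 the choices are left-asymmetric, left-symmetric, right-asymmetric, right-symmetric, la, ra, ls, rs; the default is left-symmetric.

--parity: same as --layout=

--assume-clean: currently used only with the --build option

-R --run: if some component of the array appears to belong to another array or to contain a filesystem, mdadm normally asks for confirmation; this option skips the confirmation.

-f --force: normally mdadm refuses to create an array with only one device. When creating a RAID 5, it normally treats one device as a missing drive and then recovers onto it. This option overrides both behaviors.

-N --name=: set the name of the array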
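The chunk-size trade-off above can be made concrete with a simplified RAID 0 model, in which chunk k of the array lives on member disk k mod N. This is an illustrative sketch, not md's exact code path: it ignores unequal member sizes and the parity rotation of RAID 5/6. `disks_touched` is a hypothetical name, and all sizes are in KiB.

```shell
#!/usr/bin/env bash
# Simplified RAID 0 model: the array address space is cut into chunks of
# CHUNK_KB KiB, and chunk k is stored on member disk (k mod NDISKS).
# Prints the member-disk indices touched by a request of LEN_KB KiB
# starting at OFFSET_KB.
# Usage: disks_touched OFFSET_KB LEN_KB CHUNK_KB NDISKS
disks_touched() {
    local offset_kb=$1 len_kb=$2 chunk_kb=$3 ndisks=$4
    local first=$(( offset_kb / chunk_kb ))
    local last=$(( (offset_kb + len_kb - 1) / chunk_kb ))
    local k d seen=""
    for (( k = first; k <= last; k++ )); do
        d=$(( k % ndisks ))
        case " $seen " in
            *" $d "*) ;;               # disk already counted
            *) seen="$seen $d" ;;
        esac
    done
    echo $seen                         # unquoted on purpose: trims the leading space
}
```

With three disks, a 16 KiB request at offset 0 behaves differently depending on the chunk size:

- With a 64 KiB chunk it stays on one disk: `disks_touched 0 16 64 3` prints `0`.
- With a 4 KiB chunk it is spread over all three: `disks_touched 0 16 4 3` prints `0 1 2`.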

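-A, --assemble: assemble and start a previously defined array

-Q, --query: examine a device and report whether it is an md device or a component of an md array

-D, --detail: print detailed information about one or more md devices

-E, --examine: print the contents of the md superblock on a device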

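Show detailed information about an array (-D, --detail):

mdadm -D /dev/md#

Stop an array (-S, --stop):

mdadm -S /dev/md#

Start (assemble) an array (-A, --assemble; mdadm has no --start option). List the member devices after the md device, or add -s to read them from /etc/mdadm.conf:

mdadm -A /dev/md#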


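-c, --config=: specify the config file; default /etc/mdadm.conf

-s, --scan: scan the config file or /proc/mdstat for missing information. Default config file: /etc/mdadm.conf

-h, --help: help; when placed after one of the options above, shows help for that option

-v, --verbose: show more detail; generally used with --detail or --examine to print intermediate-level information

-b, --brief: less detail; used with --detail and --examine

--help-options: show more detailed help

-V, --version: version information

-q, --quiet: quiet mode; mdadm suppresses purely informational messages unless they are important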

Create a RAID 0 device:

mdadm --create /dev/md0 --level=0 --chunk=32 --raid-devices=3 /dev/sd[b-d]

Create a RAID 1 device:

mdadm -C /dev/md0 -l1 -c128 -n2 -x1 /dev/sd[b-d]

Create a RAID 5 device:

mdadm -C /dev/md0 -l5 -n5 /dev/sd[c-g] -x1 /dev/sdb

Create a RAID 6 device:

mdadm -C /dev/md0 -l6 -n5 /dev/sd[c-g] -x2 /dev/sdb /dev/sdh

Create a RAID 10 device:

mdadm -C /dev/md0 -l10 -n6 /dev/sd[b-g] -x1 /dev/sdh

Create a RAID 1+0 device (nested, two-layer setup: three RAID 1 mirrors striped together by a RAID 0):

mdadm -C /dev/md0 -l1 -n2 /dev/sdb /dev/sdc

mdadm -C /dev/md1 -l1 -n2 /dev/sdd /dev/sde

mdadm -C /dev/md2 -l1 -n2 /dev/sdf /dev/sdg

mdadm -C /dev/md3 -l0 -n3 /dev/md0 /dev/md1 /dev/md2
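In these commands, -n counts only the active members and -x the spares listed after them. The hypothetical helper below only prints an mdadm -C command; it never runs it. Before printing, it checks the active-member count against the minimums for each level: 2 for RAID 0/1, 3 for RAID 5 and 4 for RAID 6. It assumes the last SPARES devices on its command line are meant as spares, and it handles only those four levels.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print (never run) an "mdadm -C" command after checking
# that enough active members were supplied for the chosen level.
# Usage: mk_create_cmd MD_DEVICE LEVEL SPARES DEVICE...
#   The last SPARES devices are treated as spares, the rest as active members.
mk_create_cmd() {
    local md=$1 level=$2 spares=$3
    shift 3
    local active=$(( $# - spares )) min
    case $level in
        0|1) min=2 ;;
        5)   min=3 ;;
        6)   min=4 ;;
        *) echo "level $level is not handled by this sketch" >&2; return 1 ;;
    esac
    if (( active < min )); then
        echo "RAID $level needs at least $min active devices, got $active" >&2
        return 1
    fi
    local opts="-a yes -l $level -n $active"
    if (( spares > 0 )); then
        opts="$opts -x $spares"
    fi
    echo "mdadm -C $md $opts $*"
}
```

Two examples of its behavior:

- `mk_create_cmd /dev/md0 5 1 /dev/sdc /dev/sdd /dev/sde /dev/sdb` prints `mdadm -C /dev/md0 -a yes -l 5 -n 3 -x 1 /dev/sdc /dev/sdd /dev/sde /dev/sdb`.
- A RAID 6 request with only three active members is rejected.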


[root@localhost ~]# fdisk /dev/sde

Device contains neither a valid DOS partition table, nor Sun, SGI or OSF disklabel

Building a new DOS disklabel. Changes will remain in memory only,

until you decide to write them. After that, of course, the previous

content won't be recoverable.

The number of cylinders for this disk is set to 2610.

There is nothing wrong with that, but this is larger than 1024,

and could in certain setups cause problems with:

1) software that runs at boot time (e.g., old versions of LILO)

2) booting and partitioning software from other OSs

(e.g., DOS FDISK, OS/2 FDISK)

Warning: invalid flag 0x0000 of partition table 4 will be corrected by w(rite)

Command (m for help): n

Command action

e extended

p primary partition (1-4)

p

Partition number (1-4): 1

First cylinder (1-2610, default 1):

Using default value 1

Last cylinder or +size or +sizeM or +sizeK (1-2610, default 2610):

Using default value 2610

Command (m for help): t

Selected partition 1

Hex code (type L to list codes): fd

Changed system type of partition 1 to fd (Linux raid autodetect)

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

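This is just one example; partition the other disks the same way. Note: after partitioning with fdisk, change the partition type to fd (Linux raid autodetect).

The result: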

[root@localhost ~]# fdisk -l | grep /dev/sd

Disk /dev/sdb: 21.4 GB, 21474836480 bytes

/dev/sdb1 1 2610 20964793+ fd Linux raid autodetect

Disk /dev/sdc: 21.4 GB, 21474836480 bytes

/dev/sdc1 1 2610 20964793+ fd Linux raid autodetect

Disk /dev/sdd: 21.4 GB, 21474836480 bytes

/dev/sdd1 1 2610 20964793+ fd Linux raid autodetect

Disk /dev/sde: 21.4 GB, 21474836480 bytes

/dev/sde1 1 2610 20964793+ fd Linux raid autodetect

[root@localhost ~]#
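Every member disk needs the same partition, so the fdisk answers from the session above can be replayed for several disks: n, p, 1, two defaults, t, fd, w. This is a sketch under these assumptions:

- the disks are blank;
- fdisk asks the same questions as the CentOS 5 version shown here (prompts differ between versions);
- `partition_for_raid` is a hypothetical name.

It defaults to a dry run that only prints what it would feed to fdisk.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create one full-size partition of type fd
# (Linux raid autodetect) on each disk given, by replaying the fdisk answers
#   n, p, 1, <default first cylinder>, <default last cylinder>, t, fd, w
# DRY_RUN defaults to 1: print the intended input instead of running fdisk.
partition_for_raid() {
    local keys='n\np\n1\n\n\nt\nfd\nw\n'
    local disk
    for disk in "$@"; do
        if [ "${DRY_RUN:-1}" = 1 ]; then
            printf 'would run fdisk %s with input: %s\n' "$disk" "$keys"
        else
            printf '%b' "$keys" | fdisk "$disk"   # %b expands the \n escapes
        fi
    done
}
# Example (as root, after checking the dry-run output):
#   DRY_RUN=0 partition_for_raid /dev/sdb /dev/sdc /dev/sdd /dev/sde
```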


[root@localhost ~]# mdadm -C /dev/md0 -a yes -l 5 -n 3 /dev/sd{b,c,d}1

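mdadm: array /dev/md0 started.

# -C: create an array; followed by the array name

# -a: create the device file automatically

# -l: RAID level of the array

# -n: number of active devices in the array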


[root@localhost ~]# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

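md0 : active raid5 sdd1[2] sdc1[1] sdb1[0] # line 1

41929344 blocks level 5, 64k chunk, algorithm 2 [3/3] [UUU] # line 2

unused devices: <none>

[root@localhost ~]#

# Line 1: the md device name (md0); active or inactive shows whether the array can be read and written; next comes the RAID level (raid5), then the block devices that belong to the array. The number in square brackets [] is the device's number in the array, (S) marks a hot spare, and (F) marks a faulty disk.

# Line 2: the array size in blocks, followed by the chunk size and then the layout (algorithm) type, which differs between RAID levels. [3/3] [UUU] means the array has 3 disks and all 3 are working. [3/2] [_UU] would mean only 2 of the 3 disks are working, and the disk at the underscore position is faulty.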
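The status field described in line 2 is easy to check from a script. Below is a minimal sketch; `md_health` is a hypothetical name. It reads /proc/mdstat, or a saved copy of it, and reports each array as ok or degraded, listing the slots shown as `_`.

It assumes the `[n/m] [UU_]` format shown above. Arrays without redundancy, such as RAID 0, print no such field and are not listed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: summarize array health from /proc/mdstat (or a saved
# copy). For every array prints "NAME TOTAL/WORKING ok" or
# "NAME TOTAL/WORKING degraded missing-slots=LIST".
md_health() {
    awk '
        /^md[0-9]+ :/ { name = $1 }                      # e.g. "md0 : active raid5 ..."
        name != "" && match($0, /\[[0-9]+\/[0-9]+] \[[U_]+]/) {
            split(substr($0, RSTART, RLENGTH), f, " ")   # f[1]="[3/2]", f[2]="[_UU]"
            counts = f[1]; gsub(/\[/, "", counts); gsub(/]/, "", counts)
            map = f[2];    gsub(/\[/, "", map);    gsub(/]/, "", map)
            bad = ""
            for (i = 1; i <= length(map); i++)           # "_" marks a missing/faulty slot
                if (substr(map, i, 1) == "_")
                    bad = bad (bad == "" ? "" : ",") (i - 1)
            if (bad == "") print name, counts, "ok"
            else           print name, counts, "degraded", "missing-slots=" bad
            name = ""
        }
    ' "${1:-/proc/mdstat}"
}
```

For the degraded example in the explanation above, `[3/2] [_UU]`, it prints `md0 3/2 degraded missing-slots=0`.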


[root@localhost ~]# mdadm --detail /dev/md0

/dev/md0:

Version : 0.90

Creation Time : Thu Jun 27 17:32:19 2013

Raid Level : raid5

Array Size : 41929344 (39.99 GiB 42.94 GB)

Used Dev Size : 20964672 (19.99 GiB 21.47 GB)

Raid Devices : 3

Total Devices : 3

Preferred Minor : 0

Persistence : Superblock is persistent

Update Time : Thu Jun 27 17:34:08 2013

State : clean

Active Devices : 3 # active devices

Working Devices : 3

Failed Devices : 0

Spare Devices : 0

Layout : left-symmetric

Chunk Size : 64K # chunk size

UUID : 4f4529e0:15a7f327:0ec5e646:e202f88e

Events : 0.2

Number Major Minor RaidDevice State

0 8 17 0 active sync /dev/sdb1

1 8 33 1 active sync /dev/sdc1

2 8 49 2 active sync /dev/sdd1

[root@localhost ~]#


[root@localhost ~]# mkfs -t ext3 -b 4096 -L myraid5 /dev/md0

mke2fs 1.39 (29-May-2006)

Filesystem label=myraid5

OS type: Linux

Block size=4096 (log=2)

Fragment size=4096 (log=2)

5242880 inodes, 10482336 blocks

524116 blocks (5.00%) reserved for the super user

First data block=0

Maximum filesystem blocks=4294967296

320 block groups

32768 blocks per group, 32768 fragments per group

16384 inodes per group

Superblock backups stored on blocks:

32768, 98304, 163840, 229376, 294912, 819200, 884736, 1605632, 2654208,

4096000, 7962624

Writing inode tables: done

Creating journal (32768 blocks): done

Writing superblocks and filesystem accounting information: done

This filesystem will be automatically checked every 28 mounts or

180 days, whichever comes first. Use tune2fs -c or -i to override.

You have new mail in /var/spool/mail/root

[root@localhost ~]#

# -t: filesystem type

# -b: block size; one of 1024, 2048 or 4096

# -L: volume label


[root@localhost ~]# mkdir /myraid5

[root@localhost ~]# mount /dev/md0 /myraid5/

[root@localhost ~]# cd /myraid5/

[root@localhost myraid5]# ls

lost+found

[root@localhost myraid5]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/sda2 9.5G 1.8G 7.2G 21% /

/dev/sda3 4.8G 138M 4.4G 4% /data

/dev/sda1 251M 17M 222M 7% /boot

tmpfs 60M 0 60M 0% /dev/shm

/dev/md0 40G 177M 38G 1% /myraid5 # the new RAID 5 filesystem

[root@localhost myraid5]#


[root@localhost myraid5]# vim /etc/fstab

LABEL=/ / ext3 defaults 1 1

LABEL=/data /data ext3 defaults 1 2

LABEL=/boot /boot ext3 defaults 1 2

tmpfs /dev/shm tmpfs defaults 0 0

devpts /dev/pts devpts gid=5,mode=620 0 0

sysfs /sys sysfs defaults 0 0

proc /proc proc defaults 0 0

LABEL=SWAP-sda5 swap swap defaults 0 0

/dev/md0 /myraid5 ext3 defaults 0 0

[root@localhost myraid5]# mount -a

[root@localhost myraid5]# mount

/dev/sda2 on / type ext3 (rw)

proc on /proc type proc (rw)

sysfs on /sys type sysfs (rw)

devpts on /dev/pts type devpts (rw,gid=5,mode=620)

/dev/sda3 on /data type ext3 (rw)

/dev/sda1 on /boot type ext3 (rw)

tmpfs on /dev/shm type tmpfs (rw)

none on /proc/sys/fs/binfmt_misc type binfmt_misc (rw)

sunrpc on /var/lib/nfs/rpc_pipefs type rpc_pipefs (rw)

/dev/md0 on /myraid5 type ext3 (rw)

[root@localhost myraid5]#


[root@localhost ~]# echo DEVICE /dev/sd[b-h] /dev/sd[i-k]1 > /etc/mdadm.conf

[root@localhost ~]# mdadm -Ds >>/etc/mdadm.conf

[root@localhost ~]# cat /etc/mdadm.conf

DEVICE /dev/sdb /dev/sdc /dev/sdd /dev/sde /dev/sdf /dev/sdg /dev/sdh

/dev/sdi1 /dev/sdj1 /dev/sdk1

ARRAY /dev/md1 level=raid0 num-devices=3

UUID=dcff6ec9:53c4c668:58b81af9:ef71989d

ARRAY /dev/md0 level=raid10 num-devices=6 spares=1

UUID=0cabc5e5:842d4baa:e3f6261b:a17a477a


[root@localhost myraid5]# mdadm -a /dev/md0 /dev/sde

mdadm: added /dev/sde

[root@localhost myraid5]# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md0 : active raid5 sde[3](S) sdd1[2] sdc1[1] sdb1[0]

41929344 blocks level 5, 64k chunk, algorithm 2 [3/3] [UUU]

unused devices: <none>


[root@localhost myraid5]# mdadm -D /dev/md0

/dev/md0:

Version : 0.90

Creation Time : Thu Jun 27 17:32:19 2013

Raid Level : raid5

Array Size : 41929344 (39.99 GiB 42.94 GB)

Used Dev Size : 20964672 (19.99 GiB 21.47 GB)

Raid Devices : 3

Total Devices : 4

Preferred Minor : 0

Persistence : Superblock is persistent

Update Time : Thu Jun 27 18:40:46 2013

State : clean

Active Devices : 3

Working Devices : 4

Failed Devices : 0

Spare Devices : 1

Layout : left-symmetric

Chunk Size : 64K

UUID : 4f4529e0:15a7f327:0ec5e646:e202f88e

Events : 0.4

Number Major Minor RaidDevice State

0 8 17 0 active sync /dev/sdb1

1 8 33 1 active sync /dev/sdc1

2 8 49 2 active sync /dev/sdd1

3 8 64 - spare /dev/sde # spare disk
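In /proc/mdstat the same roles appear as suffixes: (S) for a spare and (F) for a faulty device. The sketch below lists each member of one array with its role. `md_members` is a hypothetical helper. It assumes member entries look like `name[number]`, optionally followed by (S) or (F), as in the output above. A device without a suffix is reported as a plain member; this includes a spare that is currently being rebuilt into the array.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list the members of one md array with their role,
# based on the (S)/(F) suffixes in /proc/mdstat (or a saved copy).
# Usage: md_members [MDSTAT_FILE] [ARRAY_NAME]   (defaults: /proc/mdstat md0)
md_members() {
    awk -v want="${2:-md0}" '
        $1 == want && $2 == ":" {
            for (i = 3; i <= NF; i++) {
                if ($i !~ /\[[0-9]+]/) continue     # skip "active", "raid5", ...
                dev = $i; role = "member"
                if (dev ~ /\(S\)$/) role = "spare"
                if (dev ~ /\(F\)$/) role = "faulty"
                sub(/\[.*/, "", dev)                # "sde[3](S)" becomes "sde"
                print dev, role
            }
        }
    ' "${1:-/proc/mdstat}"
}
```

For the array above, `md_members /proc/mdstat md0` would list sde as spare and the three partitions as members.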


[root@localhost myraid5]# mdadm -f /dev/md0 /dev/sdb1

mdadm: set /dev/sdb1 faulty in /dev/md0

[root@localhost myraid5]# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md0 : active raid5 sde[3] sdd1[2] sdc1[1] sdb1[4](F)

41929344 blocks level 5, 64k chunk, algorithm 2 [3/2] [_UU]

[=======>.............] recovery = 37.0% (7762944/20964672) finish=1.0min speed=206190K/sec # recovery in progress

unused devices: <none>

[root@localhost myraid5]#

[root@localhost myraid5]# mdadm -D /dev/md0

/dev/md0:

Version : 0.90

Creation Time : Thu Jun 27 17:32:19 2013

Raid Level : raid5

Array Size : 41929344 (39.99 GiB 42.94 GB)

Used Dev Size : 20964672 (19.99 GiB 21.47 GB)

Raid Devices : 3

Total Devices : 4

Preferred Minor : 0

Persistence : Superblock is persistent

Update Time : Thu Jun 27 18:54:39 2013

State : clean, degraded, recovering

Active Devices : 2

Working Devices : 3

Failed Devices : 1

Spare Devices : 1

Layout : left-symmetric

Chunk Size : 64K

Rebuild Status : 29% complete

UUID : 4f4529e0:15a7f327:0ec5e646:e202f88e

Events : 0.6

Number Major Minor RaidDevice State

3 8 64 0 spare rebuilding /dev/sde # the spare is being rebuilt into the RAID 5

1 8 33 1 active sync /dev/sdc1

2 8 49 2 active sync /dev/sdd1

4 8 17 - faulty spare /dev/sdb1

[root@localhost myraid5]# mdadm -D /dev/md0

/dev/md0:

Version : 0.90

Creation Time : Thu Jun 27 17:32:19 2013

Raid Level : raid5

Array Size : 41929344 (39.99 GiB 42.94 GB)

Used Dev Size : 20964672 (19.99 GiB 21.47 GB)

Raid Devices : 3

Total Devices : 4

Preferred Minor : 0

Persistence : Superblock is persistent

Update Time : Thu Jun 27 18:56:26 2013

State : clean

Active Devices : 3

Working Devices : 3

Failed Devices : 1

Spare Devices : 0

Layout : left-symmetric

Chunk Size : 64K

UUID : 4f4529e0:15a7f327:0ec5e646:e202f88e

Events : 0.8

Number Major Minor RaidDevice State

0 8 64 0 active sync /dev/sde # resync complete

1 8 33 1 active sync /dev/sdc1

2 8 49 2 active sync /dev/sdd1

3 8 17 - faulty spare /dev/sdb1 # faulty disk
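The recovery line in /proc/mdstat shown above can be polled to wait for a rebuild to finish, for example before removing the faulty disk. This sketch assumes progress lines look like `recovery = 37.0%`; the kernel may pad the number with extra spaces, which the pattern allows. `md_progress` and `wait_for_md` are hypothetical names.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print "KIND PERCENT" (e.g. "recovery 37.0") while an
# array is recovering/resyncing/reshaping, or "idle" otherwise.
# Usage: md_progress [MDSTAT_FILE] [ARRAY_NAME]   (defaults: /proc/mdstat md0)
md_progress() {
    awk -v want="${2:-md0}" '
        $1 ~ /^md[0-9]+$/ && $2 == ":" { cur = $1 }
        cur == want && match($0, /(recovery|resync|reshape) *= *[0-9.]+%/) {
            s = substr($0, RSTART, RLENGTH)
            kind = s; sub(/ *=.*/, "", kind)                   # e.g. "recovery"
            pct = s;  sub(/.*= */, "", pct); sub(/%/, "", pct) # e.g. "37.0"
            print kind, pct
            found = 1
            exit
        }
        END { if (!found) print "idle" }
    ' "${1:-/proc/mdstat}"
}

# Block until ARRAY_NAME (default md0) is no longer rebuilding; polls every 10 s.
wait_for_md() {
    while [ "$(md_progress /proc/mdstat "${1:-md0}")" != idle ]; do
        sleep 10
    done
}
```

Usage: `wait_for_md md0 && mdadm -r /dev/md0 /dev/sdb1` removes the faulty disk only after the rebuild has finished.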


[root@localhost myraid5]# mdadm -r /dev/md0 /dev/sdb1

mdadm: hot removed /dev/sdb1

[root@localhost myraid5]# mdadm -D /dev/md0

/dev/md0:

Version : 0.90

Creation Time : Thu Jun 27 17:32:19 2013

Raid Level : raid5

Array Size : 41929344 (39.99 GiB 42.94 GB)

Used Dev Size : 20964672 (19.99 GiB 21.47 GB)

Raid Devices : 3

Total Devices : 3

Preferred Minor : 0

Persistence : Superblock is persistent

Update Time : Thu Jun 27 19:00:19 2013

State : clean

Active Devices : 3

Working Devices : 3

Failed Devices : 0

Spare Devices : 0

Layout : left-symmetric

Chunk Size : 64K

UUID : 4f4529e0:15a7f327:0ec5e646:e202f88e

Events : 0.10

Number Major Minor RaidDevice State

0 8 64 0 active sync /dev/sde

1 8 33 1 active sync /dev/sdc1

2 8 49 2 active sync /dev/sdd1

[root@localhost myraid5]#


[root@localhost ~]# mdadm -S /dev/md0 # stop the RAID

mdadm: fail to stop array /dev/md0: Device or resource busy

Perhaps a running process, mounted filesystem or active volume group?

# The error above means the array is in use and cannot be stopped; unmount it first, then stop it

[root@localhost ~]# umount /myraid5/ # unmount md0

[root@localhost ~]# mount

/dev/sda2 on / type ext3 (rw)

proc on /proc type proc (rw)

sysfs on /sys type sysfs (rw)

devpts on /dev/pts type devpts (rw,gid=5,mode=620)

/dev/sda3 on /data type ext3 (rw)

/dev/sda1 on /boot type ext3 (rw)

tmpfs on /dev/shm type tmpfs (rw)

none on /proc/sys/fs/binfmt_misc type binfmt_misc (rw)

sunrpc on /var/lib/nfs/rpc_pipefs type rpc_pipefs (rw)

[root@localhost ~]# mdadm -S /dev/md0 # stop the RAID

mdadm: stopped /dev/md0 # stopped successfully
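In the stop sequence above, the first mdadm -S fails because /myraid5 is still mounted. The sequence can be wrapped so that the unmount always happens first. The sketch below uses hypothetical names. It looks the md device up in /proc/mounts and assumes it appears there under the same name, e.g. /dev/md0. Set DRY_RUN=1 to only print the commands.

```shell
#!/usr/bin/env bash
# Hypothetical helper: unmount an md device if it is mounted, then stop it.
# DRY_RUN=1 prints the commands instead of running them.
# MOUNTS_FILE defaults to /proc/mounts (overridable for testing).
# Usage: stop_md /dev/md0
stop_md() {
    local md=$1 run=""
    if [ "${DRY_RUN:-0}" = 1 ]; then
        run=echo
    fi
    # Is the device listed in the first column of the mount table?
    if awk -v d="$md" '$1 == d { found = 1 } END { exit !found }' \
            "${MOUNTS_FILE:-/proc/mounts}"; then
        $run umount "$md" || return 1
    fi
    $run mdadm -S "$md"
}
```

While the array is still mounted, `DRY_RUN=1 stop_md /dev/md0` prints `umount /dev/md0` followed by `mdadm -S /dev/md0`.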


[root@localhost ~]# mdadm -E /dev/sdc1 # examine the superblock

/dev/sdc1:

Magic : a92b4efc

Version : 0.90.00

UUID : 4f4529e0:15a7f327:0ec5e646:e202f88e

Creation Time : Thu Jun 27 17:32:19 2013

Raid Level : raid5

Used Dev Size : 20964672 (19.99 GiB 21.47 GB)

Array Size : 41929344 (39.99 GiB 42.94 GB)

Raid Devices : 3

Total Devices : 3

Preferred Minor : 0

Update Time : Thu Jun 27 19:14:02 2013

State : clean

Active Devices : 3

Working Devices : 3

Failed Devices : 0

Spare Devices : 0

Checksum : a3ba56ab - correct

Events : 10

Layout : left-symmetric

Chunk Size : 64K

Number Major Minor RaidDevice State

this 1 8 33 1 active sync /dev/sdc1

0 0 8 64 0 active sync /dev/sde

1 1 8 33 1 active sync /dev/sdc1

2 2 8 49 2 active sync /dev/sdd1

[root@localhost ~]#

[root@localhost ~]# mdadm -A /dev/md0 /dev/sde /dev/sdc1 /dev/sdd1 # start (assemble) the RAID

mdadm: /dev/md0 has been started with 3 drives.

[root@localhost ~]# mdadm -D /dev/md0

/dev/md0:

Version : 0.90

Creation Time : Thu Jun 27 17:32:19 2013

Raid Level : raid5

Array Size : 41929344 (39.99 GiB 42.94 GB)

Used Dev Size : 20964672 (19.99 GiB 21.47 GB)

Raid Devices : 3

Total Devices : 3

Preferred Minor : 0

Persistence : Superblock is persistent

Update Time : Thu Jun 27 19:09:56 2013

State : clean

Active Devices : 3

Working Devices : 3

Failed Devices : 0

Spare Devices : 0

Layout : left-symmetric

Chunk Size : 64K

UUID : 4f4529e0:15a7f327:0ec5e646:e202f88e

Events : 0.10

Number Major Minor RaidDevice State

0 8 64 0 active sync /dev/sde

1 8 33 1 active sync /dev/sdc1

2 8 49 2 active sync /dev/sdd1

[root@localhost ~]# cat /proc/mdstat # check the RAID

Personalities : [raid6] [raid5] [raid4]

md0 : active raid5 sde[0] sdd1[2] sdc1[1]

41929344 blocks level 5, 64k chunk, algorithm 2 [3/3] [UUU]

unused devices: <none>

[root@localhost ~]# mount /dev/md0 /myraid5/ # mount it

[root@localhost ~]# ls /myraid5/ # list its contents

lost+found

[root@localhost ~]#

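If a config file (/etc/mdadm.conf) exists, you can run mdadm -As /dev/md0. mdadm first reads the DEVICE entries in mdadm.conf, then reads the metadata from each of those devices and checks whether it matches the ARRAY entry; if it does, the array is started. If there is no /etc/mdadm.conf and you do not know which disks make up the array, use --examine (short form -E) to check whether a block device carries array metadata.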
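Without /etc/mdadm.conf, the UUID line of `mdadm -E` is what ties members to an array. The sketch below runs `mdadm -E` on each candidate device and groups the devices by UUID, so each group can be passed to `mdadm -A`. It assumes 0.90-style output with a `UUID : ...` line, as shown above; 1.x metadata labels that field `Array UUID` instead. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for assembling without /etc/mdadm.conf.
# examine_uuid: read an "mdadm -E" report on stdin, print its UUID field.
examine_uuid() {
    awk -F' : ' '$1 ~ /^ *UUID$/ { print $2; exit }'
}

# group_by_uuid: run "mdadm -E" on each device and print one line per array:
#   UUID DEVICE...
# Devices without an md superblock produce no UUID and are skipped.
group_by_uuid() {
    local dev uuid
    for dev in "$@"; do
        uuid=$(mdadm -E "$dev" 2>/dev/null | examine_uuid)
        if [ -n "$uuid" ]; then
            echo "$uuid $dev"
        fi
    done | awk '{ devs[$1] = devs[$1] " " $2 } END { for (u in devs) print u devs[u] }'
}
# Example (as root): group_by_uuid /dev/sd[b-e] /dev/sd[b-e]1
#   then: mdadm -A /dev/md0 <devices printed for the wanted UUID>
```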


[root@localhost ~]# umount /myraid5/ # unmount md0

[root@localhost ~]# mount # check the mounts

/dev/sda2 on / type ext3 (rw)

proc on /proc type proc (rw)

sysfs on /sys type sysfs (rw)

devpts on /dev/pts type devpts (rw,gid=5,mode=620)

/dev/sda3 on /data type ext3 (rw)

/dev/sda1 on /boot type ext3 (rw)

tmpfs on /dev/shm type tmpfs (rw)

none on /proc/sys/fs/binfmt_misc type binfmt_misc (rw)

sunrpc on /var/lib/nfs/rpc_pipefs type rpc_pipefs (rw)

[root@localhost ~]# mdadm -Ss /dev/md0

mdadm: stopped /dev/md0

[root@localhost ~]# mdadm --zero-superblock /dev/sd{b,c,d}1 /dev/sde

# --zero-superblock: if a device contains a valid md superblock,

# overwrite it with zeros, erasing the array information stored in it.

[root@localhost ~]# rm -rf /etc/mdadm.conf # delete the RAID config file

mke2fs -j -b 4096 -E stride=16 /dev/md0 # RAID tuning must be passed through the -E extended options (16 = 64 KiB chunk / 4 KiB block)
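The stride value follows from the array geometry:

- stride = chunk size / filesystem block size. A 64 KiB chunk with 4 KiB blocks gives 16.
- The full stripe (stripe-width) = stride × number of data disks, i.e. n-1 for RAID 5.

Below is a hypothetical helper that computes both. Older e2fsprogs, possibly including the 1.39 shown above, may not accept stripe-width; in that case pass only the stride= part.

```shell
#!/usr/bin/env bash
# Hypothetical helper: derive ext2/ext3 "-E" RAID tuning values.
#   stride       = chunk size / filesystem block size   (both in KiB)
#   stripe-width = stride * number of data-bearing disks
#                  (RAID 0: n, RAID 5: n-1, RAID 6: n-2)
# Usage: ext_raid_opts CHUNK_KB BLOCK_KB DATA_DISKS
ext_raid_opts() {
    local chunk_kb=$1 block_kb=$2 data_disks=$3
    if (( chunk_kb % block_kb != 0 )); then
        echo "chunk size must be a multiple of the block size" >&2
        return 1
    fi
    local stride=$(( chunk_kb / block_kb ))
    echo "stride=$stride,stripe-width=$(( stride * data_disks ))"
}
# Example for this article's RAID 5 (64K chunk, 4K blocks, 3 disks = 2 data disks):
#   mke2fs -j -b 4096 -E "$(ext_raid_opts 64 4 2)" /dev/md0
```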


[root@localhost ~]# mdadm --monitor --mail=root@localhost --program=/root/md.sh --syslog --delay=300 /dev/md0 --daemonise

3305

[root@localhost ~]# mdadm -f /dev/md0 /dev/sdb

mdadm: set /dev/sdb faulty in /dev/md0


[root@localhost ~]# mdadm -D /dev/md0

/dev/md0:

Version : 0.90

Creation Time : Thu Jun 27 21:54:21 2013

Raid Level : raid5

Array Size : 41942912 (40.00 GiB 42.95 GB)

Used Dev Size : 20971456 (20.00 GiB 21.47 GB)

Raid Devices : 3

Total Devices : 4

Preferred Minor : 0

Persistence : Superblock is persistent

Update Time : Thu Jun 27 22:03:48 2013

State : clean, degraded, recovering

Active Devices : 2

Working Devices : 3

Failed Devices : 1

Spare Devices : 1

Layout : left-symmetric

Chunk Size : 64K

Rebuild Status : 27% complete

UUID : c7b98767:dbe2c944:442069fc:23ae34d9

Events : 0.4

Number Major Minor RaidDevice State

3 8 64 0 spare rebuilding /dev/sde

1 8 32 1 active sync /dev/sdc

2 8 48 2 active sync /dev/sdd

4 8 16 - faulty spare /dev/sdb

[root@localhost ~]# tail -f /var/log/messages

Jun 27 22:03:48 localhost kernel: --- rd:3 wd:2 fd:1

Jun 27 22:03:48 localhost kernel: disk 0, o:1, dev:sde

Jun 27 22:03:48 localhost kernel: disk 1, o:1, dev:sdc

Jun 27 22:03:48 localhost kernel: disk 2, o:1, dev:sdd

Jun 27 22:03:48 localhost kernel: md: syncing RAID array md0

Jun 27 22:03:48 localhost kernel: md: minimum _guaranteed_ reconstruction speed: 1000 KB/sec/disc.

Jun 27 22:03:48 localhost kernel: md: using maximum available idle IO bandwidth (but not more than 200000 KB/sec) for reconstruction.

Jun 27 22:03:49 localhost kernel: md: using 128k window, over a total of 20971456 blocks.

Jun 27 22:03:48 localhost mdadm[3305]: RebuildStarted event detected on md device /dev/md0

Jun 27 22:03:49 localhost mdadm[3305]: Fail event detected on md device /dev/md0, component device /dev/sdb

[root@localhost ~]# mail

Mail version 8.1 6/6/93. Type ? for help.

"/var/spool/mail/root": 4 messages 4 new

>N 1 logwatch@localhost.l Wed Jun 12 03:37 43/1629 "Logwatch for localhost.localdomain (Linux)"

N 2 logwatch@localhost.l Wed Jun 12 04:02 43/1629 "Logwatch for localhost.localdomain (Linux)"

N 3 logwatch@localhost.l Thu Jun 27 17:58 43/1629 "Logwatch for localhost.localdomain (Linux)"

N 4 root@localhost.local Thu Jun 27 22:03 32/1255 "Fail event on /dev/md0:localhost.localdomain"

& 4

Message 4:

From root@localhost.localdomain Thu Jun 27 22:03:49 2013

Date: Thu, 27 Jun 2013 22:03:49 +0800

From: mdadm monitoring <root@localhost.localdomain>

To: root@localhost.localdomain

Subject: Fail event on /dev/md0:localhost.localdomain

This is an automatically generated mail message from mdadm

running on localhost.localdomain

A Fail event had been detected on md device /dev/md0.

It could be related to component device /dev/sdb.

Faithfully yours, etc.

P.S. The /proc/mdstat file currently contains the following:

Personalities : [raid6] [raid5] [raid4]

md0 : active raid5 sdd[2] sde[3] sdc[1] sdb[4](F)

41942912 blocks level 5, 64k chunk, algorithm 2 [3/2] [_UU]

[>....................] recovery = 0.9% (200064/20971456) finish=1.7min speed=200064K/sec

unused devices: <none>

&
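The monitor command above passes --program=/root/md.sh, but the script itself is not shown. mdadm runs that program with the event name and the md device as arguments, plus a related component device for some events. Below is a minimal sketch of such a script that appends one line per event to a log file. The log path and the MD_EVENT_LOG override are assumptions made for this example.

```shell
#!/usr/bin/env bash
# Sketch of a --program script for "mdadm --monitor".
# mdadm invokes it as:  md.sh EVENT MD_DEVICE [COMPONENT_DEVICE]
# e.g.  md.sh Fail /dev/md0 /dev/sdb
log_md_event() {
    local event=$1 md=$2 component=${3:-none}
    local log=${MD_EVENT_LOG:-/var/log/md-events.log}   # assumed log location
    printf '%s event=%s array=%s component=%s\n' \
        "$(date '+%F %T')" "$event" "$md" "$component" >> "$log"
}

# Only log when called the way mdadm calls it (at least EVENT and MD_DEVICE).
if [ "$#" -ge 2 ]; then
    log_md_event "$@"
fi
```

Save it as /root/md.sh and make it executable (chmod +x /root/md.sh) before starting the monitor.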


mdadm -C /dev/md0 -l5 -n3 /dev/sd[b-d] -x1 /dev/sde --size=1024000

# --size is given in KiB

[root@localhost ~]# mdadm -C /dev/md0 -l5 -n3 /dev/sd[b-d] -x1 /dev/sde --size=1024000

mdadm: array /dev/md0 started.

[root@localhost ~]# mdadm -D /dev/md0

/dev/md0:

Version : 0.90

Creation Time : Thu Jun 27 22:24:51 2013

Raid Level : raid5

Array Size : 2048000 (2000.34 MiB 2097.15 MB)

Used Dev Size : 1024000 (1000.17 MiB 1048.58 MB)

Raid Devices : 3

Total Devices : 4

Preferred Minor : 0

Persistence : Superblock is persistent

Update Time : Thu Jun 27 22:24:51 2013

State : clean, degraded, recovering

Active Devices : 2

Working Devices : 4

Failed Devices : 0

Spare Devices : 2

Layout : left-symmetric

Chunk Size : 64K

Rebuild Status : 73% complete

UUID : 78e766fb:776d62ee:d22de2dc:d5cf5bb9

Events : 0.1

Number Major Minor RaidDevice State

0 8 16 0 active sync /dev/sdb

1 8 32 1 active sync /dev/sdc

4 8 48 2 spare rebuilding /dev/sdd

3 8 64 - spare /dev/sde

[root@localhost ~]#

[root@localhost ~]# mdadm --grow /dev/md0 --size=2048000 # grow the space used on each member

[root@localhost ~]# mdadm -D /dev/md0

/dev/md0:

Version : 0.90

Creation Time : Thu Jun 27 22:24:51 2013

Raid Level : raid5

Array Size : 4096000 (3.91 GiB 4.19 GB)

Used Dev Size : 2048000 (2000.34 MiB 2097.15 MB)

Raid Devices : 3

Total Devices : 4

Preferred Minor : 0

Persistence : Superblock is persistent

Update Time : Thu Jun 27 22:28:34 2013

State : clean, resyncing

Active Devices : 3

Working Devices : 4

Failed Devices : 0

Spare Devices : 1

Layout : left-symmetric

Chunk Size : 64K

Rebuild Status : 90% complete

UUID : 78e766fb:776d62ee:d22de2dc:d5cf5bb9

Events : 0.3

Number Major Minor RaidDevice State

0 8 16 0 active sync /dev/sdb

1 8 32 1 active sync /dev/sdc

2 8 48 2 active sync /dev/sdd

3 8 64 - spare /dev/sde

[root@localhost ~]#
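Before growing, the -z rule from the option list can be checked. The per-device size must be a multiple of the chunk size, and for RAID 5 the resulting array size is (n-1) × per-device size. Below is a hypothetical pre-check; all sizes are in KiB.

```shell
#!/usr/bin/env bash
# Hypothetical pre-check for "mdadm --grow /dev/mdX --size=SIZE_KB" on RAID 5:
# the per-device size must be a multiple of the chunk size; prints the array
# size (KiB) the grow should produce, i.e. (NDISKS - 1) * SIZE_KB.
# Usage: raid5_grow_check SIZE_KB CHUNK_KB NDISKS
raid5_grow_check() {
    local size_kb=$1 chunk_kb=$2 ndisks=$3
    if (( size_kb % chunk_kb != 0 )); then
        echo "size ${size_kb} KiB is not a multiple of the ${chunk_kb} KiB chunk" >&2
        return 1
    fi
    echo $(( (ndisks - 1) * size_kb ))
}
```

For example, `raid5_grow_check 2048000 64 3` prints 4096000, the Array Size reported after the grow above. Growing the array does not enlarge a filesystem on it; that has to be done separately (for ext2/ext3, with resize2fs).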

(Editor: IT)
