
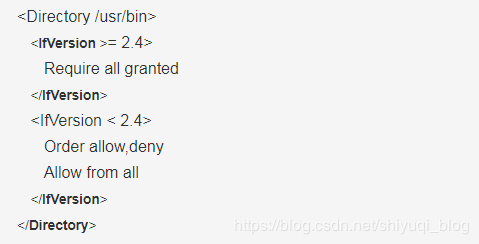

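Recommended minimum configuration on CentOS:

- Controller node: 1 processor, 4 GB memory, and 5 GB storage
- Compute node: 1 processor, 2 GB memory, and 10 GB storage

I. Environment preparation

The table below separates database passwords from OpenStack user passwords. Replace each placeholder with a value of your own.

| Password | Description |
| --- | --- |
| RABBIT_PASS | Password of the RabbitMQ user openstack |
| 123456 | Password of the database root user |
| KEYSTONE_DBPASS | Database password for keystone |
| GLANCE_DBPASS | Database password for glance |
| NOVA_DBPASS | Database password for nova |
| PLACEMENT_DBPASS | Database password for placement |
| NEUTRON_DBPASS | Database password for neutron |
| DASH_DBPASS | Database password for the dashboard |
| CINDER_DBPASS | Database password for cinder |
| ADMIN_PASS | Password of the admin user |
| myuser_PASS | Password of the myuser user |
| GLANCE_PASS | Password of the glance user |
| NOVA_PASS | Password of the nova user |
| PLACEMENT_PASS | Password of the placement user |
| NEUTRON_PASS | Password of the neutron user |
| METADATA_SECRET | Secret for the metadata proxy |
| CINDER_PASS | Password of the cinder user |

1.1 Network environment

1.1.1 Controller node

Configure the first interface as the management interface:

    IP address: 10.0.0.11
    Netmask: 255.255.255.0
    Default gateway: 10.0.0.1

The provider interface uses a special configuration with no IP address assigned to it. Configure the second interface as the provider interface, replacing INTERFACE_NAME with the actual interface name, for example eth1 or ens224.

    vi /etc/sysconfig/network-scripts/ifcfg-INTERFACE_NAME

    DEVICE=INTERFACE_NAME
    TYPE=Ethernet
    ONBOOT="yes"
    BOOTPROTO="none"

Set the hostname of the controller node to controller:

    hostnamectl set-hostname controller

Add the following to /etc/hosts:

    # controller
    10.0.0.11 controller
    # compute
    10.0.0.31 compute
    # block
    10.0.0.41 block1

1.1.2 Compute node

Configure the first interface as the management interface:

    IP address: 10.0.0.31
    Netmask: 255.255.255.0
    Default gateway: 10.0.0.1

The provider interface uses a special configuration with no IP address assigned to it. Configure the second interface as the provider interface, replacing INTERFACE_NAME with the actual interface name, for example eth1.

    vi /etc/sysconfig/network-scripts/ifcfg-INTERFACE_NAME

    TYPE=Ethernet
    NAME=INTERFACE_NAME
    DEVICE=INTERFACE_NAME
    ONBOOT=yes
    BOOTPROTO=none
    IPADDR=10.0.0.11
    PREFIX=24
    GATEWAY=10.0.0.1

Note: the IPADDR, PREFIX and GATEWAY lines conflict with the statement that the provider interface has no IP address, and 10.0.0.11 is the controller's management address. They presumably belong in the management interface's file (with IPADDR=10.0.0.31 on this node) and should be left out of the provider interface's file.

Set the hostname of the compute node to compute:

    hostnamectl set-hostname compute

Add the following to /etc/hosts:

    # controller
    10.0.0.11 controller
    # compute
    10.0.0.31 compute
    # block
    10.0.0.41 block1

1.1.3 Block storage node (optional)

If you want to deploy the Block Storage service, configure an additional storage node with this address:

    IP address: 10.0.0.41
    Netmask: 255.255.255.0
    Default gateway: 10.0.0.1

Edit /etc/hosts in the same way as above.

1.2 NTP (Network Time Protocol)

1.2.1 Controller node

Install the chrony service:

    yum -y install chrony

Edit the configuration file, replacing NTP_SERVER with the hostname or IP address of a suitable, more accurate NTP server (for example cn.ntp.org.cn or ntp1.aliyun.com):

    vi /etc/chrony.conf

    server NTP_SERVER iburst
    allow 10.0.0.0/24

Enable chrony at boot and start the service:

    systemctl enable chronyd.service
    systemctl start chronyd.service

1.2.2 Other nodes

    yum -y install chrony

Edit the configuration file so that the node synchronizes with the controller:

    vi /etc/chrony.conf

    server controller iburst

Comment out the existing pool line:

    #pool 2.debian.pool.ntp.org offline iburst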
Enable chrony at boot and start the service:

    systemctl enable chronyd.service
    systemctl start chronyd.service

1.2.3 Verification

Run this command on the controller node:

    chronyc sources

    210 Number of sources = 1
    MS Name/IP address         Stratum Poll Reach LastRx Last sample
    ^* cn.ntp.org.cn                 2    6   177     46    +17us[  -23us] +/-   68ms

Run the same command on all other nodes:

    chronyc sources

    210 Number of sources = 1
    MS Name/IP address         Stratum Poll Reach LastRx Last sample
    ^* controller                    3    9   377    421    +15us[  -87us] +/-   15ms

1.3 Install the OpenStack packages (Rocky release, all nodes)

On RHEL, download and install the RDO repository RPM to enable the OpenStack repository:

    yum -y install https://rdoproject.org/repos/rdo-release.rpm

On CentOS, the extras repository is enabled by default and provides the package that enables the OpenStack repository, so installing that package is enough:

    yum -y install centos-release-openstack-rocky

Upgrade the packages on all nodes:

    yum -y upgrade

Install the OpenStack client:

    yum -y install python-openstackclient

Optional: RHEL and CentOS enable SELinux by default. Install the openstack-selinux package to manage the security policies for OpenStack services automatically (or simply disable SELinux and firewalld):

    yum -y install openstack-selinux

I did not install openstack-selinux here. Instead, I disabled SELinux and firewalld on all nodes:

    systemctl disable firewalld
    vim /etc/sysconfig/selinux

    SELINUX=disabled

Rebooting all nodes is recommended so that the settings above take effect:

    reboot

1.4 Install the SQL database (controller node)

    yum -y install mariadb mariadb-server python2-PyMySQL

Create and edit /etc/my.cnf.d/openstack.cnf (the file does not exist by default):

    vi /etc/my.cnf.d/openstack.cnf

    [mysqld]
    # IP address of the controller node
    bind-address = 10.0.0.11
    default-storage-engine = innodb
    innodb_file_per_table = on
    max_connections = 4096
    collation-server = utf8_general_ci
    character-set-server = utf8

Enable the database service at boot and start it:

    systemctl enable mariadb.service
    systemctl start mariadb.service

Run the mysql_secure_installation script to secure the database service:

    mysql_secure_installation

Alternatively, the following command answers the prompts automatically and sets the root password to 123456:

    echo -e "\nY\n123456\n123456\nY\nn\nY\nY\n" | mysql_secure_installation

1.5 Message queue (controller node)

    yum -y install rabbitmq-server

Enable the message queue service at boot and start it:

    systemctl enable rabbitmq-server.service
    systemctl start rabbitmq-server.service

Add the openstack user (replace RABBIT_PASS with a suitable password):

    rabbitmqctl add_user openstack RABBIT_PASS

Allow configuration, write, and read access for the openstack user:

    rabbitmqctl set_permissions openstack ".*" ".*" ".*"

Give the openstack user the administrator tag:

    rabbitmqctl set_user_tags openstack administrator

Enable the web management plugin:

    rabbitmq-plugins enable rabbitmq_management

Restart the service:

    systemctl restart rabbitmq-server.service

Check that it is running:

    netstat -nltp | grep 5672

Web access (test): http://192.168.1.38:15672 (this address comes from the author's own test environment; use the address of your controller).

1.6 Memcached cache

    yum -y install memcached python-memcached

Edit the configuration file, changing the existing line OPTIONS="-l 127.0.0.1,::1":

    vi /etc/sysconfig/memcached

    OPTIONS="-l 127.0.0.1,::1,controller"

Enable the Memcached service at boot and start it:

    systemctl enable memcached.service
    systemctl start memcached.service

II. Installing the OpenStack services

1. keystone Identity service (controller node)

1.1 Before installing and configuring keystone, create the keystone database:

    mysql -u root -p
    MariaDB [(none)]> CREATE DATABASE keystone;

Grant proper access to the keystone database (replace KEYSTONE_DBPASS with a suitable password):

    MariaDB [(none)]> GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' IDENTIFIED BY 'KEYSTONE_DBPASS';
    MariaDB [(none)]> GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' IDENTIFIED BY 'KEYSTONE_DBPASS';

Exit the database client:

    exit

Install the packages:

    yum -y install openstack-keystone httpd mod_wsgi

Edit the keystone configuration file (replace KEYSTONE_DBPASS with a suitable password):

    vi /etc/keystone/keystone.conf

    [database]
    # Comment out or remove any other connection options in the [database] section
    connection = mysql+pymysql://keystone:KEYSTONE_DBPASS@controller/keystone

    [token]
    provider = fernet

Populate the database:

    su -s /bin/sh -c "keystone-manage db_sync" keystone

It is worth logging in to the keystone database to check that the tables were created.

Initialize the Fernet key repositories:

    keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone
    keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

Bootstrap the Identity service (replace ADMIN_PASS with the password for the admin user). Before the Queens release, keystone had to run on two separate ports to accommodate the Identity v2 API, which usually ran a separate admin-only service on port 35357. With the v2 API removed, keystone can serve all interfaces on the same port.

    keystone-manage bootstrap --bootstrap-password ADMIN_PASS \
      --bootstrap-admin-url http://controller:5000/v3/ \
      --bootstrap-internal-url http://controller:5000/v3/ \
      --bootstrap-public-url http://controller:5000/v3/ \
      --bootstrap-region-id RegionOne

1.2 Configure the Apache HTTP server (controller node)

    vi /etc/httpd/conf/httpd.conf

    ServerName controller:80

Create a link to the /usr/share/keystone/wsgi-keystone.conf file:

    ln -s /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d/

Enable the Apache HTTP service at boot and start it:

    systemctl enable httpd.service
    systemctl start httpd.service

Create a script file named admin-openrc that sets the environment variables (ADMIN_PASS is the password of the admin user):

    vi admin-openrc

    export OS_USERNAME=admin
    export OS_PASSWORD=ADMIN_PASS
    export OS_PROJECT_NAME=admin
    export OS_USER_DOMAIN_NAME=Default
    export OS_PROJECT_DOMAIN_NAME=Default
    export OS_AUTH_URL=http://controller:5000/v3
    export OS_IDENTITY_API_VERSION=3
    export OS_IMAGE_API_VERSION=2

Source the script:

    . admin-openrc

1.3 Create the OpenStack domain, projects, and roles (controller node)

The "default" domain already exists from the keystone-manage bootstrap step, so this step is optional. The formal way to create a new domain is:

    openstack domain create --description "An Example Domain" example

Create the service project:

    openstack project create --domain default --description "Service Project" service

    +-------------+----------------------------------+
    | Field       | Value                            |
    +-------------+----------------------------------+
    | description | Service Project                  |
    | domain_id   | default                          |
    | enabled     | True                             |
    | id          | 24ac7f19cd944f4cba1d77469b2a73ed |
    | is_domain   | False                            |
    | name        | service                          |
    | parent_id   | default                          |
    | tags        | []                               |
    +-------------+----------------------------------+

Recommendation: regular (non-admin) tasks should use an unprivileged project and user. As an example, the following steps create the myproject project and the myuser user.

Create the myproject project (do not repeat this step when creating additional users for this project):

    openstack project create --domain default --description "Demo Project" myproject

    +-------------+----------------------------------+
    | Field       | Value                            |
    +-------------+----------------------------------+
    | description | Demo Project                     |
    | domain_id   | default                          |
    | enabled     | True                             |
    | id          | 231ad6e7ebba47d6a1e57e1cc07ae446 |
    | is_domain   | False                            |
    | name        | myproject                        |
    | parent_id   | default                          |
    | tags        | []                               |
    +-------------+----------------------------------+

Create the myuser user (the password, myuser_PASS, is yours to choose):

    openstack user create --domain default --password-prompt myuser

    User Password:
    Repeat User Password:
    +---------------------+----------------------------------+
    | Field               | Value                            |
    +---------------------+----------------------------------+
    | domain_id           | default                          |
    | enabled             | True                             |
    | id                  | aeda23aa78f44e859900e22c24817832 |
    | name                | myuser                           |
    | options             | {}                               |
    | password_expires_at | None                             |
    +---------------------+----------------------------------+

Create the myrole role:

    openstack role create myrole

    +-----------+----------------------------------+
    | Field     | Value                            |
    +-----------+----------------------------------+
    | domain_id | None                             |
    | id        | 997ce8d05fc143ac97d83fdfb5998552 |
    | name      | myrole                           |
    +-----------+----------------------------------+

Add the myuser user to the myproject project with the myrole role:

    openstack role add --project myproject --user myuser myrole

1.4 Verification

Unset the OS_AUTH_URL and OS_PASSWORD environment variables:

    unset OS_AUTH_URL OS_PASSWORD

As the admin user, request an authentication token (the password is ADMIN_PASS):

    openstack --os-auth-url http://controller:5000/v3 \
      --os-project-domain-name Default --os-user-domain-name Default \
      --os-project-name admin --os-username admin token issue

    Password:
    +------------+-----------------------------------------------------------------+
    | Field      | Value                                                           |
    +------------+-----------------------------------------------------------------+
    | expires    | 2016-02-12T20:14:07.056119Z                                     |
    | id         | gAAAAABWvi7_B8kKQD9wdXac8MoZiQldmjEO643d-e_j-XXq9AmIegIbA7UHGPv |
    |            | atnN21qtOMjCFWX7BReJEQnVOAj3nclRQgAYRsfSU_MrsuWb4EDtnjU7HEpoBb4 |
    |            | o6ozsA_NmFWEpLeKy0uNn_WeKbAhYygrsmQGA49dclHVnz-OMVLiyM9ws       |
    | project_id | 343d245e850143a096806dfaefa9afdc                                |
    | user_id    | ac3377633149401296f6c0d92d79dc16                                |
    +------------+-----------------------------------------------------------------+

As the myuser user, request an authentication token (the password is the myuser_PASS value you chose):

    openstack --os-auth-url http://controller:5000/v3 \
      --os-project-domain-name Default --os-user-domain-name Default \
      --os-project-name myproject --os-username myuser token issue

    Password:
    +------------+-----------------------------------------------------------------+
    | Field      | Value                                                           |
    +------------+-----------------------------------------------------------------+
    | expires    | 2016-02-12T20:15:39.014479Z                                     |
    | id         | gAAAAABWvi9bsh7vkiby5BpCCnc-JkbGhm9wH3fabS_cY7uabOubesi-Me6IGWW |
    |            | yQqNegDDZ5jw7grI26vvgy1J5nCVwZ_zFRqPiz_qhbq29mgbQLglbkq6FQvzBRQ |
    |            | JcOzq3uwhzNxszJWmzGC7rJE_H0A_a3UFhqv8M4zMRYSbS2YF0MyFmp_U       |
    | project_id | ed0b60bf607743088218b0a533d5943f                                |
    | user_id    | 58126687cbcc4888bfa9ab73a2256f27                                |
    +------------+-----------------------------------------------------------------+

Create the demo-openrc script file (replace MYUSER_PASS with the password of myuser):

    vi demo-openrc

    export OS_PROJECT_DOMAIN_NAME=Default
    export OS_USER_DOMAIN_NAME=Default
    export OS_PROJECT_NAME=myproject
    export OS_USERNAME=myuser
    export OS_PASSWORD=MYUSER_PASS
    export OS_AUTH_URL=http://controller:5000/v3
    export OS_IDENTITY_API_VERSION=3
    export OS_IMAGE_API_VERSION=2

Source a script file to switch the environment variables, here the admin one:

    . admin-openrc

Request an authentication token:

    openstack token issue

    +------------+-----------------------------------------------------------------+
    | Field      | Value                                                           |
    +------------+-----------------------------------------------------------------+
    | expires    | 2016-02-12T20:44:35.659723Z                                     |
    | id         | gAAAAABWvjYj-Zjfg8WXFaQnUd1DMYTBVrKw4h3fIagi5NoEmh21U72SrRv2trl |
    |            | JWFYhLi2_uPR31Igf6A8mH2Rw9kv_bxNo1jbLNPLGzW_u5FC7InFqx0yYtTwa1e |
    |            | eq2b0f6-18KZyQhs7F3teAta143kJEWuNEYET-y7u29y0be1_64KYkM7E       |
    | project_id | 343d245e850143a096806dfaefa9afdc                                |
    | user_id    | ac3377633149401296f6c0d92d79dc16                                |
    +------------+-----------------------------------------------------------------+

2. Install the glance Image service

2.1 Before installing and configuring the Image service, create its database:

    mysql -u root -p
    MariaDB [(none)]> CREATE DATABASE glance;

Grant proper access to the glance database (replace GLANCE_DBPASS with a suitable password):

    MariaDB [(none)]> GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' IDENTIFIED BY 'GLANCE_DBPASS';
    MariaDB [(none)]> GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' IDENTIFIED BY 'GLANCE_DBPASS';

Exit the database client:

    exit

2.2 Create the glance user (user password GLANCE_PASS):

    openstack user create --domain default --password-prompt glance

    User Password:
    Repeat User Password:
    +---------------------+----------------------------------+
    | Field               | Value                            |
    +---------------------+----------------------------------+
    | domain_id           | default                          |
    | enabled             | True                             |
    | id                  | 3f4e777c4062483ab8d9edd7dff829df |
    | name                | glance                           |
    | options             | {}                               |
    | password_expires_at | None                             |
    +---------------------+----------------------------------+

Add the glance user to the service project with the admin role:

    openstack role add --project service --user glance admin

Create the glance service entity:

    openstack service create --name glance --description "OpenStack Image" image

    +-------------+----------------------------------+
    | Field       | Value                            |
    +-------------+----------------------------------+
    | description | OpenStack Image                  |
    | enabled     | True                             |
    | id          | 8c2c7f1b9b5049ea9e63757b5533e6d2 |
    | name        | glance                           |
    | type        | image                            |
    +-------------+----------------------------------+

Create the Image service API endpoints:

    openstack endpoint create --region RegionOne image public http://controller:9292

    +--------------+----------------------------------+
    | Field        | Value                            |
    +--------------+----------------------------------+
    | enabled      | True                             |
    | id           | 340be3625e9b4239a6415d034e98aace |
    | interface    | public                           |
    | region       | RegionOne                        |
    | region_id    | RegionOne                        |
    | service_id   | 8c2c7f1b9b5049ea9e63757b5533e6d2 |
    | service_name | glance                           |
    | service_type | image                            |
    | url          | http://controller:9292           |
    +--------------+----------------------------------+

    openstack endpoint create --region RegionOne image internal http://controller:9292

    +--------------+----------------------------------+
    | Field        | Value                            |
    +--------------+----------------------------------+
    | enabled      | True                             |
    | id           | a6e4b153c2ae4c919eccfdbb7dceb5d2 |
    | interface    | internal                         |
    | region       | RegionOne                        |
    | region_id    | RegionOne                        |
    | service_id   | 8c2c7f1b9b5049ea9e63757b5533e6d2 |
    | service_name | glance                           |
    | service_type | image                            |
    | url          | http://controller:9292           |
    +--------------+----------------------------------+

    openstack endpoint create --region RegionOne image admin http://controller:9292

    +--------------+----------------------------------+
    | Field        | Value                            |
    +--------------+----------------------------------+
    | enabled      | True                             |
    | id           | 0c37ed58103f4300a84ff125a539032d |
    | interface    | admin                            |
    | region       | RegionOne                        |
    | region_id    | RegionOne                        |
    | service_id   | 8c2c7f1b9b5049ea9e63757b5533e6d2 |
    | service_name | glance                           |
    | service_type | image                            |
    | url          | http://controller:9292           |
    +--------------+----------------------------------+

2.3 Install the packages and edit the configuration files

    yum -y install openstack-glance

Edit the API configuration file. Note the difference between GLANCE_DBPASS (the database password) and GLANCE_PASS (the password of the glance service user).

    vi /etc/glance/glance-api.conf

    [database]
    connection = mysql+pymysql://glance:GLANCE_DBPASS@controller/glance

    [keystone_authtoken]
    # Comment out or remove any other options in the [keystone_authtoken] section
    www_authenticate_uri = http://controller:5000
    auth_url = http://controller:5000
    memcached_servers = controller:11211
    auth_type = password
    project_domain_name = Default
    user_domain_name = Default
    project_name = service
    username = glance
    password = GLANCE_PASS

    [paste_deploy]
    flavor = keystone

    [glance_store]
    stores = file,http
    default_store = file
    filesystem_store_datadir = /var/lib/glance/images/

Edit the registry configuration (the registry is removed in the OpenStack S release; again, note the difference between GLANCE_DBPASS and GLANCE_PASS):

    vi /etc/glance/glance-registry.conf

    [database]
    connection = mysql+pymysql://glance:GLANCE_DBPASS@controller/glance

    [keystone_authtoken]
    # Comment out or remove any other options in the [keystone_authtoken] section
    www_authenticate_uri = http://controller:5000
    auth_url = http://controller:5000
    memcached_servers = controller:11211
    auth_type = password
    project_domain_name = Default
    user_domain_name = Default
    project_name = service
    username = glance
    password = GLANCE_PASS

    [paste_deploy]
    flavor = keystone

Populate the glance database (ignore any deprecation messages in the output):

    su -s /bin/sh -c "glance-manage db_sync" glance

It is worth logging in to the glance database to check that the tables were created.

Enable the glance services at boot and start them:

    systemctl enable openstack-glance-api.service openstack-glance-registry.service
    systemctl start openstack-glance-api.service openstack-glance-registry.service

3. Install the nova Compute service

3.1 Before installing and configuring the nova service, create its databases:

    mysql -u root -p

Create the nova_api, nova, nova_cell0, and placement databases:

    MariaDB [(none)]> CREATE DATABASE nova_api;
    MariaDB [(none)]> CREATE DATABASE nova;
    MariaDB [(none)]> CREATE DATABASE nova_cell0;
    MariaDB [(none)]> CREATE DATABASE placement;

Grant proper access to the databases (the passwords here are NOVA_DBPASS and PLACEMENT_DBPASS, which you can set yourself):

    MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_DBPASS';
    MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';
    MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_DBPASS';
    MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';
    MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_DBPASS';
    MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';
    MariaDB [(none)]> GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'localhost' IDENTIFIED BY 'PLACEMENT_DBPASS';
    MariaDB [(none)]> GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'%' IDENTIFIED BY 'PLACEMENT_DBPASS';

Exit the database client:

    exit

3.2 Create the nova service (controller node)

Set the environment variables:

    . admin-openrc

Create the nova user (the password, NOVA_PASS, is yours to choose):

    openstack user create --domain default --password-prompt nova

    User Password:
    Repeat User Password:
    +---------------------+----------------------------------+
    | Field               | Value                            |
    +---------------------+----------------------------------+
    | domain_id           | default                          |
    | enabled             | True                             |
    | id                  | 8a7dbf5279404537b1c7b86c033620fe |
    | name                | nova                             |
    | options             | {}                               |
    | password_expires_at | None                             |
    +---------------------+----------------------------------+

Add the nova user to the service project with the admin role:

    openstack role add --project service --user nova admin

Create the nova service entity:

    openstack service create --name nova --description "OpenStack Compute" compute

    +-------------+----------------------------------+
    | Field       | Value                            |
    +-------------+----------------------------------+
    | description | OpenStack Compute                |
    | enabled     | True                             |
    | id          | 060d59eac51b4594815603d75a00aba2 |
    | name        | nova                             |
    | type        | compute                          |
    +-------------+----------------------------------+

Create the Compute API service endpoints:

    openstack endpoint create --region RegionOne compute public http://controller:8774/v2.1

    +--------------+----------------------------------+
    | Field        | Value                            |
    +--------------+----------------------------------+
    | enabled      | True                             |
    | id           | 3c1caa473bfe4390a11e7177894bcc7b |
    | interface    | public                           |
    | region       | RegionOne                        |
    | region_id    | RegionOne                        |
    | service_id   | 060d59eac51b4594815603d75a00aba2 |
    | service_name | nova                             |
    | service_type | compute                          |
    | url          | http://controller:8774/v2.1      |
    +--------------+----------------------------------+

    openstack endpoint create --region RegionOne compute internal http://controller:8774/v2.1

    +--------------+----------------------------------+
    | Field        | Value                            |
    +--------------+----------------------------------+
    | enabled      | True                             |
    | id           | e3c918de680746a586eac1f2d9bc10ab |
    | interface    | internal                         |
    | region       | RegionOne                        |
    | region_id    | RegionOne                        |
    | service_id   | 060d59eac51b4594815603d75a00aba2 |
    | service_name | nova                             |
    | service_type | compute                          |
    | url          | http://controller:8774/v2.1      |
    +--------------+----------------------------------+

    openstack endpoint create --region RegionOne compute admin http://controller:8774/v2.1

    +--------------+----------------------------------+
    | Field        | Value                            |
    +--------------+----------------------------------+
    | enabled      | True                             |
    | id           | 38f7af91666a47cfb97b4dc790b94424 |
    | interface    | admin                            |
    | region       | RegionOne                        |
    | region_id    | RegionOne                        |
    | service_id   | 060d59eac51b4594815603d75a00aba2 |
    | service_name | nova                             |
    | service_type | compute                          |
    | url          | http://controller:8774/v2.1      |
    +--------------+----------------------------------+

Create the placement user (password PLACEMENT_PASS):

    openstack user create --domain default --password-prompt placement

    User Password:
    Repeat User Password:
    +---------------------+----------------------------------+
    | Field               | Value                            |
    +---------------------+----------------------------------+
    | domain_id           | default                          |
    | enabled             | True                             |
    | id                  | fa742015a6494a949f67629884fc7ec8 |
    | name                | placement                        |
    | options             | {}                               |
    | password_expires_at | None                             |
    +---------------------+----------------------------------+

Add the placement user to the service project with the admin role:

    openstack role add --project service --user placement admin
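The glance, nova, and placement accounts above are each created with the same two commands. As a minimal sketch that is not part of the original walkthrough, the helper below wraps that pair. The names run, create_service_user, and DRY_RUN are hypothetical, and the sketch swaps the interactive --password-prompt for the client's --password option so that it can run unattended. It assumes admin-openrc has already been sourced.

```shell
#!/bin/sh
# Hypothetical helper: create a service user and give it the admin role in
# the service project. With DRY_RUN=1 (the default in this sketch) the
# commands are only printed, so they can be reviewed before anything runs.

run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

create_service_user() {
    user=$1
    password=$2
    run openstack user create --domain default --password "$password" "$user"
    run openstack role add --project service --user "$user" admin
}

# Prints the two commands for the placement user.
create_service_user placement PLACEMENT_PASS
```

Setting DRY_RUN=0 in the environment executes the commands instead of printing them. A password passed with --password ends up in the shell history and the process list, which is one reason to keep --password-prompt for interactive use.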
Create the Placement API entry in the service catalog:

    openstack service create --name placement --description "Placement API" placement

    +-------------+----------------------------------+
    | Field       | Value                            |
    +-------------+----------------------------------+
    | description | Placement API                    |
    | enabled     | True                             |
    | id          | 2d1a27022e6e4185b86adac4444c495f |
    | name        | placement                        |
    | type        | placement                        |
    +-------------+----------------------------------+

Create the Placement API service endpoints:

    openstack endpoint create --region RegionOne placement public http://controller:8778

    +--------------+----------------------------------+
    | Field        | Value                            |
    +--------------+----------------------------------+
    | enabled      | True                             |
    | id           | 2b1b2637908b4137a9c2e0470487cbc0 |
    | interface    | public                           |
    | region       | RegionOne                        |
    | region_id    | RegionOne                        |
    | service_id   | 2d1a27022e6e4185b86adac4444c495f |
    | service_name | placement                        |
    | service_type | placement                        |
    | url          | http://controller:8778           |
    +--------------+----------------------------------+

    openstack endpoint create --region RegionOne placement internal http://controller:8778

    +--------------+----------------------------------+
    | Field        | Value                            |
    +--------------+----------------------------------+
    | enabled      | True                             |
    | id           | 02bcda9a150a4bd7993ff4879df971ab |
    | interface    | internal                         |
    | region       | RegionOne                        |
    | region_id    | RegionOne                        |
    | service_id   | 2d1a27022e6e4185b86adac4444c495f |
    | service_name | placement                        |
    | service_type | placement                        |
    | url          | http://controller:8778           |
    +--------------+----------------------------------+

    openstack endpoint create --region RegionOne placement admin http://controller:8778

    +--------------+----------------------------------+
    | Field        | Value                            |
    +--------------+----------------------------------+
    | enabled      | True                             |
    | id           | 3d71177b9e0f406f98cbff198d74b182 |
    | interface    | admin                            |
    | region       | RegionOne                        |
    | region_id    | RegionOne                        |
    | service_id   | 2d1a27022e6e4185b86adac4444c495f |
    | service_name | placement                        |
    | service_type | placement                        |
    | url          | http://controller:8778           |
    +--------------+----------------------------------+
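The three endpoint commands for a service differ only in the interface name, so they can be generated in a loop. The following is a rough sketch rather than the author's method: run, create_endpoints, and DRY_RUN are hypothetical names, and the region is assumed to be RegionOne as everywhere else in this guide.

```shell
#!/bin/sh
# Hypothetical helper: create the public, internal and admin endpoints of one
# service. With DRY_RUN=1 (the default in this sketch) the commands are only
# printed instead of being executed.

run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

create_endpoints() {
    service_type=$1
    url=$2
    for interface in public internal admin; do
        run openstack endpoint create --region RegionOne \
            "$service_type" "$interface" "$url"
    done
}

# Prints the three commands used for the Placement API.
create_endpoints placement http://controller:8778
```

The same call with image and http://controller:9292, or compute and http://controller:8774/v2.1, reproduces the glance and nova endpoint commands.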
3.3 Install nova on the controller node and edit the configuration files

3.3.1 Installation

    yum -y install openstack-nova-api openstack-nova-conductor openstack-nova-console openstack-nova-novncproxy openstack-nova-scheduler openstack-nova-placement-api

3.3.2 Edit /etc/nova/nova.conf

Note the difference between the database passwords (NOVA_DBPASS, PLACEMENT_DBPASS) and the service user passwords (NOVA_PASS, PLACEMENT_PASS). Keep each comment on its own line: these files do not support inline comments, so text that follows a value on the same line is read as part of the value.

    vi /etc/nova/nova.conf

    [DEFAULT]
    # Enable only the compute and metadata APIs
    enabled_apis = osapi_compute,metadata
    # RabbitMQ message queue access
    transport_url = rabbit://openstack:RABBIT_PASS@controller
    # IP address of the controller node's management interface
    my_ip = 10.0.0.11
    # Enable support for the Networking service
    use_neutron = true
    # Disable the Compute firewall driver by using the no-op firewall driver
    firewall_driver = nova.virt.firewall.NoopFirewallDriver

    [api_database]
    connection = mysql+pymysql://nova:NOVA_DBPASS@controller/nova_api

    [database]
    connection = mysql+pymysql://nova:NOVA_DBPASS@controller/nova

    [placement_database]
    connection = mysql+pymysql://placement:PLACEMENT_DBPASS@controller/placement

    [api]
    auth_strategy = keystone

    [keystone_authtoken]
    # Comment out any other options in the [keystone_authtoken] section
    auth_url = http://controller:5000/v3
    memcached_servers = controller:11211
    auth_type = password
    project_domain_name = Default
    user_domain_name = Default
    project_name = service
    username = nova
    password = NOVA_PASS

    [vnc]
    enabled = true
    server_listen = $my_ip
    server_proxyclient_address = $my_ip

    [glance]
    api_servers = http://controller:9292

    [oslo_concurrency]
    lock_path = /var/lib/nova/tmp

    [placement]
    # Comment out any other options in the [placement] section
    region_name = RegionOne
    project_domain_name = Default
    project_name = service
    auth_type = password
    user_domain_name = Default
    auth_url = http://controller:5000/v3
    username = placement
    password = PLACEMENT_PASS

3.3.3 Enable access to the Placement API by adding a snippet to this configuration file:

    vi /etc/httpd/conf.d/00-nova-placement-api.conf

The snippet itself is missing from this copy of the article. In the official Rocky installation guide it is a Directory block for /usr/bin that grants access (Require all granted on Apache 2.4).

Restart the httpd service:

    systemctl restart httpd

3.3.4 Populate the databases:

    su -s /bin/sh -c "nova-manage api_db sync" nova
    su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova
    su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova
    su -s /bin/sh -c "nova-manage db sync" nova
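If real passwords were chosen when the databases and users were created, a placeholder such as NOVA_DBPASS left behind in /etc/nova/nova.conf will make the commands above fail to authenticate. As a rough sketch, the hypothetical helper find_placeholders below lists the non-comment lines of a file that still contain one of the placeholder names used in this guide. The name pattern is an assumption based on the password table at the top of the article.

```shell
#!/bin/sh
# Hypothetical helper: print, with line numbers, every line of a config file
# that still contains a placeholder name (RABBIT_PASS, *_DBPASS, *_PASS or
# METADATA_SECRET) before any '#' comment marker.
find_placeholders() {
    grep -nE '^[^#]*(RABBIT_PASS|[A-Z]+_DBPASS|[A-Z]+_PASS|METADATA_SECRET)' "$1"
}

# Example: check the nova configuration if it exists on this host.
if [ -f /etc/nova/nova.conf ]; then
    find_placeholders /etc/nova/nova.conf || echo "no placeholders left"
fi
```

The function returns a non-zero status when nothing matches, which is what the example relies on to print its message.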
Verify that cell0 and cell1 are registered correctly:

    su -s /bin/sh -c "nova-manage cell_v2 list_cells" nova

    +-------+--------------------------------------+------------------------------------+-------------------------------------------------+----------+
    | Name  | UUID                                 | Transport URL                      | Database Connection                             | Disabled |
    +-------+--------------------------------------+------------------------------------+-------------------------------------------------+----------+
    | cell0 | 00000000-0000-0000-0000-000000000000 | none:/                             | mysql+pymysql://nova:****@controller/nova_cell0 | False    |
    | cell1 | 40aa6629-45c3-4b2d-953a-3e627733380e | rabbit://openstack:****@controller | mysql+pymysql://nova:****@controller/nova       | False    |
    +-------+--------------------------------------+------------------------------------+-------------------------------------------------+----------+

3.3.5 Enable the services at boot and start them

    systemctl enable openstack-nova-api.service openstack-nova-consoleauth openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service
    systemctl start openstack-nova-api.service openstack-nova-consoleauth openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service

3.4 Configure the compute node

3.4.1 Installation

    yum -y install openstack-nova-compute

3.4.2 Edit the configuration file

    vi /etc/nova/nova.conf

    [DEFAULT]
    enabled_apis = osapi_compute,metadata
    transport_url = rabbit://openstack:RABBIT_PASS@controller
    # IP address of the management network interface on the compute node
    my_ip = 10.0.0.31
    use_neutron = true
    firewall_driver = nova.virt.firewall.NoopFirewallDriver

    [api]
    auth_strategy = keystone

    [keystone_authtoken]
    auth_url = http://controller:5000/v3
    memcached_servers = controller:11211
    auth_type = password
    project_domain_name = Default
    user_domain_name = Default
    project_name = service
    username = nova
    password = NOVA_PASS

    [vnc]
    enabled = true
    server_listen = 0.0.0.0
    server_proxyclient_address = $my_ip
    novncproxy_base_url = http://controller:6080/vnc_auto.html

    [glance]
    api_servers = http://controller:9292

    [oslo_concurrency]
    lock_path = /var/lib/nova/tmp

    [placement]
    region_name = RegionOne
    project_domain_name = Default
    project_name = service
    auth_type = password
    user_domain_name = Default
    auth_url = http://controller:5000/v3
    username = placement
    password = PLACEMENT_PASS

Check whether the compute node supports hardware acceleration. If the command prints 0, it does not, and the nova configuration must be changed so that libvirt uses QEMU instead of KVM:

    egrep -c '(vmx|svm)' /proc/cpuinfo

    0

    vi /etc/nova/nova.conf

    [libvirt]
    virt_type = qemu

3.4.3 Enable the nova-compute service and its dependencies at boot, and start them:

    systemctl enable libvirtd.service openstack-nova-compute.service
    systemctl start libvirtd.service openstack-nova-compute.service

3.5 Add the compute node to the cell database

Run the following commands on the controller node:

    . admin-openrc

Confirm that the compute host is present in the database:

    openstack compute service list --service nova-compute

    +----+--------------+---------+------+---------+-------+----------------------------+
    | ID | Binary       | Host    | Zone | Status  | State | Updated At                 |
    +----+--------------+---------+------+---------+-------+----------------------------+
    | 10 | nova-compute | compute | nova | enabled | up    | 2019-04-25T07:42:12.000000 |
    +----+--------------+---------+------+---------+-------+----------------------------+

Discover the compute hosts:

    su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

    Found 2 cell mappings.
    Skipping cell0 since it does not contain hosts.
    Getting computes from cell 'cell1': 40aa6629-45c3-4b2d-953a-3e627733380e
    Checking host mapping for compute host 'localhost.localdomain': 8cca78f7-7a00-4594-95af-d8835b7aceaf
    Creating host mapping for compute host 'localhost.localdomain': 8cca78f7-7a00-4594-95af-d8835b7aceaf
    Found 1 unmapped computes in cell: 40aa6629-45c3-4b2d-953a-3e627733380e

Note: whenever you add new compute nodes, you must register them by running the following command on the controller node:

    nova-manage cell_v2 discover_hosts

Alternatively, set a discovery interval in the configuration file:

    vi /etc/nova/nova.conf

    [scheduler]
    discover_hosts_in_cells_interval = 300

4. Networking service

The management network is 10.0.0.0/24 with gateway 10.0.0.1. The provider network (the name matters, because it is reused in the configuration below) is 203.0.113.0/24 with gateway 203.0.113.1 and is the physical network.

4.1 Configure the controller node

4.1.1 Before installing and configuring the neutron service, create its database:

    mysql -u root -p
    MariaDB [(none)]> CREATE DATABASE neutron;

Grant proper access to the neutron database:

    MariaDB [(none)]> GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' IDENTIFIED BY 'NEUTRON_DBPASS';
    MariaDB [(none)]> GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY 'NEUTRON_DBPASS';

Exit the database client:

    exit

4.1.2 Create the user, service entity, and API endpoints

    . admin-openrc

Create the neutron user (password NEUTRON_PASS):

    openstack user create --domain default --password-prompt neutron

    User Password:
    Repeat User Password:
    +---------------------+----------------------------------+
    | Field               | Value                            |
    +---------------------+----------------------------------+
    | domain_id           | default                          |
    | enabled             | True                             |
    | id                  | f2ba17f839c44f32ad4d12cb42d92368 |
    | name                | neutron                          |
    | options             | {}                               |
    | password_expires_at | None                             |
    +---------------------+----------------------------------+

Add the neutron user to the service project with the admin role:

    openstack role add --project service --user neutron admin

Create the neutron service entity:

    openstack service create --name neutron --description "OpenStack Networking" network

    +-------------+----------------------------------+
    | Field       | Value                            |
    +-------------+----------------------------------+
    | description | OpenStack Networking             |
    | enabled     | True                             |
    | id          | 4b31d3a4bd1945f6b250e066f65d9d3f |
    | name        | neutron                          |
    | type        | network                          |
    +-------------+----------------------------------+

Create the Networking service API endpoints:

    openstack endpoint create --region RegionOne network public http://controller:9696

    +--------------+----------------------------------+
    | Field        | Value                            |
    +--------------+----------------------------------+
    | enabled      | True                             |
    | id           | 83aed72dc4794cd798a3f7834c8d2d98 |
    | interface    | public                           |
    | region       | RegionOne                        |
    | region_id    | RegionOne                        |
    | service_id   | 4b31d3a4bd1945f6b250e066f65d9d3f |
    | service_name | neutron                          |
    | service_type | network                          |
    | url          | http://controller:9696           |
    +--------------+----------------------------------+

    openstack endpoint create --region RegionOne network internal http://controller:9696

    +--------------+----------------------------------+
    | Field        | Value                            |
    +--------------+----------------------------------+
    | enabled      | True                             |
    | id           | e0663075f4b94bc987d3463b0317e683 |
    | interface    | internal                         |
    | region       | RegionOne                        |
    | region_id    | RegionOne                        |
    | service_id   | 4b31d3a4bd1945f6b250e066f65d9d3f |
    | service_name | neutron                          |
    | service_type | network                          |
    | url          | http://controller:9696           |
    +--------------+----------------------------------+

    openstack endpoint create --region RegionOne network admin http://controller:9696

    +--------------+----------------------------------+
    | Field        | Value                            |
    +--------------+----------------------------------+
    | enabled      | True                             |
    | id           | 1ee14289c9374dffb5db92a5c112fc4e |
    | interface    | admin                            |
    | region       | RegionOne                        |
    | region_id    | RegionOne                        |
    | service_id   | f71529314dab4a4d8eca427e701d209e |
    | service_name | neutron                          |
    | service_type | network                          |
    | url          | http://controller:9696           |
    +--------------+----------------------------------+

4.1.3 Configure the networking options

Install the components:

    yum -y install openstack-neutron openstack-neutron-ml2 openstack-neutron-linuxbridge ebtables

Configure the server component:

    vi /etc/neutron/neutron.conf

    [database]
    # Comment out or remove any other connection options in the [database] section
    connection = mysql+pymysql://neutron:NEUTRON_DBPASS@controller/neutron

    [DEFAULT]
    # Enable the Modular Layer 2 (ML2) plug-in
    core_plugin = ml2
    # Router service
    service_plugins = router
    # Allow overlapping IP addresses
    allow_overlapping_ips = True
    transport_url = rabbit://openstack:RABBIT_PASS@controller
    auth_strategy = keystone
    # Notify Compute of network topology changes
    notify_nova_on_port_status_changes = true
    notify_nova_on_port_data_changes = true

    [keystone_authtoken]
    www_authenticate_uri = http://controller:5000
    auth_url = http://controller:5000
    memcached_servers = controller:11211
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    project_name = service
    username = neutron
    password = NEUTRON_PASS

    [nova]
    auth_url = http://controller:5000
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    region_name = RegionOne
    project_name = service
    username = nova
    password = NOVA_PASS

    [oslo_concurrency]
    lock_path = /var/lib/neutron/tmp

Configure the Modular Layer 2 (ML2) plug-in:

    vi /etc/neutron/plugins/ml2/ml2_conf.ini

    [ml2]
    # Enable flat, VLAN, and VXLAN networks
    type_drivers = flat,vlan,vxlan
    tenant_network_types = vxlan
    # Enable the Linux bridge and layer-2 population mechanisms
    mechanism_drivers = linuxbridge,l2population
    # Enable the port security extension driver
    extension_drivers = port_security

    [ml2_type_flat]
    # The name configured here is provider; use the same name when creating the external network
    flat_networks = provider

    [ml2_type_vxlan]
    # VXLAN network identifier range
    vni_ranges = 1:1000

    [securitygroup]
    # Enable ipset to make security group rules more efficient
    enable_ipset = true

Configure the Linux bridge agent:

    vi /etc/neutron/plugins/ml2/linuxbridge_agent.ini

    [linux_bridge]
    # Keep the name provider consistent with ml2_conf.ini. Replace
    # PROVIDER_INTERFACE_NAME with the name of the controller's physical
    # provider network interface (for example eth1), not an IP address.
    physical_interface_mappings = provider:PROVIDER_INTERFACE_NAME

    [vxlan]
    enable_vxlan = true
    # Replace OVERLAY_INTERFACE_IP_ADDRESS with the controller's management IP
    # address, that is, the IP it uses to communicate with the outside: 10.0.0.11
    local_ip = OVERLAY_INTERFACE_IP_ADDRESS
    l2_population = true

    [securitygroup]
    enable_security_group = true
    firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

Configure the layer-3 agent:

    vi /etc/neutron/l3_agent.ini

    [DEFAULT]
    interface_driver = linuxbridge

Configure the DHCP agent:

    vi /etc/neutron/dhcp_agent.ini

    [DEFAULT]
    interface_driver = linuxbridge
    dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq
    enable_isolated_metadata = true

Configure the metadata agent:

    vi /etc/neutron/metadata_agent.ini

    [DEFAULT]
    nova_metadata_host = controller
    metadata_proxy_shared_secret = METADATA_SECRET

Configure the Compute service to use the Networking service (controller node):

    vi /etc/nova/nova.conf

    [neutron]
    url = http://controller:9696
    auth_url = http://controller:5000
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    region_name = RegionOne
    project_name = service
    username = neutron
    password = NEUTRON_PASS
    service_metadata_proxy = true
    metadata_proxy_shared_secret = METADATA_SECRET

The Networking service initialization scripts expect a symbolic link /etc/neutron/plugin.ini that points to the ML2 plug-in configuration file /etc/neutron/plugins/ml2/ml2_conf.ini. If the link does not exist, create it:

    ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

Populate the database:

    su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

Restart the nova-api service:

    systemctl restart openstack-nova-api.service

Start the Networking services and enable them at boot:

    systemctl enable neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service
    systemctl start neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service

Enable and start the layer-3 service:

    systemctl enable neutron-l3-agent.service
    systemctl start neutron-l3-agent.service

4.2 Configure the compute node

4.2.1 Install

    yum -y install openstack-neutron-linuxbridge ebtables ipset

4.2.2 Edit the configuration files

    vi /etc/neutron/neutron.conf

    # In the [database] section, comment out any connection options,
    # because compute nodes do not access the database directly.

    [DEFAULT]
    transport_url = rabbit://openstack:RABBIT_PASS@controller

    [keystone_authtoken]
    # Comment out any other options in the [keystone_authtoken] section.
    www_authenticate_uri = http://controller:5000
    auth_url = http://controller:5000
    memcached_servers = controller:11211
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    project_name = service
    username = neutron
    password = NEUTRON_PASS

    [oslo_concurrency]
    lock_path = /var/lib/neutron/tmp

Configure the Linux bridge agent:

    vi /etc/neutron/plugins/ml2/linuxbridge_agent.ini

    [linux_bridge]
    # Replace PROVIDER_INTERFACE_NAME with the name of the compute node's physical
    # provider network interface (for example eth1), not an IP address.
    physical_interface_mappings = provider:PROVIDER_INTERFACE_NAME

    [vxlan]
    enable_vxlan = true
    # Replace OVERLAY_INTERFACE_IP_ADDRESS with the compute node's management IP address (10.0.0.31 in this guide).
    local_ip = OVERLAY_INTERFACE_IP_ADDRESS
    l2_population = true

    [securitygroup]
    enable_security_group = true
    firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

Configure the Compute service to use the Networking service (compute node):

    vi /etc/nova/nova.conf

    [neutron]
    url = http://controller:9696
    auth_url = http://controller:5000
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    region_name = RegionOne
    project_name = service
    username = neutron
    password = NEUTRON_PASS

Restart the Compute service:

    systemctl restart openstack-nova-compute.service

Start the Linux bridge agent and enable it at boot:

    systemctl enable neutron-linuxbridge-agent.service
    systemctl start neutron-linuxbridge-agent.service

4.3 Verify (controller node)

    . admin-openrc
    openstack extension list --network

    +------+-------+-------------+
    | Name | Alias | Description |
    +------+-------+-------------+
    | Default Subnetpools | default-subnetpools | Provides ability to mark and use a subnetpool as the default. |
    | Availability Zone | availability_zone | The availability zone extension. |
    | Network Availability Zone | network_availability_zone | Availability zone support for network. |
    | Auto Allocated Topology Services | auto-allocated-topology | Auto Allocated Topology Services. |
    | Neutron L3 Configurable external gateway mode | ext-gw-mode | Extension of the router abstraction for specifying whether SNAT should occur on the external gateway |
    | Port Binding | binding | Expose port bindings of a virtual port to external application |
    | agent | agent | The agent management extension. |
    | Subnet Allocation | subnet_allocation | Enables allocation of subnets from a subnet pool |
    | L3 Agent Scheduler | l3_agent_scheduler | Schedule routers among l3 agents |
    | Neutron external network | external-net | Adds external network attribute to network resource. |
    | Tag support for resources with standard attribute: subnet, trunk, router, network, policy, subnetpool, port, security_group, floatingip | standard-attr-tag | Enables to set tag on resources with standard attribute. |
    | Neutron Service Flavors | flavors | Flavor specification for Neutron advanced services. |
    | Network MTU | net-mtu | Provides MTU attribute for a network resource. |
    | Network IP Availability | network-ip-availability | Provides IP availability data for each network and subnet. |
    | Quota management support | quotas | Expose functions for quotas management per tenant |
    | If-Match constraints based on revision_number | revision-if-match | Extension indicating that If-Match based on revision_number is supported. |
    | Availability Zone Filter Extension | availability_zone_filter | Add filter parameters to AvailabilityZone resource |
    | HA Router extension | l3-ha | Adds HA capability to routers. |
    | Filter parameters validation | filter-validation | Provides validation on filter parameters. |
    | Multi Provider Network | multi-provider | Expose mapping of virtual networks to multiple physical networks |
    | Quota details management support | quota_details | Expose functions for quotas usage statistics per project |
    | Address scope | address-scope | Address scopes extension. |
    | Neutron Extra Route | extraroute | Extra routes configuration for L3 router |
    | Network MTU (writable) | net-mtu-writable | Provides a writable MTU attribute for a network resource. |
    | Empty String Filtering Extension | empty-string-filtering | Allow filtering by attributes with empty string value |
    | Subnet service types | subnet-service-types | Provides ability to set the subnet service_types field |
    | Neutron Port MAC address regenerate | port-mac-address-regenerate | Network port MAC address regenerate |
    | Resource timestamps | standard-attr-timestamp | Adds created_at and updated_at fields to all Neutron resources that have Neutron standard attributes. |
    | Provider Network | provider | Expose mapping of virtual networks to physical networks |
    | Neutron Service Type Management | service-type | API for retrieving service providers for Neutron advanced services |
    | Router Flavor Extension | l3-flavors | Flavor support for routers. |
    | Port Security | port-security | Provides port security |
    | Neutron Extra DHCP options | extra_dhcp_opt | Extra options configuration for DHCP. For example PXE boot options to DHCP clients can be specified (e.g. tftp-server, server-ip-address, bootfile-name) |
    | Port filtering on security groups | port-security-groups-filtering | Provides security groups filtering when listing ports |
    | Resource revision numbers | standard-attr-revisions | This extension will display the revision number of neutron resources. |
    | Pagination support | pagination | Extension that indicates that pagination is enabled. |
    | Sorting support | sorting | Extension that indicates that sorting is enabled. |
    | security-group | security-group | The security groups extension. |
    | DHCP Agent Scheduler | dhcp_agent_scheduler | Schedule networks among dhcp agents |
    | Floating IP Port Details Extension | fip-port-details | Add port_details attribute to Floating IP resource |
    | Router Availability Zone | router_availability_zone | Availability zone support for router. |
    | RBAC Policies | rbac-policies | Allows creation and modification of policies that control tenant access to resources. |
    | standard-attr-description | standard-attr-description | Extension to add descriptions to standard attributes |
    | IP address substring filtering | ip-substring-filtering | Provides IP address substring filtering when listing ports |
    | Neutron L3 Router | router | Router abstraction for basic L3 forwarding between L2 Neutron networks and access to external networks via a NAT gateway. |
    | Allowed Address Pairs | allowed-address-pairs | Provides allowed address pairs |
    | Port Bindings Extended | binding-extended | Expose port bindings of a virtual port to external application |
    | project_id field enabled | project-id | Extension that indicates that project_id field is enabled. |
    | Distributed Virtual Router | dvr | Enables configuration of Distributed Virtual Routers. |
    +------+-------+-------------+

    openstack network agent list

    +--------------------------------------+--------------------+------------+-------------------+-------+-------+---------------------------+
    | ID                                   | Agent Type         | Host       | Availability Zone | Alive | State | Binary                    |
    +--------------------------------------+--------------------+------------+-------------------+-------+-------+---------------------------+
    | 14c0e433-2c4b-4c3f-827e-b2923f9865cc | L3 agent           | controller | nova              | :-)   | UP    | neutron-l3-agent          |
    | 2cae088a-d493-45ff-8845-406d0bf33b6d | Metadata agent     | controller | None              | :-)   | UP    | neutron-metadata-agent    |
    | 3a2b193a-b6e7-43c4-97e2-6a47508ce707 | DHCP agent         | controller | nova              | :-)   | UP    | neutron-dhcp-agent        |
    | 8f94ade1-fe3b-49dd-a117-a7bd01de172d | Linux bridge agent | controller | None              | :-)   | UP    | neutron-linuxbridge-agent |
    | b49588ce-f3a9-40cd-bb69-9cba054be2b7 | Linux bridge agent | compute    | None              | :-)   | UP    | neutron-linuxbridge-agent |
    +--------------------------------------+--------------------+------------+-------------------+-------+-------+---------------------------+

5 Install the dashboard

    yum -y install openstack-dashboard

5.1 Edit the configuration files

    vi /etc/openstack-dashboard/local_settings

    OPENSTACK_HOST = "controller"
    # Hosts allowed to access the dashboard. Replace with your own IP addresses
    # or domain names; ['*'] accepts all hosts.
    ALLOWED_HOSTS = ['example1', 'example2']
    # Configure memcached session storage and comment out any other session storage configuration.
    CACHES = {
        'default': {
            'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',
            'LOCATION': 'controller:11211',
        },
    }
    OPENSTACK_KEYSTONE_URL = "http://%s:5000/v3" % OPENSTACK_HOST
    OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True
    # API versions.
    OPENSTACK_API_VERSIONS = {
        "identity": 3,
        "image": 2,
        "volume": 2,
    }
    # Users created through the dashboard are placed in the Default domain.
    OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = "Default"
    # Users created through the dashboard are given the myrole role.
    OPENSTACK_KEYSTONE_DEFAULT_ROLE = "myrole"

Set any of the following options to True to expose the feature in the web interface; an option set to False is not shown. The defaults can also be kept.

    OPENSTACK_NEUTRON_NETWORK = {
        …
        'enable_router': True,
        'enable_quotas': True,
        'enable_distributed_router': True,
        'enable_ha_router': True,
        'enable_lb': True,
        'enable_firewall': True,
        'enable_vpn': True,
        'enable_fip_topology_check': True,
    }
    # UTC is recommended.
    TIME_ZONE = "UTC"

    vi /etc/httpd/conf.d/openstack-dashboard.conf

Add the following line below WSGISocketPrefix run/wsgi:

    WSGIApplicationGroup %{GLOBAL}

5.2 Restart the services

    systemctl restart httpd.service memcached.service

5.3 Verify

Open http://controller/dashboard/auth/login and log in with:

Domain: default
User name: admin
Password: ADMIN_PASS

Note: the client must be able to resolve the name controller; otherwise replace controller with the IP address.

6 Install the Cinder block storage service

Install and configure the controller node!
6.1 Create the database (CINDER_DBPASS)

    mysql -u root -p
    MariaDB [(none)]> CREATE DATABASE cinder;

Grant access privileges:

    MariaDB [(none)]> GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'localhost' IDENTIFIED BY 'CINDER_DBPASS';
    MariaDB [(none)]> GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'%' IDENTIFIED BY 'CINDER_DBPASS';

Exit the database client:

    exit

6.2 Create the cinder user (CINDER_PASS)

    . admin-openrc
    openstack user create --domain default --password-prompt cinder
    User Password:
    Repeat User Password:

    +---------------------+----------------------------------+
    | Field               | Value                            |
    +---------------------+----------------------------------+
    | domain_id           | default                          |
    | enabled             | True                             |
    | id                  | 9d7e33de3e1a498390353819bc7d245d |
    | name                | cinder                           |
    | options             | {}                               |
    | password_expires_at | None                             |
    +---------------------+----------------------------------+

    openstack role add --project service --user cinder admin

6.3 Create the cinderv2 and cinderv3 service entities (the Block Storage service requires two service entities)

    openstack service create --name cinderv2 --description "OpenStack Block Storage" volumev2

    +-------------+----------------------------------+
    | Field       | Value                            |
    +-------------+----------------------------------+
    | description | OpenStack Block Storage          |
    | enabled     | True                             |
    | id          | eb9fd245bdbc414695952e93f29fe3ac |
    | name        | cinderv2                         |
    | type        | volumev2                         |
    +-------------+----------------------------------+

    openstack service create --name cinderv3 --description "OpenStack Block Storage" volumev3

    +-------------+----------------------------------+
    | Field       | Value                            |
    +-------------+----------------------------------+
    | description | OpenStack Block Storage          |
    | enabled     | True                             |
    | id          | ab3bbbef780845a1a283490d281e7fda |
    | name        | cinderv3                         |
    | type        | volumev3                         |
    +-------------+----------------------------------+

6.4 Create the Block Storage service API endpoints

The parentheses in the URL are escaped with backslashes so that the shell does not interpret them.

    openstack endpoint create --region RegionOne volumev2 public http://controller:8776/v2/%\(project_id\)s

    +--------------+------------------------------------------+
    | Field        | Value                                    |
    +--------------+------------------------------------------+
    | enabled      | True                                     |
    | id           | 513e73819e14460fb904163f41ef3759         |
    | interface    | public                                   |
    | region       | RegionOne                                |
    | region_id    | RegionOne                                |
    | service_id   | eb9fd245bdbc414695952e93f29fe3ac         |
    | service_name | cinderv2                                 |
    | service_type | volumev2                                 |
    | url          | http://controller:8776/v2/%(project_id)s |
    +--------------+------------------------------------------+

    openstack endpoint create --region RegionOne volumev2 internal http://controller:8776/v2/%\(project_id\)s

    +--------------+------------------------------------------+
    | Field        | Value                                    |
    +--------------+------------------------------------------+
    | enabled      | True                                     |
    | id           | 6436a8a23d014cfdb69c586eff146a32         |
    | interface    | internal                                 |
    | region       | RegionOne                                |
    | region_id    | RegionOne                                |
    | service_id   | eb9fd245bdbc414695952e93f29fe3ac         |
    | service_name | cinderv2                                 |
    | service_type | volumev2                                 |
    | url          | http://controller:8776/v2/%(project_id)s |
    +--------------+------------------------------------------+

    openstack endpoint create --region RegionOne volumev2 admin http://controller:8776/v2/%\(project_id\)s

    +--------------+------------------------------------------+
    | Field        | Value                                    |
    +--------------+------------------------------------------+
    | enabled      | True                                     |
    | id           | e652cf84dd334f359ae9b045a2c91d96         |
    | interface    | admin                                    |
    | region       | RegionOne                                |
    | region_id    | RegionOne                                |
    | service_id   | eb9fd245bdbc414695952e93f29fe3ac         |
    | service_name | cinderv2                                 |
    | service_type | volumev2                                 |
    | url          | http://controller:8776/v2/%(project_id)s |
    +--------------+------------------------------------------+

    openstack endpoint create --region RegionOne volumev3 public http://controller:8776/v3/%\(project_id\)s

    +--------------+------------------------------------------+
    | Field        | Value                                    |
    +--------------+------------------------------------------+
    | enabled      | True                                     |
    | id           | 03fa2c90153546c295bf30ca86b1344b         |
    | interface    | public                                   |
    | region       | RegionOne                                |
    | region_id    | RegionOne                                |
    | service_id   | ab3bbbef780845a1a283490d281e7fda         |
    | service_name | cinderv3                                 |
    | service_type | volumev3                                 |
    | url          | http://controller:8776/v3/%(project_id)s |
    +--------------+------------------------------------------+

    openstack endpoint create --region RegionOne volumev3 internal http://controller:8776/v3/%\(project_id\)s

    +--------------+------------------------------------------+
    | Field        | Value                                    |
    +--------------+------------------------------------------+
    | enabled      | True                                     |
    | id           | 94f684395d1b41068c70e4ecb11364b2         |
    | interface    | internal                                 |
    | region       | RegionOne                                |
    | region_id    | RegionOne                                |
    | service_id   | ab3bbbef780845a1a283490d281e7fda         |
    | service_name | cinderv3                                 |
    | service_type | volumev3                                 |
    | url          | http://controller:8776/v3/%(project_id)s |
    +--------------+------------------------------------------+

    openstack endpoint create --region RegionOne volumev3 admin http://controller:8776/v3/%\(project_id\)s

    +--------------+------------------------------------------+
    | Field        | Value                                    |
    +--------------+------------------------------------------+
    | enabled      | True                                     |
    | id           | 4511c28a0f9840c78bacb25f10f62c98         |
    | interface    | admin                                    |
    | region       | RegionOne                                |
    | region_id    | RegionOne                                |
    | service_id   | ab3bbbef780845a1a283490d281e7fda         |
    | service_name | cinderv3                                 |
    | service_type | volumev3                                 |
    | url          | http://controller:8776/v3/%(project_id)s |
    +--------------+------------------------------------------+

6.5 Install and configure the components

    yum -y install openstack-cinder

    vi /etc/cinder/cinder.conf

    [database]
    connection = mysql+pymysql://cinder:CINDER_DBPASS@controller/cinder

    [DEFAULT]
    transport_url = rabbit://openstack:RABBIT_PASS@controller
    auth_strategy = keystone
    my_ip = 10.0.0.11

    [keystone_authtoken]
    # Comment out any other options in the [keystone_authtoken] section.
    www_authenticate_uri = http://controller:5000
    auth_url = http://controller:5000
    memcached_servers = controller:11211
    auth_type = password
    project_domain_id = default
    user_domain_id = default
    project_name = service
    username = cinder
    password = CINDER_PASS

    [oslo_concurrency]
    lock_path = /var/lib/cinder/tmp

Populate the Block Storage database:

    su -s /bin/sh -c "cinder-manage db sync" cinder

6.6 Configure Compute to use Block Storage (controller node)

    vi /etc/nova/nova.conf
    [cinder]
    os_region_name = RegionOne

6.7 Restart the services

    systemctl restart openstack-nova-api.service
    systemctl enable openstack-cinder-api.service openstack-cinder-scheduler.service
    systemctl start openstack-cinder-api.service openstack-cinder-scheduler.service

Install and configure the storage node!

6.8 Install the packages

Some distributions include LVM by default.

    yum -y install lvm2 device-mapper-persistent-data
    systemctl enable lvm2-lvmetad.service
    systemctl start lvm2-lvmetad.service
    pvcreate /dev/sdb                   # Physical volume "/dev/sdb" successfully created
    vgcreate cinder-volumes /dev/sdb    # Volume group "cinder-volumes" successfully created

Add a filter to the devices section of /etc/lvm/lvm.conf, leaving the other settings in that section unchanged:

    vi /etc/lvm/lvm.conf

    devices {
        filter = [ "a/sdb/", "r/.*/" ]
    }

If the storage node also uses LVM on the operating system disk, the associated device must be added to the filter as well. For example, if /dev/sda contains the operating system:

    filter = [ "a/sda/", "a/sdb/", "r/.*/" ]

6.9 Install and configure the components

    yum -y install openstack-cinder targetcli python-keystone

    vi /etc/cinder/cinder.conf

    [database]
    connection = mysql+pymysql://cinder:CINDER_DBPASS@controller/cinder

    [DEFAULT]
    transport_url = rabbit://openstack:RABBIT_PASS@controller
    auth_strategy = keystone
    # Replace MANAGEMENT_INTERFACE_IP_ADDRESS with the IP address of the
    # management network interface on the storage node.
    my_ip = MANAGEMENT_INTERFACE_IP_ADDRESS
    enabled_backends = lvm
    glance_api_servers = http://controller:9292

    [keystone_authtoken]
    # Comment out any other options in the [keystone_authtoken] section.
    www_authenticate_uri = http://controller:5000
    auth_url = http://controller:5000
    memcached_servers = controller:11211
    auth_type = password
    project_domain_id = default
    user_domain_id = default
    project_name = service
    username = cinder
    password = CINDER_PASS

    [lvm]
    # If the [lvm] section does not exist, create it.
    volume_driver = cinder.volume.drivers.lvm.LVMVolumeDriver
    volume_group = cinder-volumes
    iscsi_protocol = iscsi
    iscsi_helper = lioadm

    [oslo_concurrency]
    lock_path = /var/lib/cinder/tmp

Enable the services at boot and start them:

    systemctl enable openstack-cinder-volume.service target.service
    systemctl start openstack-cinder-volume.service target.service

(Editor: IT) |
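The Block Storage steps stop once the services are started, without a check like the one in section 4.3 for networking. Below is a minimal sketch of such a check, not part of the original guide. It assumes the `openstack` client on the controller accepts the usual `-f value -c COLUMN` output options for `openstack volume service list`, and that healthy services report `up` in the State column. The helper name `check_volume_services` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: reads "Binary State" pairs on stdin (one service per line)
# and returns non-zero if any service is not "up" or if nothing was reported.
check_volume_services() {
    awk '
        NF >= 2 {
            seen = 1
            # Report every service whose state is anything other than "up".
            if ($2 != "up") { print "DOWN: " $1; bad = 1 }
        }
        END {
            if (!seen) { print "no services reported"; exit 2 }
            exit bad + 0
        }
    '
}

# Assumed usage on the controller node, after ". admin-openrc":
#   openstack volume service list -f value -c Binary -c State | check_volume_services
```

The function only parses text, so it can be tried against pasted sample output before being pointed at a live deployment. If cinder-scheduler or cinder-volume is missing or reported as down, the service logs under /var/log/cinder are the usual place to look next.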