Using Hadoop 2.x on Ubuntu (7): Using the HDFS Cluster

Date: 2014-12-25 22:14 Source: linux.it.net.cn Author: IT

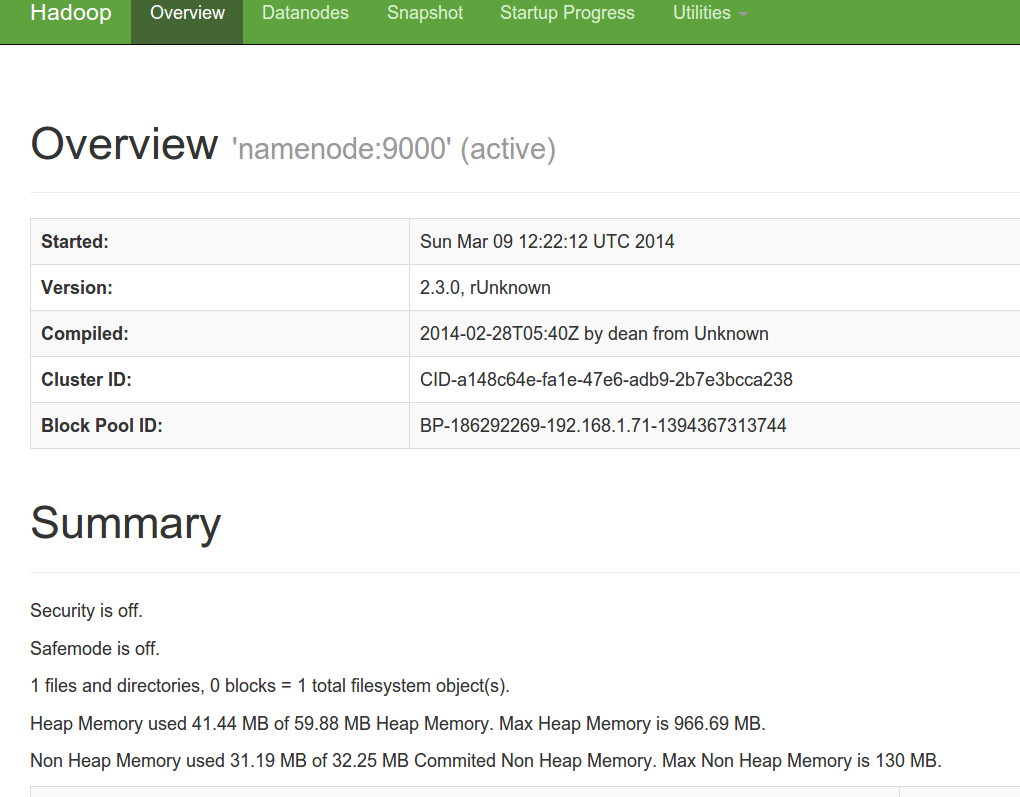

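Namenode admin site

The namenode serves a web site, on port 50070 by default in Hadoop 2.x. A screenshot from my cluster is included as image 1.

If the page loads, the namenode service has at least started normally.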
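The same "is it up?" check can be scripted. The sketch below only tests whether something accepts TCP connections on the web port; it says nothing about whether HDFS itself is healthy. The host name namenode and port 50070 come from this article, while the function name is_port_open and the 3-second timeout are my own choices.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the host name and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name-resolution failures.
        return False
```

On a machine that can resolve the cluster's host names, is_port_open("namenode", 50070) should return True once the namenode web site is up.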

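Logs

Under Utilities->Log on the site you can read the namenode log, which includes the Java version and the parameters used at startup.

The log also records the operation that copied the file t.txt: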

    2014-03-10 02:43:59,676 INFO org.apache.hadoop.hdfs.StateChange: BLOCK* allocateBlock: /test/t.txt._COPYING_. BP-186292269-192.168.1.71-1394367313744 blk_1073741825_1001{blockUCState=UNDER_CONSTRUCTION, primaryNodeIndex=-1, replicas=[ReplicaUnderConstruction[[DISK]DS-4255c8d4-3ca1-4c5a-8184-83310513fb73:NORMAL|RBW], ReplicaUnderConstruction[[DISK]DS-f988d67f-cdb2-4d57-bd72-0e652527f022:NORMAL|RBW], ReplicaUnderConstruction[[DISK]DS-5f64aac5-bbff-480a-80b8-fee21719cd31:NORMAL|RBW]]}
    2014-03-10 02:44:00,147 INFO BlockStateChange: BLOCK* addStoredBlock: blockMap updated: 192.168.1.75:50010 is added to blk_1073741825_1001{blockUCState=UNDER_CONSTRUCTION, primaryNodeIndex=-1, replicas=[ReplicaUnderConstruction[[DISK]DS-4255c8d4-3ca1-4c5a-8184-83310513fb73:NORMAL|RBW], ReplicaUnderConstruction[[DISK]DS-f988d67f-cdb2-4d57-bd72-0e652527f022:NORMAL|RBW], ReplicaUnderConstruction[[DISK]DS-5f64aac5-bbff-480a-80b8-fee21719cd31:NORMAL|RBW]]} size 0
    2014-03-10 02:44:00,150 INFO BlockStateChange: BLOCK* addStoredBlock: blockMap updated: 192.168.1.73:50010 is added to blk_1073741825_1001{blockUCState=UNDER_CONSTRUCTION, primaryNodeIndex=-1, replicas=[ReplicaUnderConstruction[[DISK]DS-4255c8d4-3ca1-4c5a-8184-83310513fb73:NORMAL|RBW], ReplicaUnderConstruction[[DISK]DS-f988d67f-cdb2-4d57-bd72-0e652527f022:NORMAL|RBW], ReplicaUnderConstruction[[DISK]DS-5f64aac5-bbff-480a-80b8-fee21719cd31:NORMAL|RBW]]} size 0
    2014-03-10 02:44:00,153 INFO BlockStateChange: BLOCK* addStoredBlock: blockMap updated: 192.168.1.74:50010 is added to blk_1073741825_1001{blockUCState=UNDER_CONSTRUCTION, primaryNodeIndex=-1, replicas=[ReplicaUnderConstruction[[DISK]DS-4255c8d4-3ca1-4c5a-8184-83310513fb73:NORMAL|RBW], ReplicaUnderConstruction[[DISK]DS-f988d67f-cdb2-4d57-bd72-0e652527f022:NORMAL|RBW], ReplicaUnderConstruction[[DISK]DS-5f64aac5-bbff-480a-80b8-fee21719cd31:NORMAL|RBW]]} size 0
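These entries are easier to read once reduced to "which datanode stored which block". Here is a minimal sketch that pulls that out of the addStoredBlock lines above. The regular expression is derived only from these sample lines, so other Hadoop versions may need a different pattern. The names ADD_STORED_BLOCK and replicas_by_block are hypothetical helpers, not part of Hadoop.

```python
import re

# Captures the datanode address and the block name from "addStoredBlock"
# entries. Pattern based on the sample lines in this article only.
ADD_STORED_BLOCK = re.compile(
    r"addStoredBlock: blockMap updated: "
    r"(?P<datanode>\d+\.\d+\.\d+\.\d+:\d+) is added to (?P<block>blk_\d+_\d+)"
)


def replicas_by_block(log_lines):
    """Map each block name to the datanode addresses that reported storing it."""
    result = {}
    for line in log_lines:
        match = ADD_STORED_BLOCK.search(line)
        if match:
            # Keep the addresses in the order the namenode logged them.
            result.setdefault(match.group("block"), []).append(match.group("datanode"))
    return result
```

Fed the four entries above, it skips the allocateBlock line and lists three datanode addresses for blk_1073741825_1001, one per replica.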

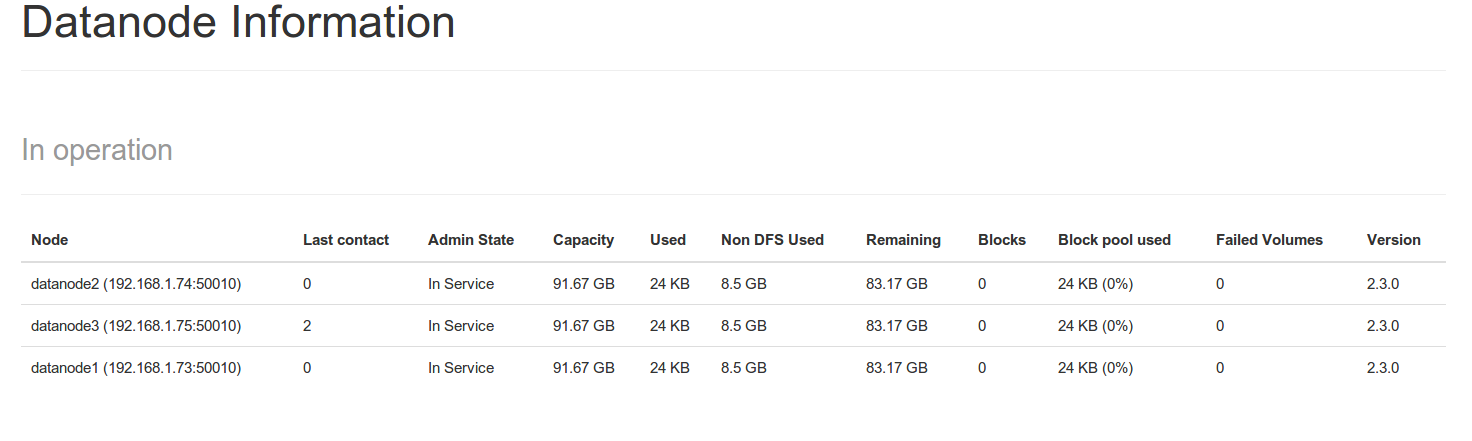

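Why does only datanode1 show up?

The cause was that I had forgotten to add the required entries to /etc/hosts on datanode2 and datanode3. After I added them and restarted the services, all three datanodes soon appeared on the site.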
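A mistake like this is easy to catch before starting the services. The sketch below reports which host names have no entry in a hosts file. It assumes the missing configuration was the usual "IP name" lines for the cluster nodes, which the article does not spell out. The function name missing_hostnames is my own.

```python
def missing_hostnames(hosts_text, required):
    """Return the names in `required` that no line of hosts_text defines."""
    defined = set()
    for line in hosts_text.splitlines():
        # Drop any trailing comment, then split "IP name [alias ...]" on whitespace.
        fields = line.split("#", 1)[0].split()
        if len(fields) >= 2:
            # Everything after the IP address is a host name or an alias.
            defined.update(fields[1:])
    return [name for name in required if name not in defined]
```

Running it on each node with the contents of /etc/hosts and the list ["namenode", "datanode1", "datanode2", "datanode3"] should return an empty list when the file is complete.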

File System Shell

Run the following command directly on the namenode server:

    hduser@namenode:~$ hdfs dfs -ls hdfs://namenode:9000/
    Found 1 items
    drwxr-xr-x - hduser supergroup 0 2014-03-10 02:30 hdfs://namenode:9000/test

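As the listing shows, the test directory has been created successfully.

Official documentation: http://hadoop.apache.org/docs/current/hadoop-project-dist/hadoop-common/FileSystemShell.html

Getting help

hdfs --help lists the top-level commands.

To see the usage of a specific dfs command, use -help as in this example: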

    hduser@namenode:~$ hdfs dfs -help cp
    -cp [-f] [-p] <src> ... <dst>: Copy files that match the file pattern <src> to a
    destination. When copying multiple files, the destination
    must be a directory. Passing -p preserves access and
    modification times, ownership and the mode. Passing -f
    overwrites the destination if it already exists.

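To copy a local file into HDFS, give src the file:/// prefix; a path without a scheme is resolved against the configured default file system instead.

    hdfs dfs -cp file:///home/hduser/t.txt hdfs://namenode:9000/test/

Here t.txt is a local file.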
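When the copy is driven from a script, it is easy to forget the file:// scheme. The sketch below builds the argument list for the command above and refuses relative paths. The -f and -p flags are the ones listed in the help text; the function name build_cp_command and its parameters are hypothetical.

```python
def build_cp_command(local_path, hdfs_dir, overwrite=False, preserve=False):
    """Build the argv list for `hdfs dfs -cp` from a local file into HDFS."""
    if not local_path.startswith("/"):
        # file:// needs an absolute path; "file://t.txt" would treat t.txt as a host.
        raise ValueError("local_path must be an absolute path")
    argv = ["hdfs", "dfs", "-cp"]
    if overwrite:
        argv.append("-f")  # overwrite the destination if it already exists
    if preserve:
        argv.append("-p")  # keep access/modification times, ownership and mode
    # "file://" plus an absolute path yields the file:///... form used above.
    argv.append("file://" + local_path)
    argv.append(hdfs_dir)
    return argv
```

The returned list can be passed to subprocess.run(argv, check=True) on a machine where the hdfs command is on the PATH. Passing a list rather than a single string avoids shell quoting problems.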

Listing the directory again shows the copied file:

    hduser@namenode:~$ hdfs dfs -ls hdfs://namenode:9000/test
    Found 1 items
    -rw-r--r-- 3 hduser supergroup 4 2014-03-10 02:44 hdfs://namenode:9000/test/t.txt
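The columns of a listing line are permissions, replication factor, owner, group, size in bytes, modification date and time, and path. Below is a small sketch that splits an entry line into those fields. It assumes the layout shown in this article, with whitespace between the time and the path; the "Found N items" header is not an entry and should be skipped by the caller. LsEntry and parse_ls_line are names I made up for illustration.

```python
from typing import NamedTuple, Optional


class LsEntry(NamedTuple):
    permissions: str
    replication: Optional[int]  # None for directories, which print "-"
    owner: str
    group: str
    size: int
    modified: str
    path: str


def parse_ls_line(line: str) -> LsEntry:
    """Parse one entry line of `hdfs dfs -ls` output as shown in this article."""
    # Split into at most 8 fields so a path containing spaces stays in one piece.
    perms, repl, owner, group, size, date, time, path = line.strip().split(None, 7)
    return LsEntry(
        permissions=perms,
        replication=None if repl == "-" else int(repl),
        owner=owner,
        group=group,
        size=int(size),
        modified=f"{date} {time}",
        path=path,
    )
```

For the t.txt entry the replication field is 3, which matches the three addStoredBlock entries in the namenode log.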

(Editor: IT)
